Key insights from FIMA Europe: break data silos, boost quality, and unlock the full potential of real-time data to transform financial services by 2025/26.

James White

26 Nov. 2024

The financial services industry is experiencing a major data transformation, with enterprise data management emerging as the biggest opportunity for innovation in the coming years. Last week, I attended the FIMA event on Financial Data Innovation in London, where I had the opportunity to participate in an Innovation Fireside Chat to talk about the benefits of implementing a modern data platform. We explored how financial organizations can overcome challenges such as fragmented architectures and poor data quality to unlock the full potential of data. Here are some key takeaways from the discussion.

Data Management Leads the Innovation Agenda

Audience polling during the event revealed that data management is poised to be the most significant driver of innovation for investment banks and asset managers in 2025/26—even ahead of the buzz around generative AI. This reflects the growing recognition that foundational improvements in how data is integrated, secured, governed, and exploited are critical to achieving transformative business outcomes.

There was agreement across all the keynote speakers that “innovation will only work with good quality data.” But, as the head of data architecture at HSBC put it, “Data quality is still addressed as an afterthought.” The remark highlights the need for organizations to prioritize quality and governance from the outset, rather than addressing issues reactively.

Fragmented, Siloed, and Complex: The State of Data in Financial Services

When asked to describe their current data architecture, participants repeatedly pointed to three words: fragmented, siloed, and complex. These characteristics underscore the operational and cultural challenges that financial institutions face in breaking down barriers to data access and collaboration.

At Conduktor, I see firsthand how these issues hinder the potential of enterprise data as a strategic asset in an organization. I also see how the rise of AI has increased the need for real-time, high-quality data, requiring organizations to rethink governance and security.

Traditionally, controls like data quality and compliance have been applied downstream in data lakes or warehouses. However, modern demands call for a ‘shift-left’ approach that pushes those controls upstream to the streaming layer. Data is then protected and compliant at source, which makes it more useful for processing and analytics and avoids downstream inefficiencies.
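To make the shift-left idea concrete, here is a minimal sketch of a quality gate that checks each record before it enters a stream, so bad data is rejected at source rather than found later in a warehouse. It is illustrative only: the `StreamGate` class, the rule names, and the payment fields (`account_id`, `amount`, `currency`) are all assumptions made for this example. It uses plain Python lists in place of a real topic and dead-letter queue, and it does not represent any particular product's API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Record = dict[str, Any]
Rule = Callable[[Record], bool]

# Hypothetical quality rules for a payment event; field names are assumptions.
RULES: dict[str, Rule] = {
    "has_account_id": lambda r: bool(r.get("account_id")),
    "amount_is_positive": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] > 0,
    "currency_is_iso_code": lambda r: isinstance(r.get("currency"), str)
    and len(r["currency"]) == 3,
}


def failed_rules(record: Record) -> list[str]:
    """Return the names of every rule the record violates."""
    return [name for name, rule in RULES.items() if not rule(record)]


@dataclass
class StreamGate:
    """Stand-in for a control point in front of a stream (illustration only)."""

    accepted: list[Record] = field(default_factory=list)
    rejected: list[tuple[Record, list[str]]] = field(default_factory=list)

    def publish(self, record: Record) -> bool:
        """Accept the record only if it passes every rule."""
        failures = failed_rules(record)
        if failures:
            # Keep the record and the reasons, like a dead-letter queue would.
            self.rejected.append((record, failures))
            return False
        self.accepted.append(record)
        return True


if __name__ == "__main__":
    gate = StreamGate()
    gate.publish({"account_id": "CH-001", "amount": 125.0, "currency": "CHF"})
    gate.publish({"account_id": "", "amount": -5, "currency": "francs"})
    print(len(gate.accepted), "accepted;", len(gate.rejected), "rejected")
```

Keeping the rejected records together with the reasons they failed gives the owning team something to act on, which is the practical difference between enforcing quality at source and discovering it downstream.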

The shift isn’t without challenges: immature tooling, performance trade-offs, and skill gaps in distributed systems such as Apache Kafka often slow progress. Large organizations face further hurdles with inconsistent processes, redundant resources, and unclear ownership. These problems only get worse when organizations use a mixture of cloud and on-premises infrastructure.

To address this, many are moving toward a federated model that balances central oversight for compliance with decentralized autonomy for innovation. At the FIMA event, 60% of attendees said they were either fully invested in a data mesh or considering a move towards one, but this can be a long road (both technically and culturally) that requires the right balance between federated governance and centralization.

Data Innovation Opportunities in Banking and Asset Management

Historically, real-time data in financial services has been primarily associated with credit decisioning and fraud detection. While these remain critical applications, organizations are increasingly expanding the use of real-time data.

From gaining operational insights to optimizing resource allocation, real-time data streaming is becoming essential for understanding and responding to the fast-paced financial landscape. For example, by ensuring timely, high-quality data flows across systems, institutions can make more informed decisions and deliver better outcomes for their customers.

Managing Sensitive/PII data in real-time streaming operations

One customer example I discussed during the session last week involved a Swiss financial services company navigating a cloud migration under strict encryption requirements. By leveraging our proxy architecture, they were able to enforce encryption centrally without requiring extensive application-level changes. This approach allowed them to meet regulatory deadlines while maintaining flexibility for future innovation.

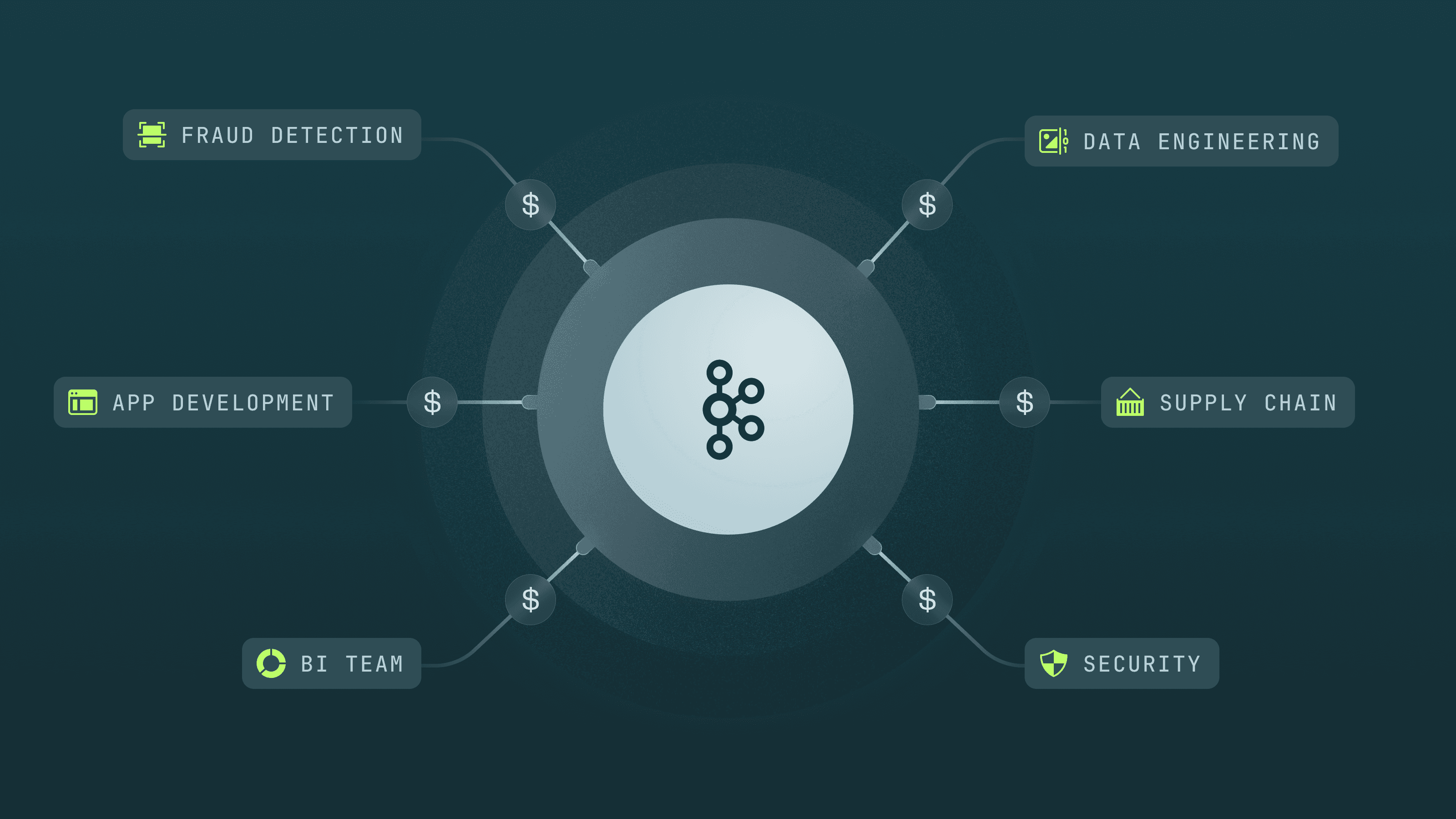

Empowering teams to deliver value faster with a federated control framework

One of Europe’s top financial institutions, managing assets exceeding €1 trillion, is driving data innovation and accelerating project delivery through a federated governance framework. By equipping platform teams to enforce centralized standards while giving developers ownership of their data and applications, they eliminate operational bottlenecks and foster autonomy. This balanced approach is expected to boost productivity by at least 30% and accelerate project delivery by 25%, showcasing how federated data governance can unlock value while maintaining compliance.

Bridging Legacy Systems with Modern Data Architectures

For organizations reliant on legacy systems like mainframes—still used for critical functions by 70% of Fortune 500 companies—unlocking the value of their data is a significant challenge. Legacy data is often inaccessible to modern applications, but streaming and middleware solutions provide a seamless way to extract, process, and share this data across new systems.

Tools like Kafka Connect enable data extraction from incompatible legacy systems, while Apache Flink unifies batch and stream processing to support real-time use cases. This approach allows businesses to decouple old and new environments, ensuring legacy systems remain operational while new systems consume and process data asynchronously. By enabling an incremental migration path, organizations can modernize at their own pace, reducing costs and ensuring smooth data flows between legacy and modern architectures.
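The decoupling described above rests on one idea: the legacy side appends records to a log, and each new system reads from that log at its own pace using its own offset. The sketch below simulates that idea in plain Python. It is not the Kafka Connect or Flink API; the `Topic` class, the consumer names, and the `txn_id` field are hypothetical and exist only to illustrate the pattern.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Append-only in-memory log standing in for a topic (illustration only)."""

    records: list[dict] = field(default_factory=list)
    offsets: dict[str, int] = field(default_factory=dict)

    def append(self, record: dict) -> int:
        """Called by the legacy side; returns the offset of the new record."""
        self.records.append(record)
        return len(self.records) - 1

    def poll(self, group: str, max_records: int = 10) -> list[dict]:
        """Return the next unread records for this consumer and advance its offset."""
        start = self.offsets.get(group, 0)
        batch = self.records[start:start + max_records]
        self.offsets[group] = start + len(batch)
        return batch


if __name__ == "__main__":
    topic = Topic()

    # The legacy system keeps writing without knowing who reads.
    for i in range(3):
        topic.append({"txn_id": i})

    # A new service reads part of the backlog, then catches up later.
    first = topic.poll("risk-service", max_records=2)
    topic.append({"txn_id": 3})
    second = topic.poll("risk-service")

    # A second consumer added later still sees the full history.
    history = topic.poll("reporting-service")
    print(len(first), len(second), len(history))
```

Because each consumer tracks its own position, a new system can be added or paused without any change on the legacy side, which is what makes an incremental migration possible.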

The Road Ahead

For financial services organizations looking to modernize their data platforms, the key takeaway from the Fireside Chat is clear: focus on foundational elements like data quality, governance, and architecture. As you plan for 2025/26, here are three actionable recommendations:

Prioritize Data Quality Early: Don’t treat it as an afterthought—embed governance and quality controls into your data pipeline from the start.

Break Down Data Silos: Foster cross-functional collaboration to address fragmentation and build a unified approach to data management.

Leverage Real-Time Streaming Beyond the Usual Use Cases: Expand the scope of real-time data to enhance operational insights and drive better decisions.

If you’d like to learn more about how Conduktor helps companies like ING, Capital Group or Credit Agricole, reach out to us. Together, we can turn your data into a true competitive advantage.