Kafka excels at moving data in transit, but not so much at protecting data at rest. Let's explore the current state of affairs through a typical scenario you may find in your organization, and our solution to this challenge.

June 5, 2023

Kafka: from Technical POC to Serious Business.

Picture this: Kafka, an exceptional platform that enables seamless data sharing across an entire enterprise. It all starts innocently enough, with a team tackling a simple use case, perhaps log management or telemetry.

As their confidence grows and their proficiency in Kafka deepens, it's time to level up. The stakes increase as the team moves on to business applications, a progression that's especially rapid if your Kafka is cloud-based.

Then, because you are a serious business, it's time to tackle the security of your data.

Data privacy

Regulations

Data leaks

Cyber threats

Phishing

...

There are so many reasons to encrypt data!

The Kafka Paradox: Stellar in Transit, Struggles at Rest.

Kafka excels in securing network communications with TLS or mTLS, setting up a fortress-like security system for your data during transit.

However, it falls short when it comes to field-level data encryption, leaving your Personally Identifiable Information (PII) and other data subject to the General Data Protection Regulation (GDPR) potentially exposed.
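For comparison, protection in transit is purely a matter of client configuration. The sketch below builds mTLS settings using the standard Apache Kafka client configuration keys; the class name, paths and password are placeholders for illustration. Note what it does not do: once a record reaches the broker, its fields are written to disk exactly as the producer sent them.

```java
import java.util.Properties;

/**
 * mTLS settings for a Kafka client, using standard Apache Kafka client configuration keys.
 * Paths and passwords are placeholders; a single password for all stores is a simplification.
 * This protects data in transit only: brokers still write record values to disk as produced.
 */
final class MtlsClientConfig {
    private MtlsClientConfig() {}

    static Properties mtls(String bootstrapServers, String truststorePath,
                           String keystorePath, String password) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("security.protocol", "SSL");                // TLS on every broker connection
        props.put("ssl.truststore.location", truststorePath); // CA certificates used to verify brokers
        props.put("ssl.truststore.password", password);
        props.put("ssl.keystore.location", keystorePath);     // client certificate: the "m" in mTLS
        props.put("ssl.keystore.password", password);
        props.put("ssl.key.password", password);
        return props;
    }
}
```

Mutual authentication also requires the brokers to demand client certificates (broker setting ssl.client.auth=required). Either way, any consumer with valid credentials and access to the topic reads every field in clear.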

Encryption can also be your secret weapon for handling data erasure in regulated environments. You encrypt your data, and if you ever need to delete it, you simply discard the key. This is what we call Crypto Shredding!
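A minimal sketch of crypto shredding, assuming one AES-256-GCM key per data subject (here, per customer) and an in-memory map standing in for the KMS; ShreddingKeyStore and its methods are hypothetical names. The encrypted records stay in the topic untouched: discarding the subject's key is what makes them unreadable.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/**
 * Crypto shredding sketch: one AES-256-GCM key per data subject.
 * The in-memory map is a stand-in for a real KMS (assumption for illustration).
 */
final class ShreddingKeyStore {
    private static final int IV_BYTES = 12;  // 96-bit nonce, the recommended size for GCM
    private static final int TAG_BITS = 128; // authentication tag length
    private final Map<String, SecretKey> keysBySubject = new HashMap<>();
    private final SecureRandom random = new SecureRandom();

    /** Encrypts a field value with the subject's key, creating the key on first use. */
    String encrypt(String subjectId, String plaintext) {
        try {
            SecretKey key = keysBySubject.computeIfAbsent(subjectId, id -> newKey());
            byte[] iv = new byte[IV_BYTES];
            random.nextBytes(iv);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ct = cipher.doFinal(plaintext.getBytes(StandardCharsets.UTF_8));
            byte[] out = new byte[IV_BYTES + ct.length]; // layout: iv | ciphertext+tag
            System.arraycopy(iv, 0, out, 0, IV_BYTES);
            System.arraycopy(ct, 0, out, IV_BYTES, ct.length);
            return Base64.getEncoder().encodeToString(out);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("encryption failed", e);
        }
    }

    /** Returns the plaintext, or empty if the subject's key has been shredded. */
    Optional<String> decrypt(String subjectId, String encoded) {
        SecretKey key = keysBySubject.get(subjectId);
        if (key == null) {
            return Optional.empty(); // key discarded: the data is effectively deleted
        }
        try {
            byte[] in = Base64.getDecoder().decode(encoded);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, in, 0, IV_BYTES));
            byte[] pt = cipher.doFinal(in, IV_BYTES, in.length - IV_BYTES);
            return Optional.of(new String(pt, StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("decryption failed", e);
        }
    }

    /** "Deletes" every record of a subject by discarding its key. */
    void shred(String subjectId) {
        keysBySubject.remove(subjectId);
    }

    private static SecretKey newKey() {
        try {
            KeyGenerator gen = KeyGenerator.getInstance("AES");
            gen.init(256);
            return gen.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("key generation failed", e);
        }
    }
}
```

In a real deployment the key lives in the KMS, and shredding means destroying it there, including any backups: a surviving copy of the key anywhere would make the data readable again.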

Before this, engineers were duplicating data into new topics by building simple Spring Boot or Kafka Streams applications, just to remove the fields another team was not supposed to access. That need is gone now: the original topic is the single source of truth, and teams can fully capitalize on the write-once/read-many pattern (produce once, consume many times). Each consumer application can be given a different visibility level; you could either:

view the data with the fields still encrypted

or see partially decrypted data

or even see fully decrypted data
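One way to picture these visibility levels, as a sketch: each consumer is granted a set of fields it may decrypt, and everything else is returned exactly as stored. The decrypt function is injected so that any cipher or KMS can sit behind it; VisibilityPolicy and viewFor are hypothetical names.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.function.UnaryOperator;

/**
 * Per-consumer visibility over one topic (produce once, consume many times).
 * Fields the consumer is not granted stay as ciphertext; the cipher is injected (assumption).
 */
final class VisibilityPolicy {
    private final Set<String> encryptedFields;   // fields stored encrypted in the topic
    private final UnaryOperator<String> decrypt; // e.g. AES-GCM backed by a KMS

    VisibilityPolicy(Set<String> encryptedFields, UnaryOperator<String> decrypt) {
        this.encryptedFields = Set.copyOf(encryptedFields);
        this.decrypt = decrypt;
    }

    /** Returns the record as seen by a consumer allowed to decrypt the given fields. */
    Map<String, String> viewFor(Map<String, String> storedRecord, Set<String> allowed) {
        Map<String, String> view = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : storedRecord.entrySet()) {
            String field = e.getKey();
            boolean decryptable = encryptedFields.contains(field) && allowed.contains(field);
            view.put(field, decryptable ? decrypt.apply(e.getValue()) : e.getValue());
        }
        return view;
    }
}
```

An empty grant yields the encrypted view, a subset yields partially decrypted data, and the full set yields fully decrypted data, all read from the same topic.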

In the end, the lack of native field-level encryption in Kafka's security model cannot be overlooked. When protecting sensitive data, field-level encryption is not a luxury: it's a necessity, a requirement. It's the shield you need to safeguard your data from unauthorized access and breaches.

You must equip yourself with the right tools to ensure comprehensive data security. As data continues to grow in importance and regulatory requirements become ever more stringent, effective field-level encryption is no longer optional: it's a must.

Time to Encrypt! From POC to Enterprise-Wide Adoption

Your CSO has given you a new mission: he will define which fields must be encrypted, and you must ensure these fields are encrypted and decrypted flawlessly wherever your enterprise works with data. You are an advanced Software Engineer: time to write a library in Java!

The first version of your library might take shape quite swiftly. But don't forget, documentation is crucial and integration tests can be arduous.

Still, when the dust settles, there it is - your library in your preferred language, ready for deployment.

Version 1.0 is live. 👏

Time to add Schema Registry management

As the library spreads across teams, feedback starts rolling in. Top of the request list? Schema Registry management. You take it in stride and add support for:

Avro

JSON Schema

Protocol Buffers

Your library is now aware of the Schema Registry and handles all these data formats! Naturally, you make sure this new version is backward-compatible with the existing version of your library: you don't want to break anything or force teams to migrate, yet.

Version 1.1 is born. 👏

The journey doesn't stop there. Your teams are working with increasingly complex data and spot a bug in your library: it does not support encrypting nested fields. Time to fix it. This necessary upgrade leads you to Version 1.2. 👏
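The nested-field fix essentially means addressing fields by path rather than by top-level name. A minimal sketch, assuming records have been converted into nested Maps (as a JSON parser would typically produce) and that paths are dotted, such as payment.card.visa; NestedFieldTransformer is a hypothetical name, and arrays are deliberately not handled.

```java
import java.util.Map;
import java.util.function.UnaryOperator;

/**
 * Applies a transformation (encryption or decryption) to a nested field addressed by a
 * dotted path. Records are modelled as nested Maps (assumption for illustration).
 */
final class NestedFieldTransformer {
    private NestedFieldTransformer() {}

    /** Transforms the value at dottedPath in place; returns false if the path is absent. */
    @SuppressWarnings("unchecked")
    static boolean transform(Map<String, Object> record, String dottedPath, UnaryOperator<String> fn) {
        String[] parts = dottedPath.split("\\.");
        Map<String, Object> current = record;
        for (int i = 0; i < parts.length - 1; i++) {
            Object child = current.get(parts[i]);
            if (!(child instanceof Map)) {
                return false; // intermediate object missing: nothing to transform
            }
            current = (Map<String, Object>) child;
        }
        String leaf = parts[parts.length - 1];
        Object value = current.get(leaf);
        if (!(value instanceof String)) {
            return false; // only string leaves are handled in this sketch
        }
        current.put(leaf, fn.apply((String) value));
        return true;
    }
}
```

Returning false for a missing path, rather than throwing, lets one rule apply to records where optional nested objects are absent.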

Your Security team has heard about your cool library, now used by many teams in the company. They want you to rely on the enterprise KMS (Key Management System) so that key usage leaves audit trails and is secured to their standards (they don't trust Software Engineers, who know nothing about security, right?). They give you access to the KMS (along with all the credentials and tokens that come with it), so you add KMS support to your library.

You're on a roll!

Python enters the game

Enter new users, requesting the library in their language of choice, such as Python. Damn, your library is written in Java. You don't know Python, you don't like the language: you need help. You enlist a team familiar with Python, and soon you're recreating your library with them, in a foreign language you don't want to learn.

Unstoppable, you develop Version 1.3 in both Java and Python and decide to maintain both. 👏

A significant issue emerges during testing: data encrypted with the Python library can't be decrypted by the Java version. Time to add end-to-end tests to your library that mix languages, to make sure everything works smoothly.
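Cross-language bugs like this often come from details nobody wrote down: nonce size, envelope layout, Base64 variant, text encoding, associated data. A sketch of pinning every one of them in an explicit envelope, so that a Python or Go port can be tested against the same bytes; the layout (version byte, 12-byte IV, AES-256-GCM ciphertext and tag, standard Base64, field name as associated data) and the FieldEnvelope name are assumptions for illustration, not an established standard.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/**
 * Language-neutral field envelope (illustrative layout):
 *   base64std( version (1 byte) | iv (12 bytes) | AES-256-GCM ciphertext | 16-byte tag )
 * Plaintext is UTF-8 and the field name is bound as GCM associated data.
 */
final class FieldEnvelope {
    static final byte VERSION = 1;
    private static final int IV_BYTES = 12;
    private static final int TAG_BITS = 128;
    private static final SecureRandom RANDOM = new SecureRandom();

    private FieldEnvelope() {}

    static String seal(SecretKey key, String fieldName, String plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            RANDOM.nextBytes(iv);
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            c.updateAAD(fieldName.getBytes(StandardCharsets.UTF_8)); // must match on every platform
            byte[] ct = c.doFinal(plaintext.getBytes(StandardCharsets.UTF_8)); // ciphertext || tag
            ByteBuffer buf = ByteBuffer.allocate(1 + IV_BYTES + ct.length);
            buf.put(VERSION).put(iv).put(ct);
            return Base64.getEncoder().encodeToString(buf.array());
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("seal failed", e);
        }
    }

    static String open(SecretKey key, String fieldName, String envelope) {
        byte[] raw = Base64.getDecoder().decode(envelope);
        if (raw.length < 1 + IV_BYTES + TAG_BITS / 8 || raw[0] != VERSION) {
            throw new IllegalArgumentException("unsupported or truncated envelope");
        }
        try {
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, raw, 1, IV_BYTES));
            c.updateAAD(fieldName.getBytes(StandardCharsets.UTF_8));
            byte[] pt = c.doFinal(raw, 1 + IV_BYTES, raw.length - 1 - IV_BYTES);
            return new String(pt, StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("open failed: wrong key, wrong field or corrupted data", e);
        }
    }
}
```

A cross-language test then only needs shared fixtures: a known key, a field name, and an envelope sealed by one implementation and opened by the other. The version byte leaves room to change the layout later without breaking old records.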

In addition to language compatibility, another request lands on your desk: key rotation management. This requires a more complex interface contract, and you decide to store key information (which key encrypted what) in the headers of every record going through your library.
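Storing the key material itself next to the data would defeat the encryption, so a common pattern is to put only a key identifier in the record headers and resolve it at read time. A sketch, with headers modelled as a Map<String, byte[]> (mirroring Kafka's byte-array header values), a hypothetical header name enc-key-id, and an in-memory keyring standing in for the KMS.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import java.util.HashMap;
import java.util.Map;
import javax.crypto.Cipher;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/**
 * Key-rotation sketch: each record carries the identifier of the key that encrypted it,
 * never the key itself. The header name and in-memory keyring are assumptions.
 */
final class RotatingKeyring {
    static final String KEY_ID_HEADER = "enc-key-id"; // hypothetical header name
    private static final int IV_BYTES = 12;
    private final Map<String, SecretKey> keys = new HashMap<>();
    private final SecureRandom random = new SecureRandom();
    private String currentKeyId;

    /** Registers a new key version and makes it the one used for new writes. */
    void rotateTo(String keyId, SecretKey key) {
        keys.put(keyId, key);
        currentKeyId = keyId;
    }

    /** Encrypts with the current key and records that key's id in the headers. */
    String encrypt(String plaintext, Map<String, byte[]> headers) {
        if (currentKeyId == null) throw new IllegalStateException("no key registered");
        try {
            byte[] iv = new byte[IV_BYTES];
            random.nextBytes(iv);
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.ENCRYPT_MODE, keys.get(currentKeyId), new GCMParameterSpec(128, iv));
            byte[] ct = c.doFinal(plaintext.getBytes(StandardCharsets.UTF_8));
            byte[] out = new byte[IV_BYTES + ct.length];
            System.arraycopy(iv, 0, out, 0, IV_BYTES);
            System.arraycopy(ct, 0, out, IV_BYTES, ct.length);
            headers.put(KEY_ID_HEADER, currentKeyId.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(out);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("encryption failed", e);
        }
    }

    /** Resolves the key named in the headers, so older records survive rotation. */
    String decrypt(String ciphertext, Map<String, byte[]> headers) {
        byte[] idBytes = headers.get(KEY_ID_HEADER);
        if (idBytes == null) throw new IllegalArgumentException("missing " + KEY_ID_HEADER + " header");
        String keyId = new String(idBytes, StandardCharsets.UTF_8);
        SecretKey key = keys.get(keyId);
        if (key == null) throw new IllegalStateException("unknown key id: " + keyId);
        try {
            byte[] in = Base64.getDecoder().decode(ciphertext);
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(128, in, 0, IV_BYTES));
            return new String(c.doFinal(in, IV_BYTES, in.length - IV_BYTES), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("decryption failed", e);
        }
    }
}
```

Rotation then means registering a new key version and making it current: new records reference v2, while records written under v1 keep decrypting as long as v1 is retained.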

As you adapt both libraries, a compatibility matrix becomes essential so your users know which combinations are supported.

It's consuming far more time than you expected, but intellectually, you like it! You are indispensable: your company needs you, and you are delivering great business value! So you build a solid test harness, even if it's painful.

OMG, What Have I Done?

Thanks to your responsiveness and quick updates, many teams have started using your library. You're doing your DevRel part and talking about it internally. People are genuinely interested, and excitement is growing.

However, as new teams and partners arrive, they bring along their own language preferences (Go, C#, Rust, Python), their own KMS choices (Vault, Azure, GCP, AWS, Thales), and their own encryption definitions.

You look at your compatibility matrix: it has become a behemoth. You are still receiving tickets about your first versions.

Suddenly, your efficient little library has become an enterprise linchpin, incurring substantial costs. You've probably created technical debt without intending to, and there's no easy way to backtrack.

Everybody is happy, except you

There's no sugarcoating it: you're drowning in an insurmountable workload. You're caught in a relentless storm of tasks, a tempest that shows no signs of abating. The sheer volume is overwhelming, each new task threatening to pull you deeper into the whirlpool of stress and exhaustion.

This isn't just about being busy; it's about facing a fundamentally unsustainable workload. The pressure is relentless and the stakes are high: so much data goes through your library. It's a challenging situation that demands a solution, and fast.

Do you recognize yourself here? We want to talk with you!

It's a tale of good intentions spiraling into a financial sinkhole that is hard to climb out of. But despite the costly journey, the technical solution, your encryption library, is a resounding success, right?

However, the triumph of the library masks a more significant failure: Governance.

Governance? Which Governance?

The autonomy granted to teams to independently implement end-to-end encryption is a double-edged sword. In this freedom, two crucial issues have been overlooked:

KMS access management: secrets, tokens...

Encryption definitions: where is the source of truth? Who owns them?

With your library, every team needs to be equipped to access the KMS (custom access, password, Service Account...), which makes deployments more intricate and integration tests more challenging.

Who knows all the rules now? Where are the encryption definitions stored? Who owns them? Are you sure they are properly shared and used across the whole organization? How do you know? What happens when your CSO wants to add or remove a rule?

In essence, end-to-end encryption is not a solo endeavor: it's a team sport. It requires coordinated effort, shared understanding, common goals, and clear ownership. Taking a technical POC straight to production leads to exactly this kind of disaster, and we have a solution to avoid all of it.

The Best Way: a Seamless Kafka Experience

Conduktor's encryption capabilities are designed to ensure maximum data security in your Kafka ecosystem. They meet all the technical requirements mentioned above, without any of the technical or business downsides.

It supports key encryption standards, simplifying the implementation and management of secure data flows across your enterprise.

We also provide an exceptional governance system, designed to enhance efficiency and eliminate the challenges often associated with data encryption. Conduktor's end-to-end encryption requires no change from your applications: you can transition smoothly into a secure data environment, focusing on what truly matters: leveraging your data to drive your business forward.

Conduktor Gateway end-to-end encryption is a solution:

fully language agnostic (it even works with kafka-console-consumer.sh!)

no problem with KMS governance: only the Gateway knows about it

no problem with secret management

no problem with interoperability

no problem with deployment or integration testing

...

Our solution is totally seamless for developers and applications: nothing to change, anywhere! This is a breeze, whereas anything related to security is usually highly sensitive and slow to set in motion. Not here!

Ops empower Software and Security Engineers

In our approach, we firmly adhere to the principle of role segregation. Each team has its unique focus, enabling seamless and efficient operations, free from overlap and confusion.

Developers hold the reins of their data without worrying about security. No more wrestling with libraries, no more versioning headaches, no more cross-language compatibility issues: they can now fully focus on their core responsibilities.

Security Engineers have a clear mandate: identify, tag, and apply tailored encryption rules. Everything they need to ensure the utmost data security is consolidated in one place, streamlining their tasks and enhancing effectiveness.

Ops Engineers bridge the divide between these two groups. They integrate the developers' and the security team's efforts seamlessly, making the complex machinery of data operations invisible from the outside.

This efficient delineation of roles allows each team to excel in their respective areas, resulting in a well-tuned operation that delivers a secure, efficient, and seamless Kafka experience.

Talking is cheap, show me the code!

The Security team centrally defines which fields are encrypted, how, and with which KMS. Below is an example of encrypting and decrypting the fields password and visa in the topic customers.

They define how these fields should be encrypted (on Request/Produce):
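As a hypothetical illustration only (this is not Conduktor Gateway's configuration format), such a centrally owned rule can be modelled as a topic, the fields to encrypt, and a KMS key reference, applied on the produce path by a component producers never see. EncryptionRule, RuleRegistry and the key reference string are invented for this sketch, and the encryptor is injected.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;

/** Hypothetical rule model: which topic, which fields, which KMS key (not a real config format). */
final class EncryptionRule {
    final String topic;
    final List<String> fields;
    final String kmsKeyRef; // e.g. a key alias in the enterprise KMS (assumption)

    EncryptionRule(String topic, List<String> fields, String kmsKeyRef) {
        this.topic = topic;
        this.fields = List.copyOf(fields);
        this.kmsKeyRef = kmsKeyRef;
    }
}

/** Single place where Security defines rules; applications never see keys or credentials. */
final class RuleRegistry {
    private final Map<String, EncryptionRule> rulesByTopic = new HashMap<>();

    void define(EncryptionRule rule) {
        rulesByTopic.put(rule.topic, rule);
    }

    /** Applies the topic's rule to a produced record; the encryptor gets (keyRef, plaintext). */
    Map<String, String> onProduce(String topic, Map<String, String> record,
                                  BiFunction<String, String, String> encryptor) {
        EncryptionRule rule = rulesByTopic.get(topic);
        if (rule == null) {
            return record; // no rule for this topic: record passes through untouched
        }
        Map<String, String> out = new HashMap<>(record);
        for (String field : rule.fields) {
            String value = out.get(field);
            if (value != null) {
                out.put(field, encryptor.apply(rule.kmsKeyRef, value));
            }
        }
        return out;
    }
}
```

The point of the model is ownership: adding or removing a field is one change to the registry, not a new library release for every team.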

Then, it's time for the Developers to build the applications using their regular tools (Spring Boot, Python, Go, whatever!).

They are not aware of any encryption happening. They don't have to set up anything specific:

No custom serializer

No Java Agent

No custom library

No Kafka interceptor

Nothing special: they don't have a clue, and they don't need one.

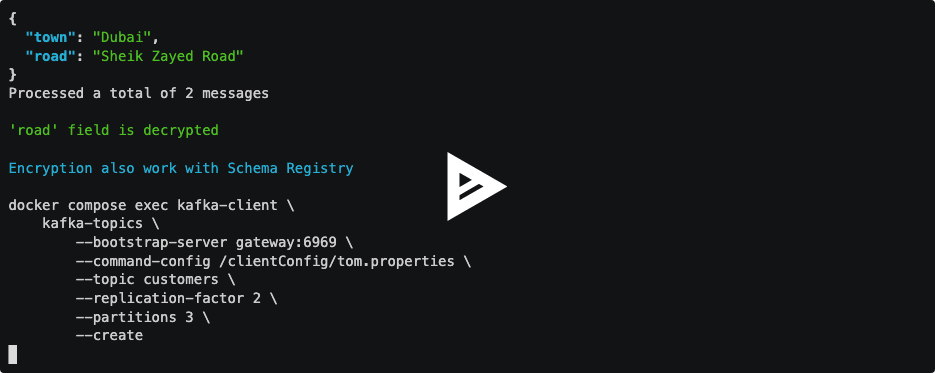

Let's go through some command lines representing what any business application would do (creating topics, producing data, consuming data).

Developers create a topic customers (the one the Security Team defined encryption rules on)

Developers use JSON to produce into the customers topic:

We consume the same topic customers and notice it's encrypted:

The password and visa fields are properly encrypted: magic happened!

The Security Team forgot to specify how to decrypt this data when an application requests it (encryption rules can be entirely separate from decryption rules), so they now define how these fields should be decrypted (on Response/Fetch):
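To make the request/response split concrete, here is a toy in-memory simulation, not Gateway's implementation: encryption rules are applied on Produce before records reach the log, decryption rules are applied on Fetch, and the two rule sets are independent. ToyEncryptingProxy and its methods are hypothetical names, and a single AES-256-GCM key held only by the proxy stands in for the KMS.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.ArrayList;
import java.util.Base64;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import javax.crypto.Cipher;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/**
 * Toy proxy between clients and an in-memory "broker" (topic -> stored records, i.e. data at rest).
 * Encryption rules run on Produce, decryption rules on Fetch; both are illustrative assumptions.
 */
final class ToyEncryptingProxy {
    private static final int IV_BYTES = 12;
    private final Map<String, List<Map<String, String>>> log = new HashMap<>();
    private final Map<String, Set<String>> encryptOnProduce = new HashMap<>();
    private final Map<String, Set<String>> decryptOnFetch = new HashMap<>();
    private final SecureRandom random = new SecureRandom();
    private final SecretKey key; // only the proxy holds key material

    ToyEncryptingProxy(SecretKey key) {
        this.key = key;
    }

    void addEncryptionRule(String topic, Set<String> fields) { encryptOnProduce.put(topic, Set.copyOf(fields)); }

    void addDecryptionRule(String topic, Set<String> fields) { decryptOnFetch.put(topic, Set.copyOf(fields)); }

    /** Produce path: encrypt the configured fields before anything is stored. */
    void produce(String topic, Map<String, String> record) {
        Map<String, String> stored = new HashMap<>(record);
        for (String field : encryptOnProduce.getOrDefault(topic, Set.of())) {
            stored.computeIfPresent(field, (f, v) -> encrypt(v));
        }
        log.computeIfAbsent(topic, t -> new ArrayList<>()).add(stored);
    }

    /** Fetch path: decrypt the configured fields on the way out; the log is never modified. */
    List<Map<String, String>> fetch(String topic) {
        List<Map<String, String>> out = new ArrayList<>();
        for (Map<String, String> stored : log.getOrDefault(topic, List.of())) {
            Map<String, String> copy = new HashMap<>(stored);
            for (String field : decryptOnFetch.getOrDefault(topic, Set.of())) {
                copy.computeIfPresent(field, (f, v) -> decrypt(v));
            }
            out.add(copy);
        }
        return out;
    }

    /** Snapshot of what is physically stored for a topic, for inspection only. */
    List<Map<String, String>> atRest(String topic) {
        return List.copyOf(log.getOrDefault(topic, List.of()));
    }

    private String encrypt(String plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            random.nextBytes(iv);
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(128, iv));
            byte[] ct = c.doFinal(plaintext.getBytes(StandardCharsets.UTF_8));
            byte[] out = new byte[IV_BYTES + ct.length];
            System.arraycopy(iv, 0, out, 0, IV_BYTES);
            System.arraycopy(ct, 0, out, IV_BYTES, ct.length);
            return Base64.getEncoder().encodeToString(out);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("encryption failed", e);
        }
    }

    private String decrypt(String encoded) {
        try {
            byte[] in = Base64.getDecoder().decode(encoded);
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(128, in, 0, IV_BYTES));
            return new String(c.doFinal(in, IV_BYTES, in.length - IV_BYTES), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("decryption failed", e);
        }
    }
}
```

Until a decryption rule exists, fetch returns the ciphertext as stored; adding the rule changes what consumers see without touching producers, consumer code, or the stored data.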

Developers can now verify that the data is decrypted for them, seamlessly:

The password and visa fields are decrypted, and developers didn't change a thing on their side: magic happened!

From an application perspective, the encryption and decryption stages are totally seamless; from a security perspective, the data is now encrypted at rest!

This magic lies within Conduktor Gateway.

Check out this live demo to see more of it:

Conclusion

We have other surprises in store for you regarding Encryption, but this article is already too long! We hope you enjoyed this article and that it gave you some ideas for your Kafka infrastructure. Really, contact us if you want to discuss your use cases. We want to build out-of-the-box and innovative solutions for enterprises using Apache Kafka, so we are very interested in your feedback.

You can download the open-source version of Gateway from our Marketplace and start using our Interceptors or build your own!