Discover the biggest data streaming trends with insights on BYOC, decoupling storage and compute, and Shift Left strategies from Conduktor's fireside chat.

Oct 11, 2024

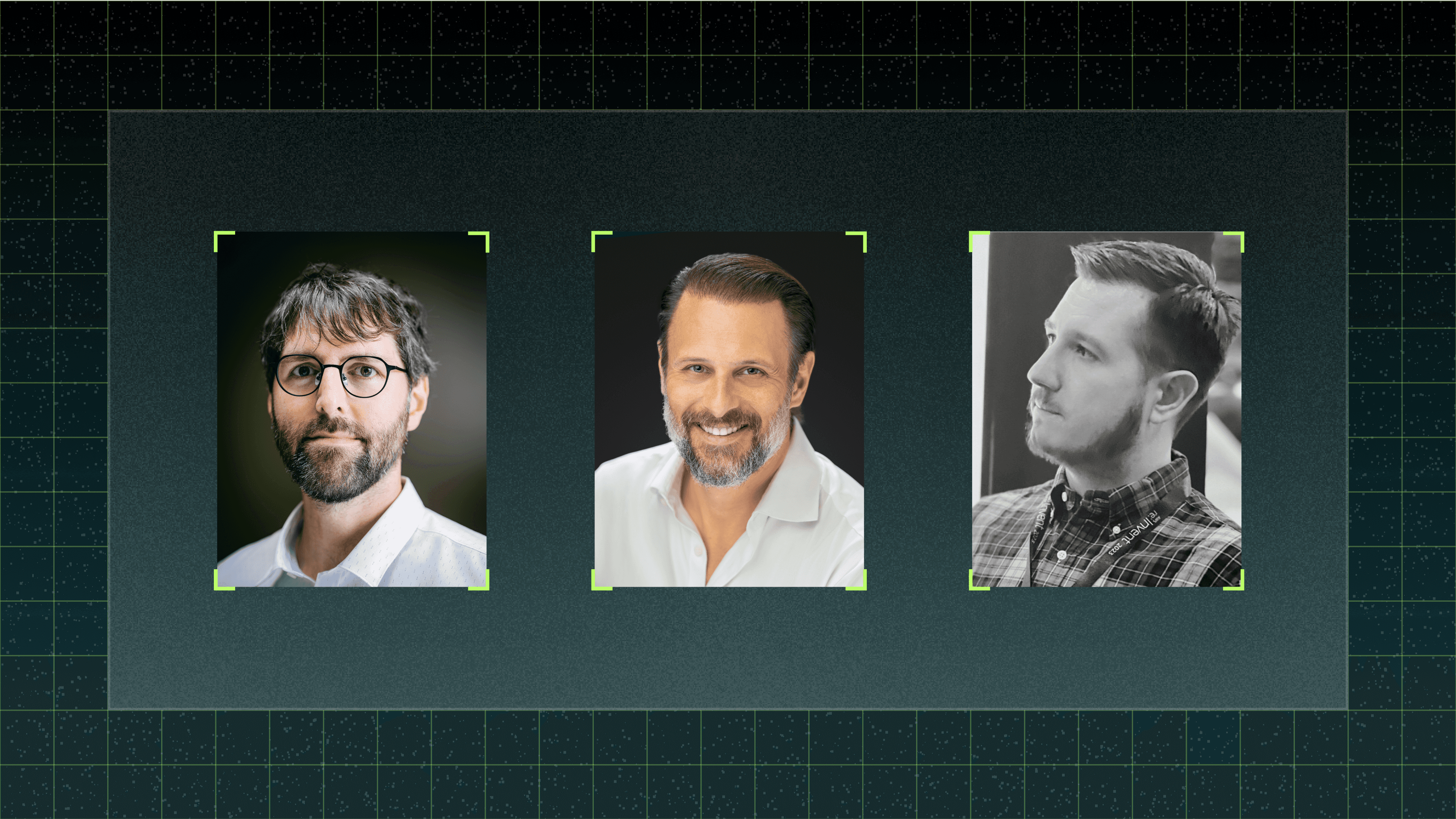

Data streaming is evolving quickly, and businesses that rely on it need to keep pace with the trends shaping the space. Conduktor recently launched the first episode of its Streamline: Conversations in Data series, 'Breaking Down the Biggest Trends in Data Streaming.' Moderated by Quentin Packard, Conduktor’s Vice President of Sales, the discussion featured Philip Yonov, Head of Streaming Data at Aiven, and Stéphane Derosiaux, CTO & Co-Founder of Conduktor, who explored the most significant trends reshaping the data streaming landscape. Here’s what you need to know.

1. Bring Your Own Cloud (BYOC): The Future of Data Infrastructure

Data privacy and cost efficiency are top priorities, and Bring Your Own Cloud (BYOC), a deployment model in which a vendor’s platform runs inside the customer’s own cloud account, is emerging as a key answer to both. Philip and Stéphane emphasized that BYOC lets organizations keep control over their data while taking advantage of the pricing and discounts they already have with their chosen cloud providers. As data privacy concerns intensify, BYOC offers a secure, flexible deployment model.

“Streaming data is premium data, and BYOC allows for cost-effective infrastructure that doesn’t compromise on security,” said Philip. He also noted that BYOC is more than a trend: it is an essential tool for ultra-large infrastructure deployments, where savings plans with cloud providers can significantly reduce costs. Stéphane added that the growing shift toward BYOC is partly driven by the need to protect sensitive company data in the age of AI, when external platforms could potentially use that data to train their models.

Key takeaway: BYOC offers companies greater flexibility and control, making it an ideal solution for organizations with large, sensitive data streams.

2. Decoupling Compute and Storage: Driving Cost Efficiency and Performance

As data volumes keep growing, separating compute from storage has become essential for both cost efficiency and performance. Decoupling lets organizations scale storage without a proportional increase in compute resources, so compute can be sized for the real-time workload rather than for the total volume of retained data.

Stéphane explained, “We keep accumulating massive amounts of data, but traditionally, storage and compute grew together, leading to inefficiencies. By decoupling them, we can store data on platforms like S3 while using lightweight, scalable compute resources only when necessary.”

Philip pointed out that decoupling enables better throughput optimization and cost control, particularly for real-time workloads. It also opens the door to more globally distributed streaming systems with higher availability.
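To make the idea concrete, here is a minimal Python sketch of the pattern Stéphane describes, under simplifying assumptions: an append-only log keeps only its most recent sealed segments on local disk and offloads older ones to an object store, so retained data can grow without adding compute. `ObjectStore` is a hypothetical in-memory stand-in for a service such as S3, and `TieredLog`, `segment_size`, and `max_local_segments` are illustrative names and values, not any product’s API. Production systems (for example, tiered storage in Apache Kafka) also handle durability, caching, and retention, which this toy model ignores.

```python
from dataclasses import dataclass, field


class ObjectStore:
    """Hypothetical in-memory stand-in for an object store such as S3."""

    def __init__(self) -> None:
        self._blobs: dict[str, list[bytes]] = {}

    def put(self, key: str, records: list[bytes]) -> None:
        self._blobs[key] = list(records)

    def get(self, key: str) -> list[bytes]:
        return self._blobs[key]


@dataclass
class TieredLog:
    """Append-only log that keeps recent segments locally and offloads older ones."""

    remote: ObjectStore
    segment_size: int = 3          # records per segment (illustrative value)
    max_local_segments: int = 2    # sealed segments kept on local disk (illustrative)
    local: list[list[bytes]] = field(default_factory=list)   # sealed local segments
    active: list[bytes] = field(default_factory=list)        # segment being written
    remote_keys: list[str] = field(default_factory=list)     # offloaded keys, oldest first

    def append(self, record: bytes) -> None:
        self.active.append(record)
        if len(self.active) == self.segment_size:
            # Seal the full active segment and start a new one.
            self.local.append(self.active)
            self.active = []
            self._offload()

    def _offload(self) -> None:
        # Move the oldest sealed segments to the object store once the local budget is exceeded.
        while len(self.local) > self.max_local_segments:
            segment = self.local.pop(0)
            key = f"segment-{len(self.remote_keys):05d}"
            self.remote.put(key, segment)
            self.remote_keys.append(key)

    def read_all(self) -> list[bytes]:
        # Readers see one continuous log regardless of where each segment lives.
        records: list[bytes] = []
        for key in self.remote_keys:
            records.extend(self.remote.get(key))
        for segment in self.local:
            records.extend(segment)
        records.extend(self.active)
        return records


if __name__ == "__main__":
    log = TieredLog(remote=ObjectStore())
    for i in range(10):
        log.append(f"event-{i}".encode())
    # Offloaded segments, sealed local segments, records in the active segment.
    print(len(log.remote_keys), len(log.local), len(log.active))  # → 1 2 1
```

With these illustrative limits, ten appends leave one segment in the object store, two sealed segments locally, and one record in the active segment, while `read_all` still returns all ten events in order. Keeping the newest segments local reflects the typical streaming access pattern, where most consumers read near the tail of the log.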

Key takeaway: As data volumes continue to grow, decoupling compute and storage is a smart strategy to optimize performance and reduce costs.

3. Shift Left: Enhancing Data Quality and Security in Real-Time Streaming

The conversation shifted (no pun intended) to the popular “Shift Left” concept, which pushes data quality, security, and testing earlier in the development cycle. While the idea isn’t new, its importance in the context of real-time streaming and AI applications has grown significantly.

Philip offered a provocative take: “When I hear ‘Shift Left,’ I think, stop with the marketing! In reality, Kafka is already left: it operates in real-time and interacts with business processes at the microservice level.” He argued that the real opportunity lies in embracing “zero copy” architectures that minimize the movement of data across systems, since moving data remains one of the most expensive operations in cloud environments.

Stéphane echoed the importance of shifting data quality control closer to the source, particularly in real-time environments where errors can propagate rapidly. He emphasized the growing need for tools that help developers manage both data security and data quality without overwhelming them.
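As a rough illustration of moving quality and security checks toward the source, the Python sketch below validates each record against a small contract and masks a sensitive field before the record is handed to a producer, so malformed data is rejected before any downstream consumer can see it. The "orders" contract in `REQUIRED_FIELDS`, the helpers `validate`, `mask_email`, and `produce_checked`, and the `send` callback (a stand-in for a real producer client) are all hypothetical; in practice, teams often enforce this kind of contract with schemas and a schema registry rather than hand-written checks.

```python
from typing import Any, Callable

# Hypothetical contract for an "orders" stream: field name -> accepted types.
REQUIRED_FIELDS: dict[str, tuple[type, ...]] = {
    "order_id": (str,),
    "amount": (int, float),
    "currency": (str,),
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the record may be produced."""
    errors: list[str] = []
    for name, types in REQUIRED_FIELDS.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(record[name], bool) or not isinstance(record[name], types):
            errors.append(f"wrong type for {name}: {type(record[name]).__name__}")
    if errors:
        return errors  # skip value checks when the shape is already wrong
    if record["amount"] < 0:
        errors.append("amount must be non-negative")
    if len(record["currency"]) != 3 or not record["currency"].isupper():
        errors.append("currency must be a 3-letter uppercase code")
    return errors


def mask_email(record: dict[str, Any]) -> dict[str, Any]:
    """Mask the local part of an optional 'email' field before it leaves the service."""
    email = record.get("email")
    if not isinstance(email, str) or "@" not in email:
        return record
    user, _, domain = email.partition("@")
    return {**record, "email": f"{user[:1]}***@{domain}"}


def produce_checked(
    records: list[dict[str, Any]],
    send: Callable[[dict[str, Any]], None],
) -> list[tuple[dict[str, Any], list[str]]]:
    """Send valid, masked records; return rejected records with their errors."""
    rejected: list[tuple[dict[str, Any], list[str]]] = []
    for record in records:
        errors = validate(record)
        if errors:
            rejected.append((record, errors))  # never reaches the stream
        else:
            send(mask_email(record))
    return rejected


if __name__ == "__main__":
    sent: list[dict[str, Any]] = []
    rejected = produce_checked(
        [
            {"order_id": "A1", "amount": 19.99, "currency": "EUR", "email": "jane@example.com"},
            {"order_id": "A2", "amount": -5, "currency": "EUR"},
            {"order_id": "A3", "currency": "usd"},
        ],
        send=sent.append,
    )
    print(sent)
    print(rejected)
```

Run as a script, only the first record is sent, with its email masked to `j***@example.com`; `A2` is rejected for its negative amount and `A3` for its missing `amount` field. Because the check runs where the data is created, a bad record is stopped once instead of being cleaned up by every consumer downstream.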

Key takeaway: Shift Left isn’t just a buzzword. It’s about empowering organizations to improve data quality and security earlier in the process, minimizing errors and boosting performance in real-time streaming systems.

What’s Next for Data Streaming?

As the fireside chat wrapped up, the conversation naturally gravitated toward the future of data streaming and the role of AI in transforming real-time data management. One of the most exciting developments highlighted was the potential for integrating large language models (LLMs) directly into streaming architectures.

Stéphane shared his vision: “Imagine plugging LLMs directly into your stream processing. As costs decrease and latency improves, it’s only a matter of time before we see AI-driven insights embedded within real-time streaming systems.”

Key takeaway: The future of data streaming lies in the intersection of AI and real-time processing, with emerging technologies like LLMs poised to revolutionize how we manage and derive insights from streaming data.

Conclusion

The trends discussed during the fireside chat—BYOC, decoupling compute and storage, and Shift Left—are shaping the future of data streaming. With AI and advanced processing techniques on the horizon, organizations that adopt these practices today will be well-positioned to leverage real-time data streams for competitive advantage.