The Real Problems with Apache Kafka: 10,000 Forum Posts Analyzed

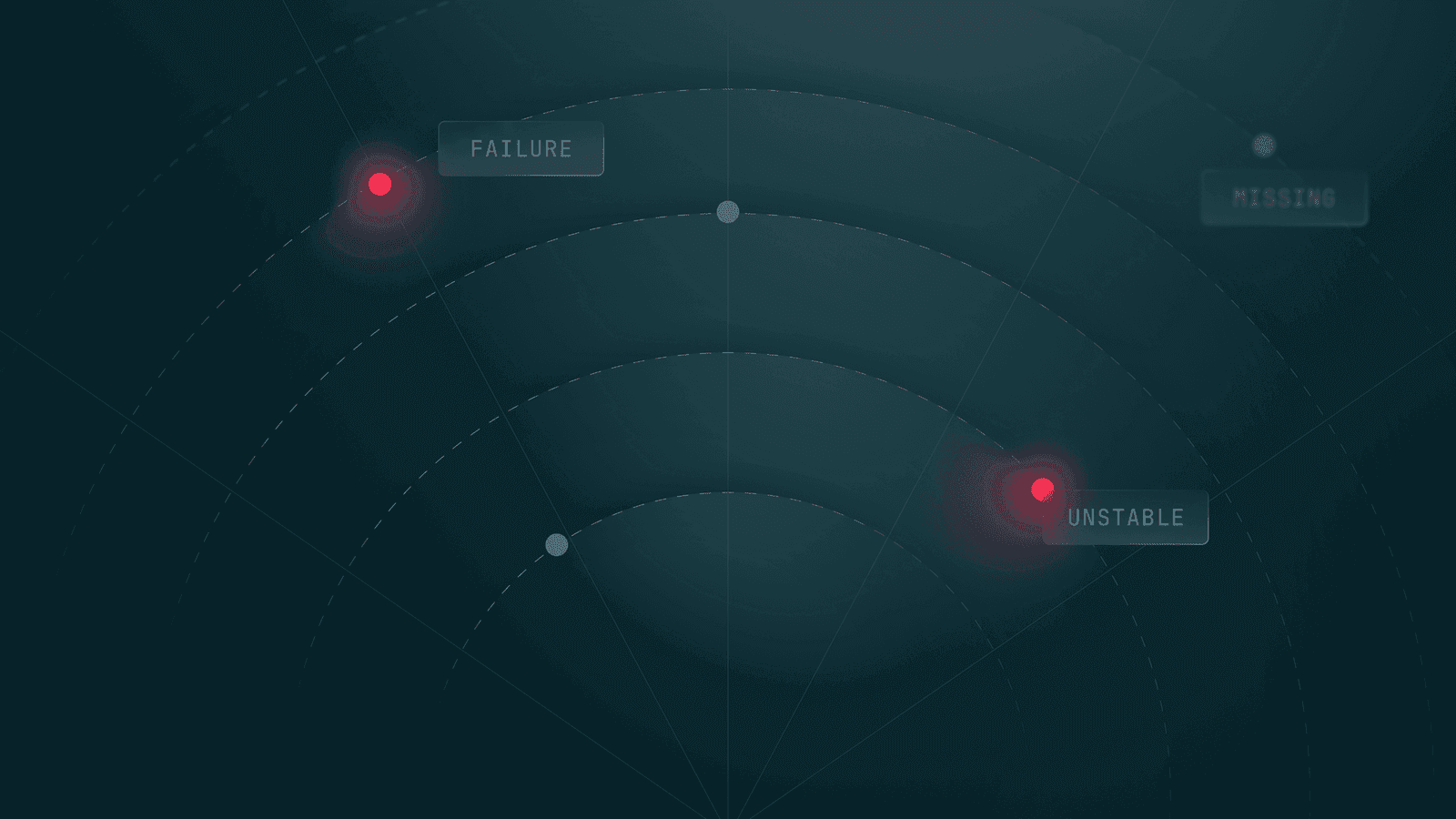

10,000+ Kafka forum posts reveal the truth: connector crashes, auth nightmares, schema failures, and cryptic errors plague production deployments.

Vendor marketing paints Kafka as effortless real-time data streaming. Conference talks showcase impressive use cases at massive scale.

But what happens when you actually try to run Kafka in production?

I analyzed thousands of posts on the Confluent Community Forum, the largest gathering place for Kafka practitioners. The pattern is clear: most organizations struggle with the same problems repeatedly.

This isn't a critique of Kafka. It's an honest look at the operational reality that rarely makes it into case studies. From authentication nightmares to connector failures, from configuration complexity to mysterious performance issues, real teams wrestle with these challenges every day.

1. Kafka Connect Causes the Most Pain

With 831 forum threads, this is the most problematic area:

- Configuration Complexity: Connector setup is hard, especially for custom transforms and sink/source configurations

- Connector Failures: HTTP sink, JDBC sink, Elasticsearch sink, Lambda sink, and Debezium connectors fail frequently

- Authentication Problems: SASL/SSL configuration for Debezium's internal schema history topic and for connector authentication breaks constantly

- Plugin Management: Loading custom transforms and managing connector plugins fails with unhelpful errors

- Cryptic Error Messages: "Unable to manage topics" and "Class not found" appear without clear resolution paths

- Distributed Mode Struggles: Running Kafka Connect as distributed services in containers is fragile
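Many of these failures only surface after a connector has been submitted. A cheap guard is to lint the connector config before posting it to the Connect REST API. The sketch below assumes the config is already loaded as a flat string map. The keys it checks (`name`, `connector.class`, `tasks.max`, `topics`/`topics.regex`, `transforms.<alias>.type`) are standard Kafka Connect settings, but `check_connector_config` and its rule set are hypothetical and far from complete.

```python
from typing import Dict, List

# Standard Kafka Connect keys that every connector config needs.
REQUIRED_KEYS = ("name", "connector.class", "tasks.max")


def check_connector_config(config: Dict[str, str], is_sink: bool) -> List[str]:
    """Hypothetical pre-flight lint: return problems found before submitting."""
    problems = []
    for key in REQUIRED_KEYS:
        if not config.get(key):
            problems.append(f"missing required key '{key}'")

    tasks = config.get("tasks.max")
    if tasks and (not tasks.isdigit() or int(tasks) < 1):
        problems.append(f"'tasks.max' must be a positive integer, got {tasks!r}")

    if is_sink:
        # Sink connectors subscribe via exactly one of 'topics' or 'topics.regex'.
        if bool(config.get("topics")) == bool(config.get("topics.regex")):
            problems.append("sink connectors need exactly one of 'topics' or 'topics.regex'")

    # Every alias listed in 'transforms' needs a 'transforms.<alias>.type' class.
    aliases = [a.strip() for a in config.get("transforms", "").split(",") if a.strip()]
    for alias in aliases:
        if not config.get(f"transforms.{alias}.type"):
            problems.append(f"transform '{alias}' has no 'transforms.{alias}.type'")
    return problems


# Example: a JDBC sink missing its topic subscription and a transform class.
cfg = {
    "name": "orders-jdbc-sink",
    "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
    "tasks.max": "2",
    "transforms": "route",
}
for problem in check_connector_config(cfg, is_sink=True):
    print(problem)
```

A lint like this will not catch a missing plugin on the worker's `plugin.path`, but it turns two of the most common typos into readable messages instead of runtime failures.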

2. Schema Registry Fails at the Worst Times

181 forum threads describe recurring issues:

- Startup Failures: "Schema Registry failed to start" with timeout exceptions

- Schema Not Found: Missing schemas crash applications, especially after pod restarts

- Registration Errors: 42201 (invalid schema) errors and schema incompatibility (409) errors block deployments

- Certificate/SSL Issues: Bad certificate errors when producers try to register schemas

- Forwarding Errors: Multi-replica Schema Registry setups fail silently

- Integration Challenges: Connecting Schema Registry with different clients requires tribal knowledge
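Schema Registry's REST API returns a numeric `error_code` alongside the HTTP status, and decoding it is often the fastest way past the cryptic messages. Here is a small lookup, assuming the response body is JSON with `error_code` and `message` fields. The codes are documented Schema Registry error codes; the one-line hints and the `explain` helper are illustrative assumptions, not official troubleshooting guidance.

```python
import json
from typing import Dict, Tuple

# Documented Schema Registry error codes; the hints are illustrative assumptions.
SCHEMA_REGISTRY_ERRORS: Dict[int, Tuple[str, str]] = {
    40401: ("Subject not found", "check the subject naming strategy and whether the producer registered it"),
    40403: ("Schema not found", "the schema ID may belong to another registry or a lost _schemas topic"),
    409: ("Incompatible schema", "compare the change against the subject's compatibility level"),
    42201: ("Invalid schema", "the schema failed to parse; validate its syntax"),
    50003: ("Error while forwarding the request to the primary",
            "check that instances can reach each other at their advertised host names"),
}


def explain(response_body: str) -> str:
    """Turn a Schema Registry JSON error body into a one-line diagnosis."""
    payload = json.loads(response_body)
    code = payload.get("error_code")
    meaning, hint = SCHEMA_REGISTRY_ERRORS.get(code, ("Unknown error", "read the 'message' field"))
    return f"{code} {meaning}: {hint} (server message: {payload.get('message', '')!r})"


print(explain('{"error_code": 42201, "message": "Invalid schema"}'))
```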

3. Production Latency Spikes Without Warning

Critical problems for production systems:

- High Latency: Users report 5 to 17 second latencies in Kafka Streams applications

- Producer Performance: Intermittent 5 to 10 second delays in producer operations

- Consumer Lag: Persistent lag issues, especially in CDC pipelines (45+ minute lags reported)

- Foreign Key Joins: KTable foreign key joins generate millions of internal records, killing performance

- Throughput Drops: Sudden performance drops during broker replacements or rebalancing
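Persistent lag is usually simple arithmetic: a consumer only catches up when it consumes faster than producers write, and the backlog drains at the difference between the two rates. A minimal sketch, assuming constant rates in records per second (real traffic is burstier). The numbers in the example are illustrative, not taken from the forum posts.

```python
import math


def drain_time_seconds(lag_records: int, produce_rate: float, consume_rate: float) -> float:
    """Seconds until the backlog clears, assuming constant rates in records/second."""
    if lag_records <= 0:
        return 0.0
    headroom = consume_rate - produce_rate  # net records removed from the backlog per second
    if headroom <= 0:
        return math.inf  # the consumer never catches up; lag stays flat or grows
    return lag_records / headroom


# Illustrative numbers: 2.7M records behind, consuming at only 2x the produce rate.
print(drain_time_seconds(2_700_000, produce_rate=1_000, consume_rate=2_000) / 60)  # 45.0
```

A consumer running at exactly the producer's rate never recovers, which is one way a temporary hiccup turns into lag that persists for hours.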

4. Cluster Operations Break in Predictable Ways

Cluster Stability:

- Broker failures after reboots due to cluster ID mismatches

- Under-replicated partitions (URPs) persistently appearing

- Split segment errors requiring manual log clearing

- New brokers not properly joining existing clusters

ZooKeeper Issues:

- Cluster ID regeneration after VM restarts

- Synchronization problems between ZooKeeper and Kafka

KRaft Migration:

- ACL configuration problems in KRaft mode

- Authentication failures during KRaft setup

- Complex migration from ZooKeeper to KRaft
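On ZooKeeper-based clusters, every broker log directory holds a `meta.properties` file recording the `cluster.id` the broker belongs to. If ZooKeeper's data is lost (for example, a `dataDir` under `/tmp` wiped by a VM restart), a new ID is generated and brokers refuse to start with an `InconsistentClusterIdException`. Comparing the stored IDs against the one you expect (ZooKeeper's `/cluster/id` znode, or in KRaft the ID used with `kafka-storage.sh format`) confirms the diagnosis quickly. The sketch assumes you have already read each file's text; the function names are hypothetical, and the parser only handles plain `key=value` lines.

```python
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal parser for simple key=value lines (no escapes or line continuations)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):  # skip blanks and comments
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def find_cluster_id_mismatches(meta_files: Dict[str, str], expected_id: str) -> List[str]:
    """meta_files maps each log directory to the text of its meta.properties."""
    problems = []
    for log_dir, text in sorted(meta_files.items()):
        stored = parse_properties(text).get("cluster.id")
        if stored is None:
            problems.append(f"{log_dir}: no cluster.id in meta.properties")
        elif stored != expected_id:
            problems.append(f"{log_dir}: stored cluster.id {stored} != expected {expected_id}")
    return problems


# Hypothetical IDs: the broker still remembers the cluster it originally joined.
meta_files = {"/var/lib/kafka/data": "#Written by the broker\nversion=0\nbroker.id=1\ncluster.id=abc123"}
for problem in find_cluster_id_mismatches(meta_files, expected_id="xyz789"):
    print(problem)
```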

5. Security Configuration is Trial and Error

Authentication problems are extremely frustrating:

- SASL/SSL Configuration: Complex multi-step setup with frequent failures

- ACL Problems: "No Authorizer configured" errors in KRaft mode

- User:ANONYMOUS Issues: Unexpected anonymous user authentication attempts

- Certificate Chains: SSL handshake failures requiring certificate chain verification

- Mechanism Mismatches: SCRAM-SHA-256 not enabled when expected

- Mixed Configurations: Managing different security protocols across listeners requires guesswork

Security configuration provides minimal helpful error messages. Most teams iterate blindly until something works.
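Several of these failures are mismatches that can be caught statically: a client asking for SCRAM-SHA-256 against a listener that only enables PLAIN, or a JAAS line naming the wrong login module. This sketch cross-checks a client config against the mechanisms the broker listener enables via `sasl.enabled.mechanisms`. The property names and JAAS module classes are real Kafka ones; `check_client_security` and its rules are a partial, hypothetical lint, not a substitute for reading the broker logs.

```python
from typing import Dict, List, Set

# Login module each SASL mechanism expects in sasl.jaas.config.
JAAS_MODULES = {
    "PLAIN": "org.apache.kafka.common.security.plain.PlainLoginModule",
    "SCRAM-SHA-256": "org.apache.kafka.common.security.scram.ScramLoginModule",
    "SCRAM-SHA-512": "org.apache.kafka.common.security.scram.ScramLoginModule",
}


def check_client_security(client: Dict[str, str], broker_mechanisms: Set[str]) -> List[str]:
    """Hypothetical lint: compare client SASL settings with the broker's enabled mechanisms."""
    problems = []
    protocol = client.get("security.protocol", "PLAINTEXT")  # client default
    if protocol in ("SASL_SSL", "SASL_PLAINTEXT"):
        mechanism = client.get("sasl.mechanism", "GSSAPI")  # client default
        if mechanism not in broker_mechanisms:
            problems.append(f"broker does not enable {mechanism} (check sasl.enabled.mechanisms)")
        module = JAAS_MODULES.get(mechanism)
        if module and module not in client.get("sasl.jaas.config", ""):
            problems.append(f"sasl.jaas.config should use {module} for {mechanism}")
    elif "sasl.mechanism" in client:
        # SASL settings only take effect with a SASL_* security.protocol.
        problems.append(f"sasl.mechanism is ignored because security.protocol={protocol}")
    return problems


client = {
    "security.protocol": "SASL_SSL",
    "sasl.mechanism": "SCRAM-SHA-256",
    "sasl.jaas.config": 'org.apache.kafka.common.security.plain.PlainLoginModule '
                        'required username="app" password="secret";',
}
for problem in check_client_security(client, broker_mechanisms={"PLAIN"}):
    print(problem)
```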

6. Consumer Groups Rebalance Constantly

- Frequent Rebalancing: Consumer groups rebalancing too often, causing disruptions

- Offset Reset Challenges: Unable to reset offsets for specific partitions

- Commit Failures: Offset commits failing with "group has already rebalanced" errors

- Uneven Distribution: Partitions distributed unevenly after consumer restarts

- Manual Offset Control: Controlling offset commits manually creates race conditions
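The "group has already rebalanced" commit failure usually means one `poll()` batch took longer than `max.poll.interval.ms` to process, so the consumer was dropped from the group and its partitions were reassigned. A back-of-the-envelope check, assuming you can estimate the worst-case processing time per record: the defaults used here (`max.poll.records` = 500, `max.poll.interval.ms` = 300000) are Kafka's consumer defaults, while the 50% `safety_factor` is an arbitrary headroom choice.

```python
import math

# Kafka consumer defaults.
DEFAULT_MAX_POLL_RECORDS = 500
DEFAULT_MAX_POLL_INTERVAL_MS = 300_000


def poll_budget_ok(worst_case_ms_per_record: float,
                   max_poll_records: int = DEFAULT_MAX_POLL_RECORDS,
                   max_poll_interval_ms: int = DEFAULT_MAX_POLL_INTERVAL_MS,
                   safety_factor: float = 0.5) -> bool:
    """True if processing a full poll() batch stays within a fraction of the limit."""
    batch_ms = worst_case_ms_per_record * max_poll_records
    return batch_ms <= max_poll_interval_ms * safety_factor


def max_safe_poll_records(worst_case_ms_per_record: float,
                          max_poll_interval_ms: int = DEFAULT_MAX_POLL_INTERVAL_MS,
                          safety_factor: float = 0.5) -> int:
    """Largest max.poll.records that keeps a batch within the same budget (at least 1)."""
    budget_ms = max_poll_interval_ms * safety_factor
    return max(1, math.floor(budget_ms / worst_case_ms_per_record))


# A sink making a 1 s HTTP call per record: 500 records take about 500 s, over the 300 s limit.
print(poll_budget_ok(1_000))          # False
print(max_safe_poll_records(1_000))   # 150
```

Lowering `max.poll.records` and raising `max.poll.interval.ms` both fix the symptom; the first keeps stuck-consumer detection fast, while the second delays it.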

7. Docker and Kubernetes Make Everything Harder

Containerization multiplies complexity:

- Network Configuration: Connection refused errors within Docker Compose

- Volume Mounting: Confusion about correct directories to mount

- Resource Permissions: User ID restrictions in OpenShift

- Image Registry: Failed to fetch images from Azure Container Registry

- Cluster ID Generation: Can't generate cluster IDs before containers start

- Storage Types: Block storage limitations with StatefulSets

- Helm Chart Confusion: Deprecated Helm charts and unclear migration paths to Confluent for Kubernetes (CFK)
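KRaft storage has to be formatted with a cluster ID before a node first starts, which is awkward when containers are created on demand. The ID is a random 16-byte UUID rendered as URL-safe Base64 without padding (22 characters), the same shape `kafka-storage.sh random-uuid` prints, so it can be generated ahead of time and injected into every node. A minimal sketch; how you pass it in (`kafka-storage.sh format -t <id>`, or an environment variable your image may read) depends on your image, so check its documentation.

```python
import base64
import uuid


def generate_kraft_cluster_id() -> str:
    """Random type-4 UUID as URL-safe Base64 without padding (22 characters)."""
    while True:
        raw = uuid.uuid4().bytes
        cluster_id = base64.urlsafe_b64encode(raw).decode("ascii").rstrip("=")
        # Avoid IDs starting with '-', which a CLI could mistake for a flag.
        if not cluster_id.startswith("-"):
            return cluster_id


if __name__ == "__main__":
    print(generate_kraft_cluster_id())
```

Generate the ID once (for example in a CI step or a Kubernetes Secret) and share it across all nodes; every node in a cluster must be formatted with the same value.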

8. ksqlDB Query Limitations Surprise Users

- Pull Query Restrictions: Can't use GROUP BY in pull queries

- Error Messages: Unclear explanations of query limitations

- Schema Compatibility: Schema incompatibility when creating multiple tables on same topic

- Windowing Requirements: Unexpected requirements for GROUP BY with windowing

- Startup Failures: Connection errors and configuration issues

9. Confluent Cloud Billing and Integration Gaps

- Cost Visibility: Tags not appearing in billing CSVs or the billing API

- Cost Forecasting: Estimating costs before implementation is nearly impossible

- Licensing Confusion: Unclear how self-managed connector licenses work with Cloud

- Marketplace Limitations: Can't provision through Azure Marketplace with CSP accounts

- Monitoring Integration: Exporting metrics to Prometheus, ELK, or CloudWatch requires workarounds

- CLI Issues: Backend errors with API key creation commands

10. Observability Requires Custom Solutions

- Metrics Export: Getting metrics into Prometheus, Grafana, or Datadog is hard

- Consumer Lag: Not exposed as a direct broker metric, so it requires special handling

- JMX Access: JMX monitoring without Docker is underdocumented

- Log File Locations: Finding logs in containers requires archaeology

- Alert Configuration: Under-replicated partition alerts trigger too often
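Lag is derived rather than reported: for each partition, it is the latest offset minus the group's committed offset. In the Java Admin client those inputs come from `listOffsets` (with `OffsetSpec.latest()`) and `listConsumerGroupOffsets`. The sketch below assumes you already have both as plain dictionaries keyed by partition number, and reports partitions with no commit separately rather than guessing a value.

```python
from typing import Dict, List, Optional, Tuple


def consumer_lag(latest: Dict[int, int],
                 committed: Dict[int, Optional[int]]) -> Tuple[Dict[int, int], List[int]]:
    """Per-partition lag = latest offset - committed offset.

    Partitions with no committed offset are returned separately, because their
    effective start position depends on auto.offset.reset.
    """
    lags: Dict[int, int] = {}
    uncommitted: List[int] = []
    for partition, end_offset in sorted(latest.items()):
        position = committed.get(partition)
        if position is None:
            uncommitted.append(partition)
        else:
            # Clamp at 0: offsets read at different moments can make the commit look ahead.
            lags[partition] = max(0, end_offset - position)
    return lags, uncommitted


lags, uncommitted = consumer_lag({0: 1_200, 1: 800, 2: 50}, {0: 1_000, 1: 800})
print(lags, sum(lags.values()), uncommitted)  # {0: 200, 1: 0} 200 [2]
```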

11. Upgrades Break Things

- Version Compatibility: Which client versions work with which broker versions is unclear

- Direct Upgrades: Uncertainty about skipping intermediate versions

- Breaking Changes: NoClassDefFoundError after upgrading clients

- Migration Tools: MirrorMaker 2.0 not copying data properly

- Schema Versioning: Reverting to older schema versions fails unexpectedly

- SSL Certificate Changes: Migration breaks SSL configurations

12. Disaster Recovery is Poorly Understood

- Replication Issues: Topics becoming under-replicated

- Failover Complexity: What happens during Cluster Linking failover is unclear

- MirrorMaker Challenges: Data deletion during switchover in active-passive setups

- Persistence Concerns: Guaranteeing no data loss in producer buffer requires deep knowledge

- /tmp Directory: Data loss risk in KRaft deployments whose log directories sit under /tmp (as in the sample configs) when /tmp is cleared

- Backup Strategies: Best practices for backups and recovery are not well documented
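Most of these data-loss scenarios come down to a handful of settings that can be audited. A hedged sketch: it flags `acks` other than `all` (the producer default since Kafka 3.0), a replication factor under the common guideline of 3, a `min.insync.replicas` that is either too low to matter or too high to survive one broker outage, and log directories under `/tmp`. It cannot see records still sitting in the producer's in-memory buffer; those are lost if the process dies, so applications still need to check send results and call `flush()` before exiting. The function name and thresholds are assumptions.

```python
from typing import List


def durability_risks(acks: str, replication_factor: int,
                     min_insync_replicas: int, log_dirs: str) -> List[str]:
    """Flag settings that commonly lead to acknowledged-but-lost data (illustrative)."""
    risks = []
    if acks not in ("all", "-1"):
        risks.append(f"acks={acks}: the producer does not wait for all in-sync replicas")
    if replication_factor < 3:
        risks.append(f"replication factor {replication_factor} is below the common guideline of 3")
    if min_insync_replicas < 2:
        risks.append("min.insync.replicas < 2: acks=all can be satisfied by a single replica")
    elif min_insync_replicas >= replication_factor:
        risks.append("min.insync.replicas >= replication factor: one broker outage blocks acks=all writes")
    # log.dirs is a comma-separated list of directories.
    for directory in (d.strip() for d in log_dirs.split(",")):
        if directory == "/tmp" or directory.startswith("/tmp/"):
            risks.append(f"log directory {directory} is under /tmp and may be wiped on reboot")
    return risks


for risk in durability_risks("1", replication_factor=3, min_insync_replicas=1,
                             log_dirs="/tmp/kraft-combined-logs"):
    print(risk)
```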

13. Documentation Lags Behind Reality

- Outdated Tutorials: Commands in tutorials don't match current documentation

- Complex Configurations: Understanding interconnected configuration parameters requires trial and error

- Missing Examples: Complete, working examples for complex scenarios are rare

- Lab Environment Access: New users can't find lab environments mentioned in courses

- Non-Java Clients: Python, .NET, and Node.js clients have limited documentation

- Error Interpretation: Cryptic error messages lack clear resolution paths

Configuration Complexity is the Root Cause

The overwhelming majority of issues trace back to one root cause: configuration complexity. Whether it's Kafka Connect, Schema Registry, security, or basic broker setup, users are drowning in interdependent parameters with minimal validation and cryptic error messages. This isn't a few edge cases; it's the fundamental experience for most teams.

These pain points represent a massive opportunity for the ecosystem:

- Governance platforms that provide guardrails and validation before runtime failures

- Management tools that make complex configurations visual and testable

- Observability solutions that explain why things are failing, not just that they failed

- Education platforms that close the gap between documentation and reality

- Abstraction layers that handle the complexity so teams can focus on business value

This is exactly what conduktor.io provides.