Why Every Streaming Vendor Is Building a Kafka Proxy

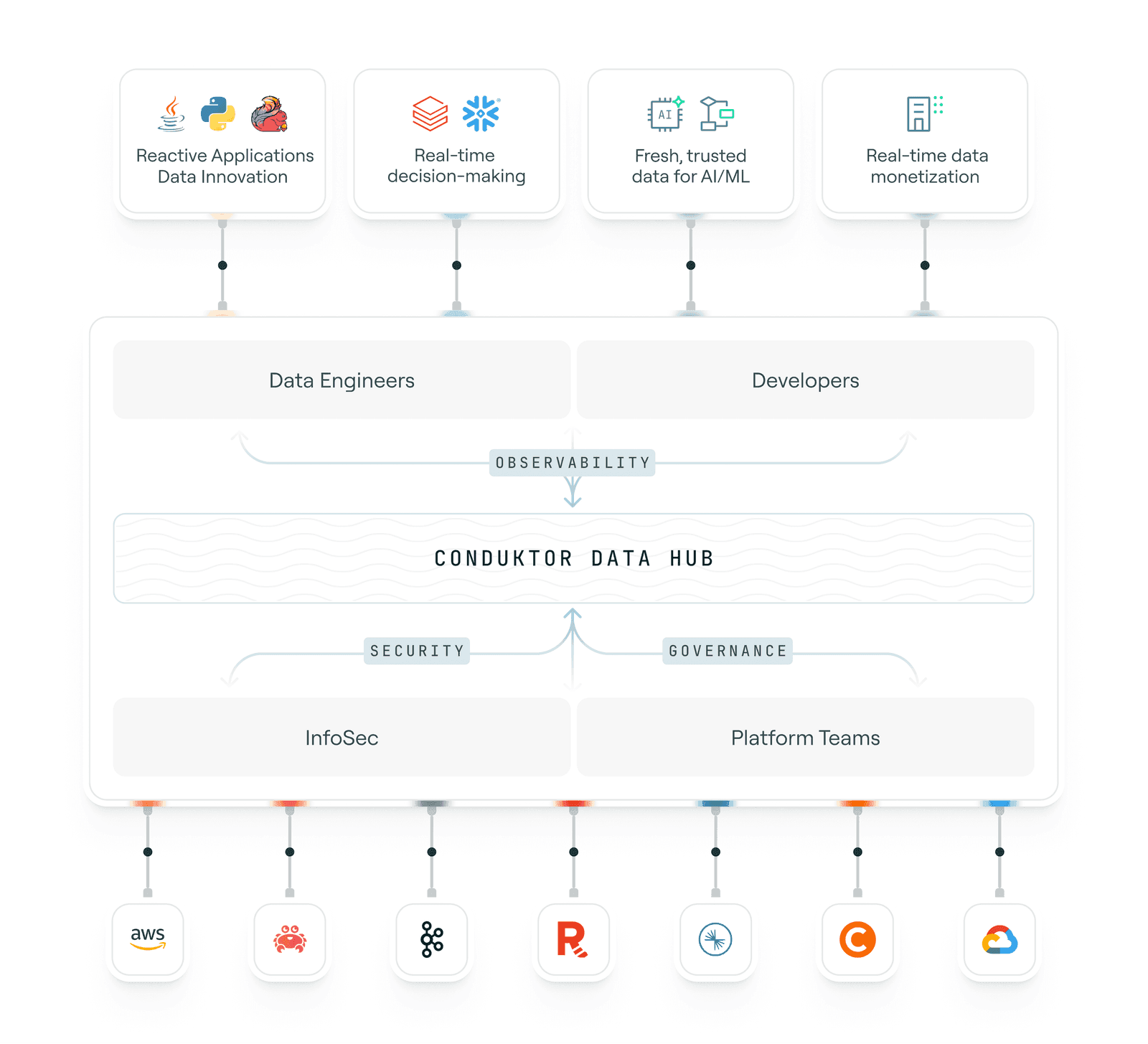

Streaming proxies enable governed self-service and AI-ready data at scale. Why Kafka control planes from Confluent, Kong, and Conduktor are converging.

At Current 2025 in New Orleans, Confluent announced ML functions and streaming agents for Flink, incremental materialized views for AI agent context, Confluent Private Cloud for on-premises requirements, and a Kafka proxy.

The Conduktor team at our Current booth.

The proxy pattern is spreading across the streaming ecosystem. For years, streaming vendors competed on performance. Now they're building the same thing: a control plane on top of streaming. Confluent announced its gateway, a transparent proxy layer for cross-cluster routing based on Kroxylicious, the CNCF Kafka proxy from Red Hat. Kong and Gravitee entered the space recently.

Everyone is shipping a proxy.

When we launched the first enterprise-grade proxy for Kafka in 2022, the idea was radical. We built it to protect Kafka from bad practices and govern heterogeneous ecosystems at scale, providing the first dedicated control plane for streaming.

In 2022, the objections came fast: "Single point of failure!" "Latency!" Now that same idea has become the new architectural layer for data streaming: not a nice-to-have, but an integration layer on top of the streaming infrastructure.

The industry has realized that governance, not infrastructure, is the bottleneck (especially with AI models that need all the data). The proxy is an architectural control point that removes this bottleneck.

Three Problems Streaming Organizations Face

- Governance vs velocity: How do you maintain control without introducing a bottleneck?

- Compliance vs delivery speed: Why does security still mean slowing everything down?

- Visibility vs cost: Who's using what, and what is it actually worth?

These aren't new problems. What's new is that the needs have multiplied: not just in data volume, but in pattern complexity, heterogeneity of infrastructure, and scale across regions.

Streaming is mainstream, well understood, and easy to get quick value from (though "quick" does not mean "good"). There is a growing need for automation: pattern detection, policy enforcement, and adaptive governance. Fewer humans in the loop.

We escaped the analytics silos only to stumble into streaming silos: multiple clusters, cloud boundaries, lack of infrastructure flexibility. Streaming at scale demands a unified control plane. Enterprises are discovering they need it.

Why Traditional Governance Fails Streaming Teams

Governance was designed to protect us and our data. Somewhere along the way, it became an anchor holding teams back rather than an enabler helping them get things done. The results: unused tooling piling up, lack of coordination, and lack of "governance culture."

Ask yourself: do your application teams actually use Collibra, or is it just your isolated governance team?

The proxy introduces a connective layer between heterogeneous systems, decoupling control from infrastructure. Because every request passes through it, governance gaps can be detected at that layer, and the rules that close them can be expressed as code.
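To make the idea of governance expressed as code concrete, here is a minimal sketch of a rule that a proxy-side check might run against a topic-creation request. Everything in it is an assumption for illustration: the `TopicRequest` shape, the limits, and the naming convention are hypothetical and do not describe Conduktor's or any other vendor's actual API.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical limits; real values are an organizational decision.
MAX_PARTITIONS = 12
MIN_REPLICATION = 3
# Hypothetical naming convention: <team>.<domain>.<event>
NAME_PATTERN = re.compile(r"^[a-z]+\.[a-z0-9-]+\.[a-z0-9-]+$")


@dataclass(frozen=True)
class TopicRequest:
    """Illustrative stand-in for a topic-creation request seen by the proxy."""
    name: str
    partitions: int
    replication_factor: int


def violations(req: TopicRequest) -> list[str]:
    """Return readable policy violations; an empty list means the request passes."""
    found = []
    if not NAME_PATTERN.match(req.name):
        found.append(f"name '{req.name}' does not match <team>.<domain>.<event>")
    if req.partitions > MAX_PARTITIONS:
        found.append(f"{req.partitions} partitions exceeds the limit of {MAX_PARTITIONS}")
    if req.replication_factor < MIN_REPLICATION:
        found.append(f"replication factor {req.replication_factor} is below {MIN_REPLICATION}")
    return found
```

A request such as `TopicRequest("payments.orders.created", 6, 3)` passes with no violations, while `TopicRequest("Test_Topic", 200, 1)` is rejected on all three rules. The point of the sketch is that the rule lives in version control and is enforced automatically, rather than sitting in a wiki page that application teams never read.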

When we built Conduktor's proxy in 2022, it wasn't born from theory. We built it to cover a large gap in the market. We watched everyone struggle to make Kafka usable and safe at scale without an army of professional services to fine-tune it and educate teams. It had become acceptable to move fast, break things, and keep applying patch after patch while everyone kept attacking Kafka.

The proxy changed that. Sitting between apps and clusters, it became the single enforcement layer: managing access, encryption, auditing, and masking without touching producers or consumers. The goal was simple: keep Kafka open, but make it governable. What started as a technical conversation ("single point of failure", "latency") evolved into business conversations: a way to enable and spread streaming faster.
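As a rough illustration of what a single enforcement layer between apps and clusters means, the sketch below simulates a proxy handling a consumer fetch: it checks access, records an audit entry, and masks sensitive fields before records reach the client. The ACL, the masking table, and the `fetch` function are hypothetical names invented for this example, and encryption is left out to keep it short. It does not use a real Kafka client or any vendor's interceptor API.

```python
from __future__ import annotations

# Hypothetical policy tables, keyed by client principal.
READ_ACL = {"fraud-service": {"payments"}, "analytics": {"payments"}}
MASKED_FIELDS = {"analytics": {"card_number"}}

# In-memory audit trail; a real deployment would ship this elsewhere.
AUDIT_LOG: list[dict] = []


def authorize(principal: str, topic: str) -> bool:
    """Check read access and record the decision, allowed or not."""
    allowed = topic in READ_ACL.get(principal, set())
    AUDIT_LOG.append({"principal": principal, "topic": topic, "allowed": allowed})
    return allowed


def mask(principal: str, record: dict) -> dict:
    """Return a copy of the record with this principal's hidden fields masked."""
    hidden = MASKED_FIELDS.get(principal, set())
    return {key: ("****" if key in hidden else value) for key, value in record.items()}


def fetch(principal: str, topic: str, records: list[dict]) -> list[dict]:
    """Simulate the proxy serving a fetch; `records` stands in for broker data."""
    if not authorize(principal, topic):
        raise PermissionError(f"{principal} may not read {topic}")
    return [mask(principal, record) for record in records]
```

In this sketch the producer and the consumer code stay unchanged: the same stored record is returned in full to `fraud-service` and with `card_number` masked to `analytics`, and every attempt, including denied ones, lands in the audit log.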

Why Streaming Growth Is Slowing (And What To Do About It)

Most organizations aren't failing because their streaming tools are bad. They're failing because their operating models are.

Kafka promised freedom: the freedom to move data. But learning how to use it well, understanding how it works, managing access, identifying the data flowing inside, all this requires organizational maturity. It's not a tech problem.

As Stanislav Kozlovski's recent piece on streaming's plateau points out, we're seeing growth deceleration across the board. Confluent's cloud growth is slowing. Stream processing adoption remains niche (Flink generated just ~$14M ARR for Confluent in Q3, barely 1.25% of their business). The industry isn't shrinking, but it's not in hypergrowth mode anymore.

This is a maturity correction. The infrastructure is fine; the governance isn't. Enterprises (or their providers) have mastered running Kafka but are not using it well.

The proxy pattern (combined with true self-service capabilities) is how you move past this bottleneck. It's the natural correction to years of over-centralized complexity, an evolution from "infrastructure-first" to "control-first." Governance becomes dynamic, enforced at the edge, and "shifted left." Streaming architecture can now balance innovation with discipline.

How AI Agents Will Change Streaming Requirements

If 2020 opened the decade of "data in motion," 2025 is the dawn of decisions in motion. The Current keynote hit hard on agent-driven workflows and AI-native architectures. Confluent Intelligence, Streaming Agents, and Real-Time Context Engine all position data streaming as the foundation for the next wave of AI applications.

Is streaming the backbone for agent-driven workflows?

As enterprises adopt agentic systems, the need for real-time, secure, on-demand access to data will only grow. Agents can't wait for batch jobs. They can't wait for slow access approval. They need data access and context now: streamed, filtered, and audited.

I'm a big fan of Kafka's new queue semantics for this. Queues provide concurrency and reliability; proxies provide access, trust, and governance. Together, they form the nervous system of AI: a live data fabric where every decision is observable, auditable, and compliant by design. I spoke about this during my talk at Current, using a chess game as a metaphor for distributed decision-making with LLMs.

Most enterprises aren't there yet, but AI is forcing data governance to grow up.

MCP as the Next Layer: Intent-Based Data Access

Imagine a control plane that doesn't just enforce policy but understands intent. An agent or application doesn't ask where the data lives. It asks what it needs: "customer transactions," "fraud alerts," "active sessions." The MCP layer, built on top of the proxy, resolves the source dynamically, applies transformations, masks sensitive fields, and enforces access without the client knowing where the data resides.
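The resolution step described above can be sketched in a few lines. This is only an illustration of the idea, not an implementation of the Model Context Protocol or of any existing product: the catalog, the cluster and topic names, the roles, and the `resolve` and `apply_policy` functions are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """Where an intent is served from and which policy applies (illustrative)."""
    cluster: str
    topic: str
    masked_fields: frozenset
    allowed_roles: frozenset


# Hypothetical catalog mapping business intents to physical sources.
CATALOG = {
    "customer transactions": Source(
        "eu-prod", "payments.transactions.v2",
        frozenset({"iban"}), frozenset({"fraud-agent", "finance"}),
    ),
    "fraud alerts": Source(
        "us-prod", "risk.alerts.v1",
        frozenset(), frozenset({"fraud-agent"}),
    ),
}


def resolve(intent: str, role: str) -> Source:
    """Map an intent to its source, enforcing least-privilege access by role."""
    source = CATALOG.get(intent.strip().lower())
    if source is None:
        raise LookupError(f"no source registered for intent '{intent}'")
    if role not in source.allowed_roles:
        raise PermissionError(f"role '{role}' may not access '{intent}'")
    return source


def apply_policy(source: Source, record: dict) -> dict:
    """Mask the fields the source marks as sensitive before returning a record."""
    return {k: ("****" if k in source.masked_fields else v) for k, v in record.items()}
```

The caller asks for "customer transactions" and never learns that the data lives in `payments.transactions.v2` on a particular cluster. Moving the topic to another cluster or region would mean changing one catalog entry, not every client.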

This is the natural extension of what proxies already do. They're the connective tissue between applications and streams, with full visibility into requests, flows, and access patterns. They already see everything. The next step is teaching them to reason.

When that happens, data stops being a location problem and becomes a context problem. The proxy becomes a gatekeeper, translator, and policy engine in one: the foundation for MCP with dynamic, least-privilege, on-demand access for humans, services, and AI agents alike.

As data architectures fragment across clouds and AI agents multiply, static pipelines will collapse. The next generation of systems will demand adaptive control, policy that travels with the request, not the infrastructure.

The Real Problem Is Still Basic Streaming Operations

As Yaroslav Tkachenko observed in his Current recap, there's skepticism about whether we're in an AI bubble and whether streaming is trying to escape into it. I share that skepticism: from working with many customers, I can say this is not where the problem is today. There is still a huge need to simply stream data reliably, govern it precisely, enable self-service safely, and innovate faster without losing control. We talk about AI on one side, but the reality is that teams are still struggling with cost, partitions, and understanding how data flows (or should flow).

Since 2022, our mission has been to help customers get more out of what they already have. By making existing systems easier to use, safer to share, and simpler to govern, we enable teams to move data freely and securely to maximize infrastructure usage and turn streaming into an engine of innovation.

In streaming's great convergence, one truth stands: control is the new compute. And the proxy is where it begins.