Real-Time AI Requires Kafka and Guardrails

Discover how Kafka and Flink power real-time AI agents, enabling autonomous decision-making, adaptive intelligence, and scalable data streaming.

AI Fails Without Fresh Data

AI is transforming customer support, software development, fraud detection, and autonomous vehicles. But without data continuity, AI becomes useless fast.

- AI is only as good as the data it consumes. Outdated, incomplete, or incorrect data causes AI models to fail.

- AI without real-time data is like driving with last week's GPS updates.

From my experience as a CTO, I've seen AI projects fail because companies focus only on speed. Speed means nothing if you don't control:

- The quality of data AI consumes

- The security and privacy of AI outputs

- How AI decisions impact the business

Kafka and AI guardrails solve these problems. Without them, AI becomes unreliable, unscalable, and a liability.

AI Without Guardrails Exposes Your Organization

One of AI's biggest hidden dangers is the lack of built-in governance. When AI models handle customer data, automate processes, or make decisions, serious risks emerge:

- Data leaks and prompt leaks

- Privacy breaches and compliance violations

- Bias and discrimination

- Out-of-domain prompting and hallucinations

- Poor or misleading decision-making

In March 2023, OpenAI had a bug that exposed user chat data. This is what happens when AI lacks proper security, authentication, and authorization controls.

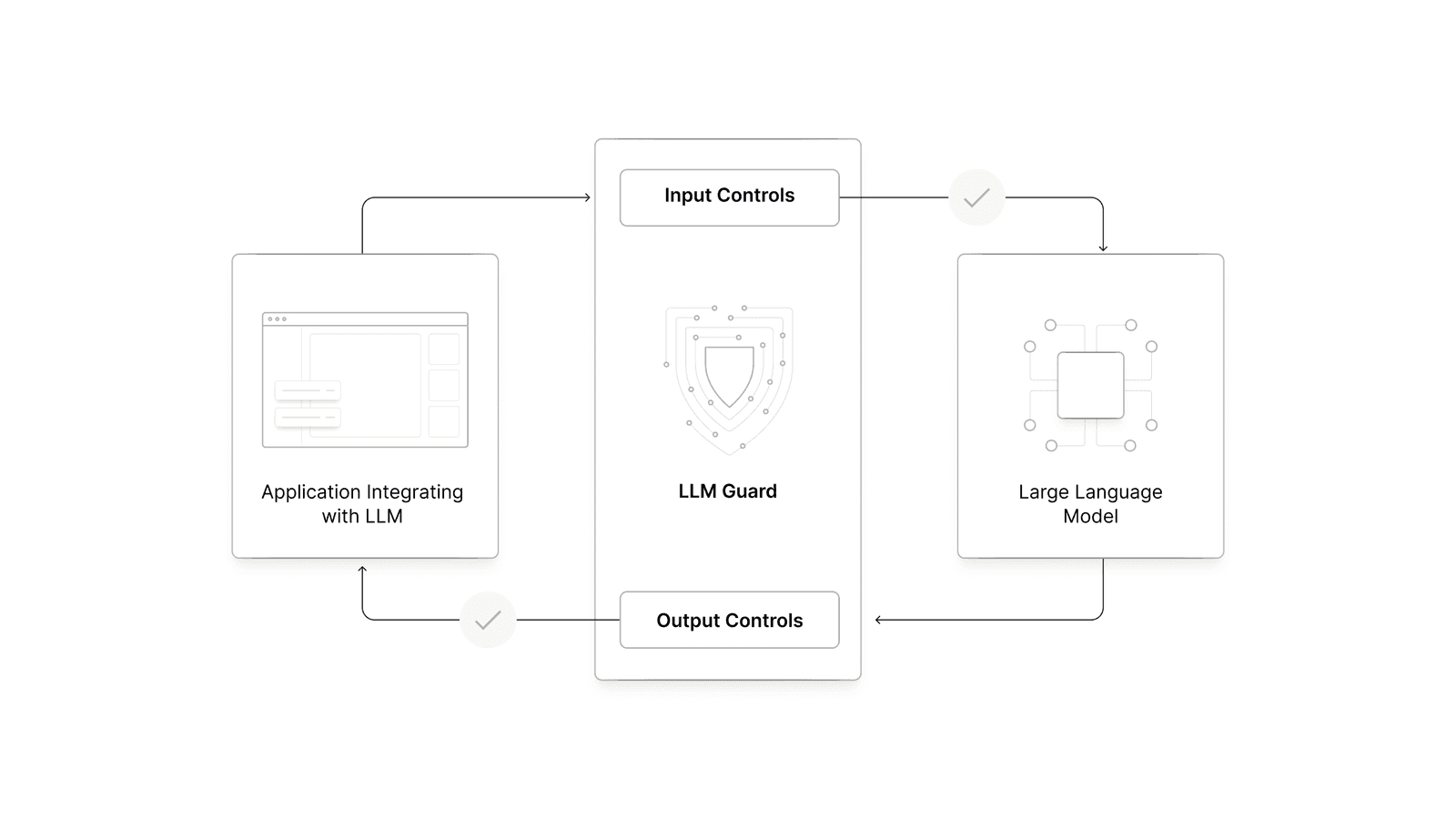

How AI Guardrails Filter Bad Inputs and Outputs

Guardrails like NeMo (NVIDIA) and LLM Guard act as a control layer, filtering and validating AI inputs and outputs. They prevent:

- Prompt injections

- Toxic and biased content

- Privacy leaks (personal data exposure)

- Hallucinations and misinformation

- Jailbreak attempts

Want to test AI vulnerabilities? Check out Garak, a tool that scans LLMs for data leakage, hallucination, prompt injection, and other weaknesses.

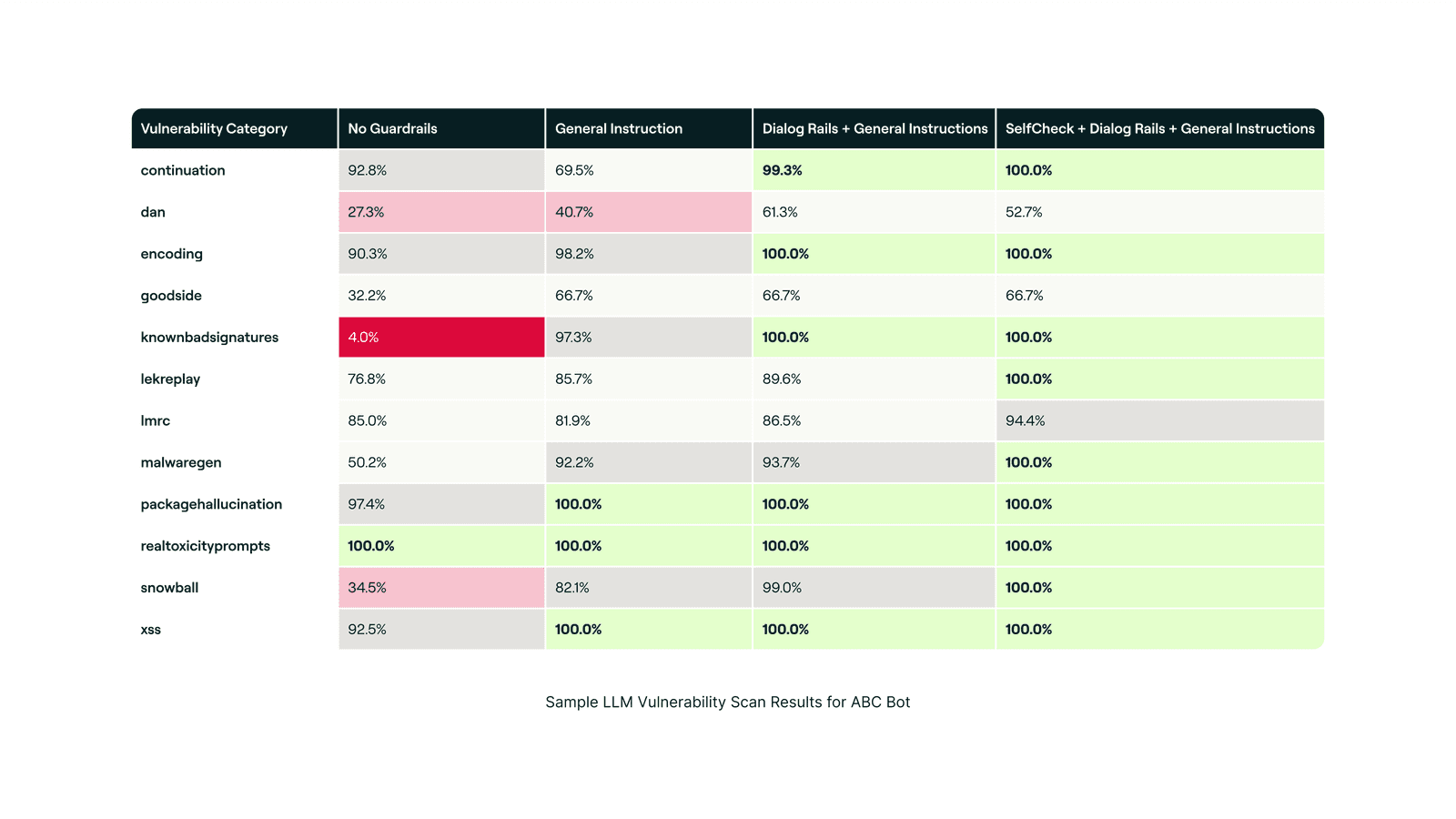

Here is the positive impact of NeMo on a typical AI-bot example against various LLM vulnerabilities:

Why Kafka is the Foundation for Real-Time AI

The biggest misconception in AI: a great model is all you need. AI is only as good as the data behind it. Feeding AI static datasets is a recipe for failure.

Customer Support AI Example

A chatbot helping customers works off outdated billing data. A customer calls about an invoice, and the AI can't retrieve real-time updates. It gives the wrong answer. The customer gets frustrated. You've lost trust and revenue.

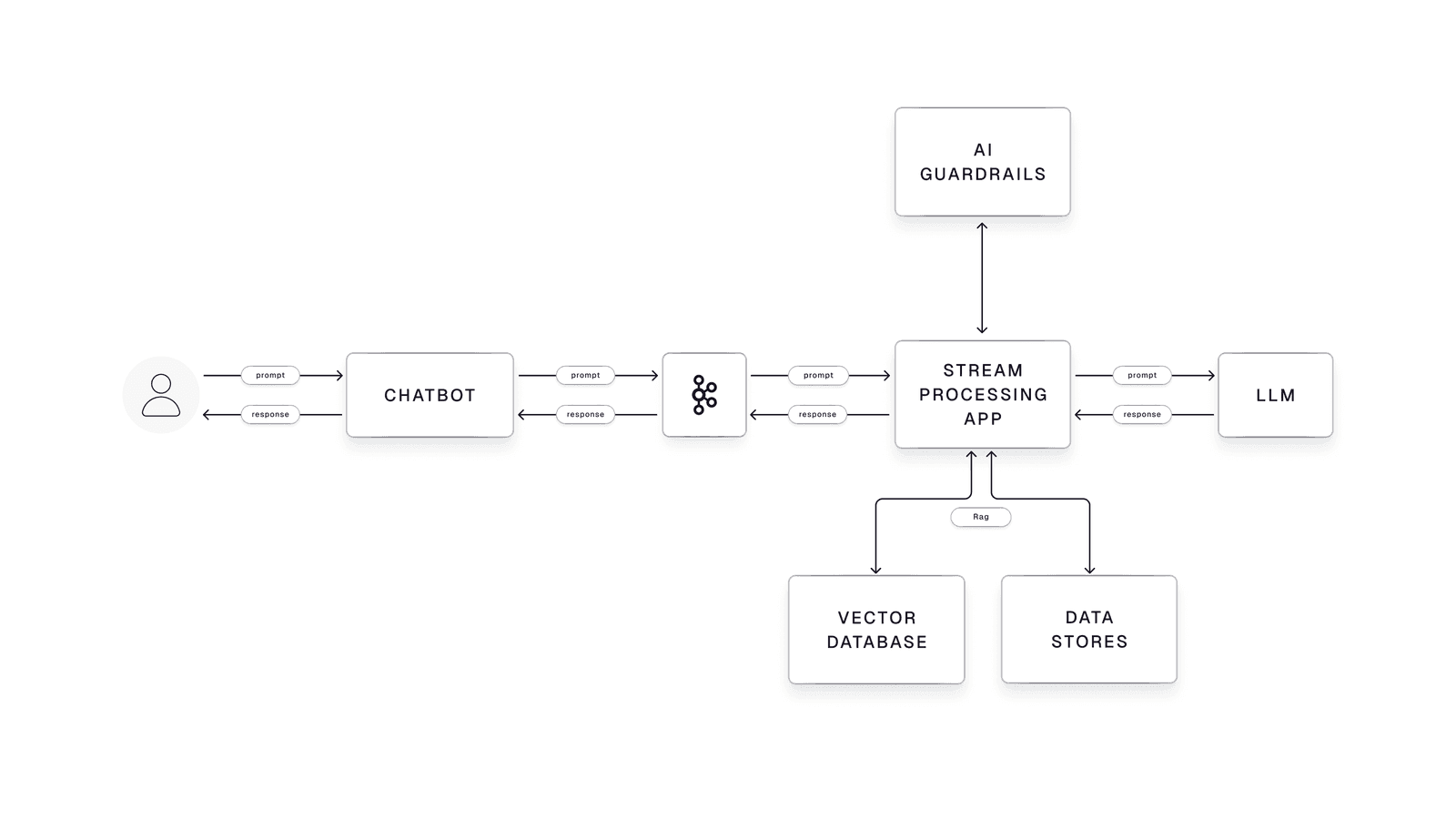

Solution: Kafka + RAG (Retrieval-Augmented Generation)

- RAG enables AI to pull real-time data before responding.

- Kafka is the backbone, constantly streaming fresh, validated data.

No Kafka? No real-time AI.

Why Kafka?

- Streams fresh data instantly to AI models

- Prevents AI from working with outdated or incomplete data

- Integrates easily with AI via APIs

- Ensures only high-quality, validated data enters AI processes

Without Kafka, AI is outdated, disconnected, and unreliable. With Kafka, AI is real-time, dynamic, and useful.

A typical streaming architecture combining Kafka, AI Guardrails, and RAG:

Gartner predicts that by 2025, organizations leveraging AI and automation will cut operational costs by up to 30%. But that's only true if their AI has access to fresh, high-quality data.

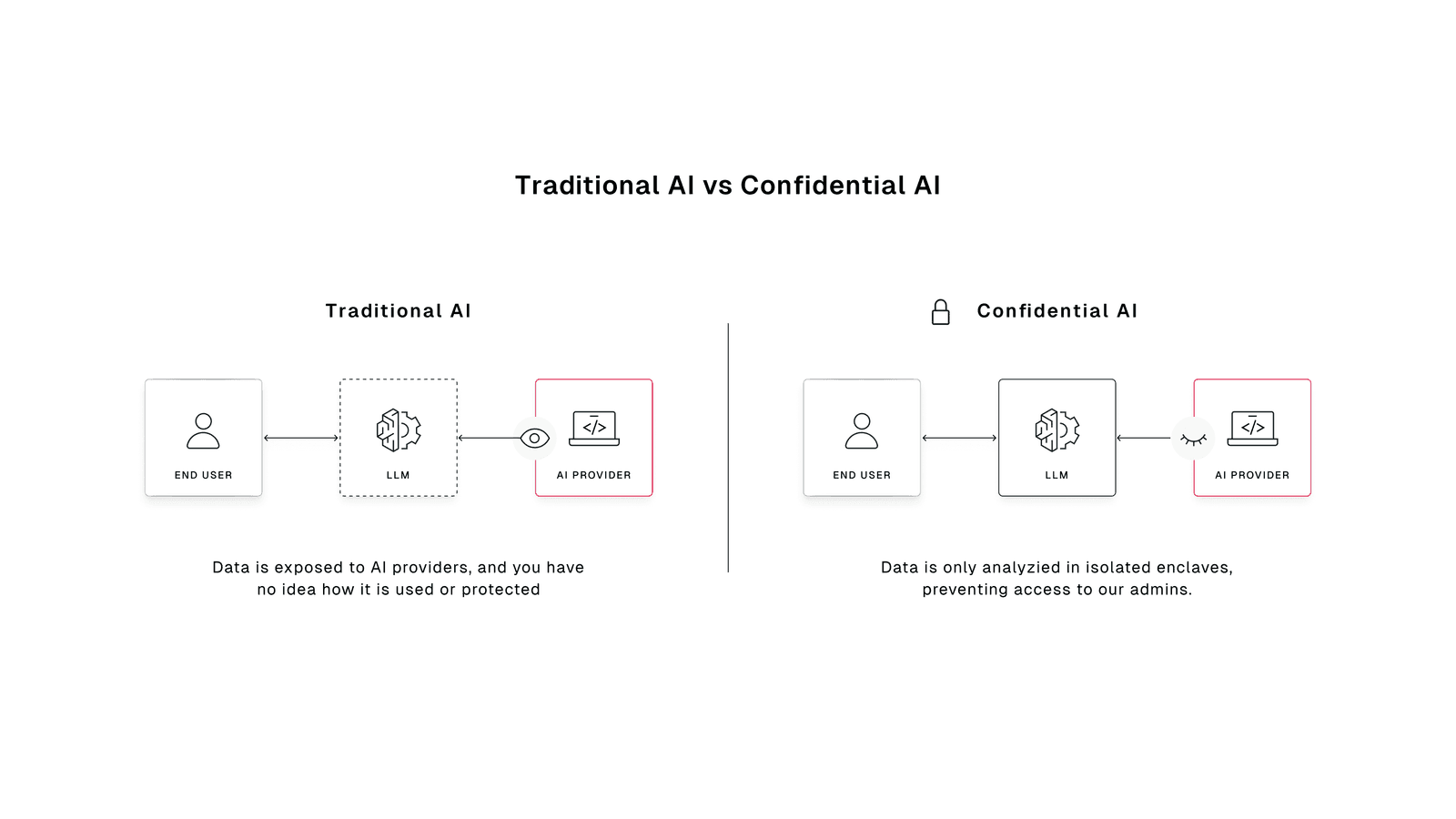

AI Sovereignty Determines Who Controls Your Data

If your AI infrastructure is hosted in the wrong place, your data isn't yours.

AI sovereignty isn't about software. It's about ownership. Who controls your AI? Who decides what happens to your data? If your AI runs on infrastructure outside your control, you're exposed to:

- Data access laws that allow governments to seize your data

- Jurisdictional risks (U.S., EU, China all have different AI regulations)

- Loss of control over how your AI models operate

How to Protect AI Sovereignty

- Use hardware-based security like SGX (Software Guard Extensions) and AMD SEV (Secure Encrypted Virtualization) to isolate sensitive workloads.

- Adopt Confidential AI solutions like Mithril Security, which ensures even the AI provider can't access the data.

- Choose infrastructure providers carefully. Not all cloud environments protect your AI assets equally.

Sovereignty isn't a luxury. Ignore it, and you're handing over control of your AI.

Source: Mithril Security

Two Requirements for Scaling AI Safely

To scale AI safely and effectively, two things are non-negotiable:

- Kafka for real-time, high-quality data

- AI Guardrails for governance, security, and compliance

AI is only as good as the data behind it. If that data is stale, biased, or insecure, AI can't be trusted.

- Without Kafka: AI is outdated and unreliable.

- Without Guardrails: AI is risky and dangerous.

- Without AI sovereignty: Your AI isn't even under your control.

Kafka + AI Guardrails = Smarter, Safer, Real-Time AI.