Why Kafka ACLs Break at Scale and What to Use Instead

Kafka's default security model falls short in production: ACLs become unmanageable, PII is exposed to anyone who can read a topic, and encryption is off by default. Here's how to fix it.

Kafka ships with no security enabled. Most teams know this. What they underestimate is how quickly access control lists (ACLs) become unmanageable once you have more than a handful of topics and users.

Key points:

- Kafka's built-in ACLs work for small deployments but collapse under operational complexity at scale

- ACLs cannot protect Kafka Connect or Schema Registry, and they cannot control PII visibility

- Encryption must be added separately, and doing it wrong breaks downstream consumers

The Problem: ACLs Don't Scale

Kafka ACLs work fine for development and small production deployments. The problems start when you have dozens of teams, hundreds of topics, and thousands of consumer applications.

ACLs are verbose. Unless you lean on wildcard or prefixed resource patterns, every principal-topic-operation combination needs its own entry. Add a new team member? Add their entries. Create a new topic? More entries. Remove someone's access? Hope you find every relevant rule.
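A quick count makes the growth concrete. This minimal sketch uses hypothetical team data and ignores consumer-group and cluster ACLs; it compares literal entries (one per principal, topic, and operation) with Kafka's prefixed resource patterns, which `kafka-acls.sh` exposes through `--resource-pattern-type prefixed`.

```python
from itertools import product

# Hypothetical team data, used only to illustrate the arithmetic.
team_members = ["alice", "bob", "carol"]
team_topics = ["payments.orders", "payments.refunds", "payments.ledger", "payments.audit"]
operations = ["Read", "Write"]


def literal_acl_entries(principals, topics, ops):
    """One ALLOW entry per (principal, topic, operation) with a LITERAL resource pattern."""
    return [(f"User:{p}", "LITERAL", t, op) for p, t, op in product(principals, topics, ops)]


def prefixed_acl_entries(principals, prefix, ops):
    """One ALLOW entry per (principal, operation) on a PREFIXED topic-name pattern."""
    return [(f"User:{p}", "PREFIXED", prefix, op) for p, op in product(principals, ops)]


print(len(literal_acl_entries(team_members, team_topics, operations)))  # 3 * 4 * 2 = 24
print(len(prefixed_acl_entries(team_members, "payments.", operations)))  # 3 * 2 = 6
```

Prefixed patterns absorb new topics, but each new team member still needs their own entries, and offboarding still means finding all of them.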

The maintenance burden grows multiplicatively with users, topics, and operations. Teams respond by granting overly broad permissions to avoid constant access requests, which defeats the purpose of access control entirely.

ACLs also have blind spots. They control access to broker resources such as topics and consumer groups, but not to:

- Kafka Connect connectors

- Schema Registry subjects

- KSQL queries

- Cross-cluster replication

If your security model depends on ACLs alone, you have gaps.

Configuration Guardrails Prevent Mistakes

Kafka has hundreds of configuration options. Misconfiguration causes outages, data loss, and security holes.

The solution: don't let users configure Kafka directly. Place an abstraction layer between teams and the raw configuration. This layer enforces policies, validates settings, and catches errors before they reach production.

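Producer configuration is a common failure point. Incorrect batch sizes cause performance problems. A compression codec that older consumers can't decode breaks them (zstd, for example, requires clients from Kafka 2.1 onward). Disabling idempotence, or relying on a client where it is off by default (the Java producer only enabled it by default in 3.0), allows duplicates on retry. A policy layer rejects invalid configurations before they cause incidents.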
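A minimal sketch of such a check, written as a plain Python function over a producer's config map. The thresholds, the allowed codec list, and the function name are hypothetical policy choices, not Kafka rules; the defaults it assumes for missing keys are those of the Java producer.

```python
# Hypothetical policy thresholds: illustrative values, not Kafka defaults.
MAX_BATCH_SIZE = 1_048_576  # reject batch.size above 1 MiB
# zstd is excluded on the assumption that some consumers run clients older than 2.1.
ALLOWED_COMPRESSION = {"none", "gzip", "snappy", "lz4"}


def validate_producer_config(config: dict) -> list[str]:
    """Return policy violations; an empty list means the config may be deployed."""
    errors = []
    batch_size = int(config.get("batch.size", 16384))  # 16384 bytes: Java producer default
    if not 0 < batch_size <= MAX_BATCH_SIZE:
        errors.append(f"batch.size={batch_size} is outside (0, {MAX_BATCH_SIZE}]")
    codec = config.get("compression.type", "none")
    if codec not in ALLOWED_COMPRESSION:
        errors.append(f"compression.type={codec} is not readable by every consumer")
    # A missing key is treated as true, the Java producer default since 3.0.
    if str(config.get("enable.idempotence", "true")).lower() != "true":
        errors.append("enable.idempotence must stay true to avoid duplicates on retry")
    # Idempotent producers require acks=all (equivalently -1).
    if str(config.get("acks", "all")) not in ("all", "-1"):
        errors.append("acks must be 'all' for idempotent delivery")
    return errors


print(validate_producer_config({"compression.type": "zstd", "enable.idempotence": False}))
```

The layer only accepts a config when the returned list is empty, so a team gets every violation at once instead of fixing them one deploy at a time.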

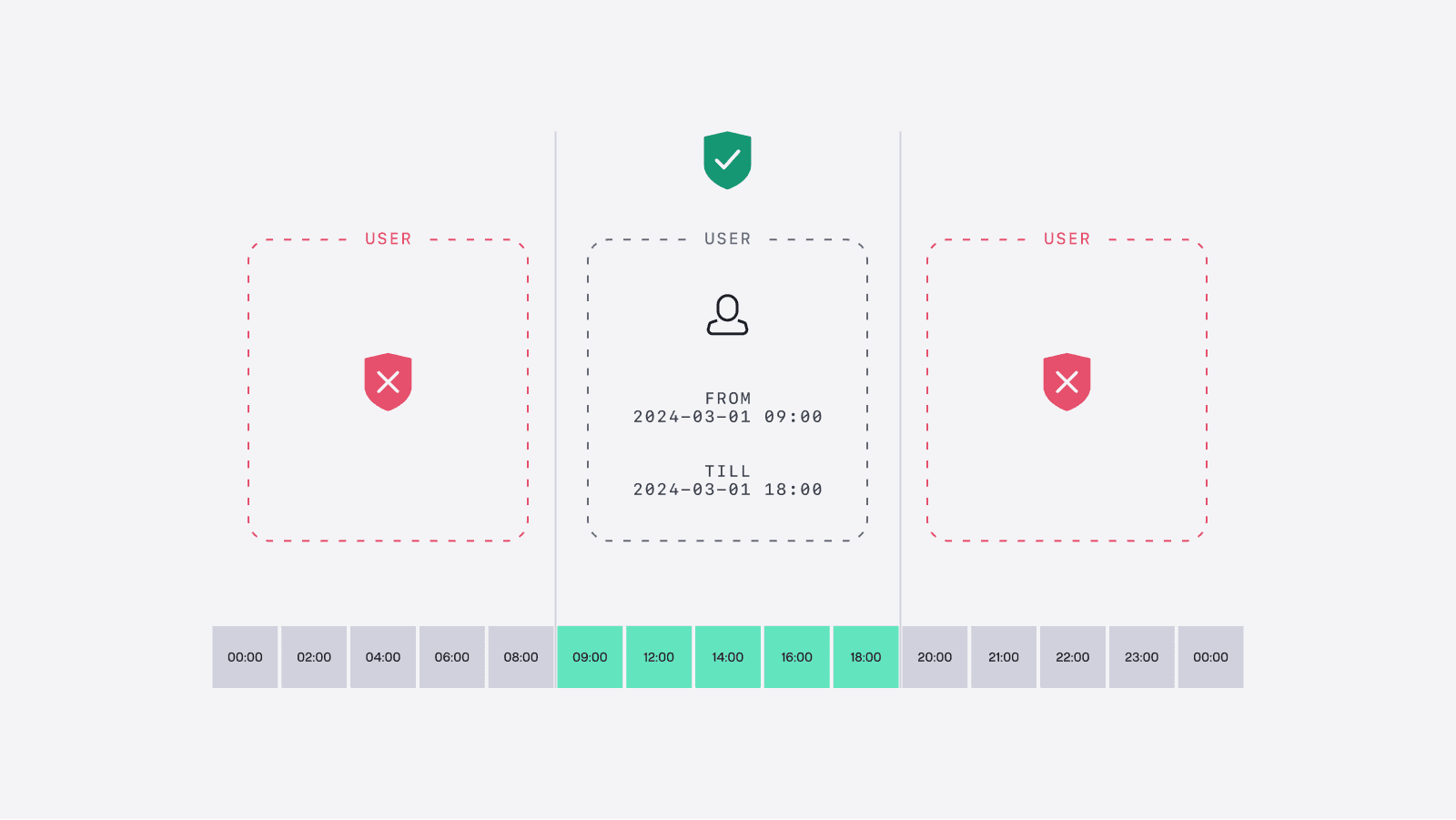

Time-Scoped Access for Debugging and Incidents

Permanent access is a security liability. Engineers need temporary elevated access for debugging, incident response, and one-time data migrations.

Standard ACLs have no concept of time limits. Access granted during an incident stays granted forever unless someone remembers to revoke it.

Implement time-scoped access that automatically expires. When an engineer needs to debug a production issue, grant access for 4 hours instead of permanently. The access disappears when the window closes.
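A minimal sketch of the bookkeeping, assuming a hypothetical in-memory store. Each grant carries an expiry, `revoke_expired` is what a scheduler would run periodically, and the grants it returns are the ACLs to delete; the scheduler and the actual ACL calls are left out. Passing `now` explicitly keeps the logic testable.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    principal: str
    topic: str
    operation: str
    expires_at: datetime


@dataclass
class TimeScopedAccess:
    """Hypothetical grant store: every grant has an expiry, every change is audited."""
    grants: list[Grant] = field(default_factory=list)
    audit_log: list[tuple[datetime, str, Grant]] = field(default_factory=list)

    def grant(self, principal: str, topic: str, operation: str,
              duration: timedelta, now: datetime) -> Grant:
        g = Grant(principal, topic, operation, now + duration)
        self.grants.append(g)
        self.audit_log.append((now, "GRANT", g))
        return g

    def is_allowed(self, principal: str, topic: str, operation: str, now: datetime) -> bool:
        return any(
            (g.principal, g.topic, g.operation) == (principal, topic, operation)
            and now < g.expires_at
            for g in self.grants
        )

    def revoke_expired(self, now: datetime) -> list[Grant]:
        """Drop expired grants and return them so the caller can delete the matching ACLs."""
        expired = [g for g in self.grants if now >= g.expires_at]
        self.grants = [g for g in self.grants if now < g.expires_at]
        self.audit_log.extend((now, "REVOKE", g) for g in expired)
        return expired


start = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
access = TimeScopedAccess()
access.grant("User:oncall-dana", "orders", "Read", timedelta(hours=4), start)
print(access.is_allowed("User:oncall-dana", "orders", "Read", start + timedelta(hours=1)))  # True
print(len(access.revoke_expired(start + timedelta(hours=5))))  # 1
```

The audit log records every grant and revocation with its timestamp, which is the raw material for the compliance trail.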

This approach also creates an audit trail. You know who had access to what data and when, which matters for compliance with GDPR, HIPAA, and SOC 2.

PII Masking: Let Teams Work Without Exposing Sensitive Data

Development teams need realistic data to debug issues. They don't need to see actual social security numbers, credit card details, or medical records.

Field-level masking solves this. The underlying topic contains full PII, but consumers see masked versions based on their permissions. A support engineer might see john.d*@example.com while a data scientist sees [REDACTED] and only authorized systems see the actual value.
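The per-role decision itself is small. This sketch assumes records arrive as Python dicts and that something in the data path calls `mask_record` for each consumer's role; the role names, policy table, and partial-masking format are all hypothetical.

```python
# Hypothetical role policy: how each role sees each PII field.
# "clear" = raw value, "partial" = partially masked, anything else = redacted.
POLICY = {
    "support": {"email": "partial"},
    "data_science": {},
    "billing_service": {"email": "clear", "ssn": "clear"},
}
PII_FIELDS = ("email", "ssn")


def mask_email(email: str) -> str:
    """Keep the first character of the local part and the whole domain."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def mask_record(record: dict, role: str) -> dict:
    """Return a masked copy of record; unknown roles and fields default to [REDACTED]."""
    rules = POLICY.get(role, {})
    masked = dict(record)
    for name in PII_FIELDS:
        if name not in masked:
            continue
        mode = rules.get(name, "redact")
        if mode == "clear":
            continue
        if mode == "partial" and name == "email":
            masked[name] = mask_email(masked[name])
        else:
            masked[name] = "[REDACTED]"
    return masked


event = {"id": 7, "email": "john.doe@example.com", "ssn": "123-45-6789"}
print(mask_record(event, "support"))
# {'id': 7, 'email': 'j***@example.com', 'ssn': '[REDACTED]'}
```

Defaulting to redaction means a new role, or a role someone forgot to configure, sees less data rather than more.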

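This is enforced centrally, between the brokers and the consumers, not in application code. Apache Kafka has no built-in field-level masking, so in practice this means a proxy or gateway in the data path. Consumers don't each have to implement masking logic, and the data governance policy applies consistently regardless of which application reads the topic.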

Encryption: Protect Data at Rest and in Transit

Kafka supports TLS for encryption in transit. Enable it. This prevents eavesdropping on network traffic between producers, brokers, and consumers.
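One way to make "enable it" enforceable is a pre-deployment check over each broker's `server.properties`. This sketch assumes a simple `key=value` file without line continuations; it resolves every entry in `listeners` through `listener.security.protocol.map` and flags any listener whose protocol is not `SSL` or `SASL_SSL`. The function names are hypothetical.

```python
ENCRYPTED_PROTOCOLS = {"SSL", "SASL_SSL"}
# When listener.security.protocol.map is unset, the standard names map to themselves.
DEFAULT_PROTOCOL_MAP = {p: p for p in ("PLAINTEXT", "SSL", "SASL_PLAINTEXT", "SASL_SSL")}


def parse_properties(text: str) -> dict[str, str]:
    """Minimal key=value parser: skips blanks and comments, no line continuations."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def unencrypted_listeners(server_properties: str) -> list[str]:
    """Return listener names whose protocol is not SSL or SASL_SSL (unknown names count)."""
    props = parse_properties(server_properties)
    protocol_map = dict(DEFAULT_PROTOCOL_MAP)
    if "listener.security.protocol.map" in props:
        protocol_map = {}
        for entry in props["listener.security.protocol.map"].split(","):
            name, _, protocol = entry.partition(":")
            protocol_map[name.strip()] = protocol.strip()
    flagged = []
    for listener in props.get("listeners", "").split(","):
        if listener.strip():
            name = listener.strip().split("://", 1)[0]
            if protocol_map.get(name) not in ENCRYPTED_PROTOCOLS:
                flagged.append(name)
    return flagged


config = """
listeners=INTERNAL://:9092,EXTERNAL://:9093
listener.security.protocol.map=INTERNAL:PLAINTEXT,EXTERNAL:SASL_SSL
"""
print(unencrypted_listeners(config))  # ['INTERNAL']
```

Because every listener is checked, a plaintext listener used for broker-to-broker or controller traffic is caught just like a client-facing one.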

Encryption at rest is harder. Kafka has no built-in encryption at rest: messages are written to the broker's disks unencrypted. If someone gains access to the broker's filesystem, they can read your data.

Two options exist:

- Disk-level encryption: Encrypt the underlying storage. Simple but doesn't protect against privileged broker access.

- Application-level encryption: Encrypt messages before producing them. The broker stores ciphertext it cannot decrypt.

Application-level encryption is stronger but introduces complexity. You need key management and rotation policies, and you must ensure that every consumer can decrypt the fields it needs to read.
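A sketch of field-level encryption with rotation in mind, using AES-GCM from the third-party `cryptography` package. The in-memory key ring stands in for a real key management service, and the `key_id:payload` token format is a hypothetical convention: tagging each ciphertext with its key ID is what lets old keys stay readable after new writes move to a new key.

```python
import base64
import os

from cryptography.hazmat.primitives.ciphers.aead import AESGCM

NONCE_BYTES = 12  # 96-bit nonce, the recommended size for AES-GCM


def encrypt_field(value: str, key_id: str, keys: dict[str, bytes], topic: str) -> str:
    """Encrypt one field and prefix the key ID so consumers know which key to use."""
    nonce = os.urandom(NONCE_BYTES)  # must never repeat for the same key
    # The topic name is authenticated (not encrypted): a ciphertext copied into
    # another topic fails to decrypt there.
    ciphertext = AESGCM(keys[key_id]).encrypt(nonce, value.encode(), topic.encode())
    return f"{key_id}:{base64.b64encode(nonce + ciphertext).decode()}"


def decrypt_field(token: str, keys: dict[str, bytes], topic: str) -> str:
    """Decrypt with whichever key the token names; raises if it was tampered with."""
    key_id, _, payload = token.partition(":")  # key IDs must not contain ':'
    raw = base64.b64decode(payload)
    nonce, ciphertext = raw[:NONCE_BYTES], raw[NONCE_BYTES:]
    return AESGCM(keys[key_id]).decrypt(nonce, ciphertext, topic.encode()).decode()


# Rotation: new writes use the newest key; old keys stay in the ring for reading.
keys = {"k1": AESGCM.generate_key(bit_length=256)}
old_token = encrypt_field("123-45-6789", "k1", keys, "customers")
keys["k2"] = AESGCM.generate_key(bit_length=256)
new_token = encrypt_field("987-65-4321", "k2", keys, "customers")
print(decrypt_field(old_token, keys, "customers"))  # 123-45-6789
```

Retiring `k1` then means re-encrypting (or expiring) every record that still names it before removing it from the ring.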

The critical constraint: encryption must not break downstream applications. If you encrypt a field that a consumer expects to filter on, that consumer stops working. Plan your encryption strategy with the full data flow in mind.
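That planning step can be checked mechanically if you keep an inventory of which fields each consumer filters or joins on. The inventory, topic, and consumer names here are hypothetical; the function reports every consumer that an encryption plan would break.

```python
def encryption_conflicts(encrypted_fields: set[str],
                         consumer_dependencies: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each consumer to the fields it filters or joins on that the plan would encrypt."""
    conflicts = {}
    for consumer, fields in consumer_dependencies.items():
        overlap = fields & encrypted_fields
        if overlap:
            conflicts[consumer] = sorted(overlap)
    return conflicts


# Hypothetical plan and consumer inventory for a "customers" topic.
plan = {"ssn", "email"}
dependencies = {
    "fraud-scorer": {"country", "amount"},
    "marketing-dedup": {"email"},  # deduplicates on email, so it would break
}
print(encryption_conflicts(plan, dependencies))  # {'marketing-dedup': ['email']}
```

For each conflict, either leave that field in plaintext or give that consumer access to the decryption key.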

Data Sharing with External Parties

Sharing Kafka data with partners, vendors, or customers introduces new risks. You're exposing your internal data streams to systems you don't control.

Never share raw topics. Create dedicated topics for external consumers with:

- Only the fields they need (no extra PII)

- Transformed data (aggregated, anonymized, or filtered)

- Separate credentials with narrow permissions

- Rate limiting to prevent abuse
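A minimal sketch of the transformation feeding such a dedicated topic, assuming dict-shaped records and a hypothetical per-partner field contract. It uses an allow-list so new upstream fields never leak by default, and swaps the raw customer ID for an HMAC-based pseudonym (a technique chosen for this sketch) so the partner can correlate records without seeing the internal identifier. Rate limiting is not shown; Kafka's client quotas are one place to enforce it.

```python
import hashlib
import hmac

# Hypothetical contract: the only fields this partner may receive.
PARTNER_FIELDS = {"order_id", "status", "country", "total"}


def pseudonymize(value: str, secret: bytes) -> str:
    """Keyed hash: stable per value, so the partner can correlate records without the raw ID."""
    return hmac.new(secret, value.encode(), hashlib.sha256).hexdigest()[:16]


def to_partner_record(record: dict, secret: bytes) -> dict:
    """Build a record for the partner-facing topic from an internal one."""
    # Allow-list, not deny-list: a field added upstream later is dropped by default.
    out = {k: v for k, v in record.items() if k in PARTNER_FIELDS}
    if "customer_id" in record:
        out["customer_ref"] = pseudonymize(str(record["customer_id"]), secret)
    return out


internal = {"order_id": "o-1", "status": "shipped", "country": "DE", "total": 42.5,
            "customer_id": "c-9", "email": "dana@example.com"}
print(sorted(to_partner_record(internal, b"partner-a-secret")))
# ['country', 'customer_ref', 'order_id', 'status', 'total']
```

Using a separate secret per partner keeps two partners from joining their pseudonyms against each other.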

Monitor external consumption patterns. Unusual access patterns, such as reading historical data that should no longer be relevant, may indicate credential theft or policy violations.
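A sketch of one such check: flagging reads of records older than the window a partner should need. It assumes you can collect, for each read, the principal, the topic, and the record timestamp; how you collect them, the function name, and the 7-day threshold are assumptions.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical expectation: partners only ever need the last 7 days of data.
MAX_EXPECTED_AGE = timedelta(days=7)


def flag_stale_reads(fetches, now, max_age=MAX_EXPECTED_AGE):
    """fetches: iterable of (principal, topic, record_timestamp).
    Return {(principal, topic): oldest record age} for reads older than max_age."""
    flagged = {}
    for principal, topic, record_ts in fetches:
        age = now - record_ts
        if age > max_age:
            key = (principal, topic)
            flagged[key] = max(age, flagged.get(key, age))
    return flagged


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
fetches = [
    ("User:partner-a", "orders.partner-a", now - timedelta(hours=2)),
    ("User:partner-b", "orders.partner-b", now - timedelta(days=30)),
    ("User:partner-b", "orders.partner-b", now - timedelta(days=90)),
]
print(flag_stale_reads(fetches, now))
# {('User:partner-b', 'orders.partner-b'): datetime.timedelta(days=90)}
```

Reporting the oldest age per principal and topic gives whoever investigates a quick sense of how far back a credential reached.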

Implementation Priority

Start with these steps in order:

- Enable TLS for all broker connections. This is table stakes.

- Replace direct ACL management with a policy layer that handles permissions programmatically.

- Implement time-scoped access for elevated permissions.

- Add field-level masking for topics containing PII.

- Evaluate encryption at rest based on your threat model and compliance requirements.

Kafka security is not a one-time project. Your requirements will change as you add topics, teams, and use cases. Build a foundation that can evolve rather than one that requires rework at every stage of growth.