Why Streaming Data Quality Requires Proactive Monitoring, Not Reactive Fixes

Master data quality in streaming with proactive monitoring, use-case checks, and real-time alerts. Build trust in data for AI and real-time decisions.

Real-time decision-making is only as good as the data behind it. The problem: most organizations still fix data issues after they have caused damage, while streaming demands prevention.

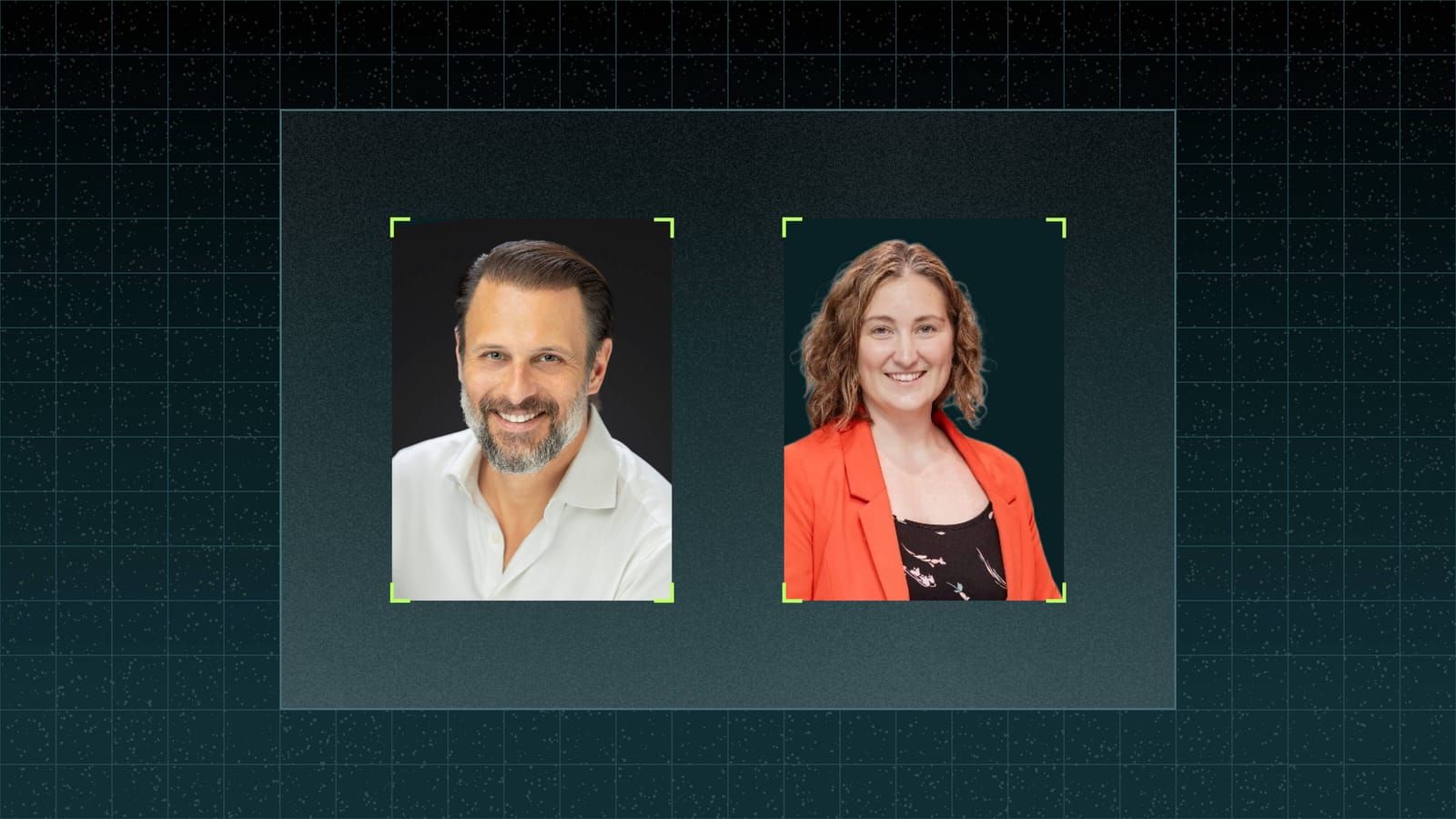

Frances O'Rafferty and I discussed these challenges in our webinar "From Chaos to Control." Here are the key strategies.

Stop Fixing Problems After They Happen

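Traditional data quality focused on remediation: find bad records, then fix them. That worked for batch processing, where data sits still long enough to be inspected and corrected. It fails for high-velocity streaming, where bad records can reach downstream consumers before anyone notices.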

Proactive management requires:

- Continuous observation: Monitor data as it flows, not after it lands.

- Use-case-driven checks: Quality rules must match each application's specific needs.

- Real-time alerts: Notifications must move at data speed, not report speed.
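To make the three requirements concrete, here is a minimal Python sketch. It assumes records arrive as dictionaries from any iterable (a stand-in for a real stream consumer), and every name in it (`Rule`, `RULES_BY_USE_CASE`, `monitor_stream`, the `fraud_detection` and `marketing_analytics` use cases) is hypothetical. Each record is checked as it flows past, only against the rules its consumer cares about, and an alert fires immediately rather than in a later report.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List

Record = Dict[str, Any]


@dataclass
class Rule:
    name: str
    check: Callable[[Record], bool]  # returns True when the record passes


# Hypothetical use cases: each consumer declares only the rules it needs.
RULES_BY_USE_CASE: Dict[str, List[Rule]] = {
    "fraud_detection": [
        Rule("has_card_id", lambda r: bool(r.get("card_id"))),
        Rule(
            "amount_positive",
            lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] > 0,
        ),
    ],
    "marketing_analytics": [
        Rule("has_country", lambda r: bool(r.get("country"))),
    ],
}


def failed_rules(record: Record, use_case: str) -> List[str]:
    """Return the names of the use case's rules that this record violates."""
    return [rule.name for rule in RULES_BY_USE_CASE[use_case] if not rule.check(record)]


def monitor_stream(
    records: Iterable[Record], use_case: str, alert: Callable[[str], None]
) -> int:
    """Check each record as it arrives and alert immediately on failure.

    Returns the number of records that passed every rule for the use case.
    """
    passed = 0
    for offset, record in enumerate(records):
        failures = failed_rules(record, use_case)
        if failures:
            alert(f"[{use_case}] record {offset} failed: {', '.join(failures)}")
        else:
            passed += 1
    return passed


if __name__ == "__main__":
    events = [
        {"card_id": "c1", "amount": 42.0, "country": "IE"},
        {"card_id": "", "amount": -5, "country": "FR"},
    ]
    # The same events are judged differently depending on who consumes them.
    print(monitor_stream(events, "fraud_detection", print))
    print(monitor_stream(events, "marketing_analytics", print))
```

In this sketch the second event fails both fraud-detection rules yet is perfectly usable for marketing analytics, which is the point of use-case-driven checks: "bad" depends on the consumer. In production the `alert` callback would route to a paging or chat system instead of `print`.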

"Quality isn't about making sure data is correct," Frances noted. "It's about ensuring it meets the needs of the use case it's supporting."

How to Build Trust in Streaming Data

Trust enables confident decision-making, especially for AI and machine learning systems.

Three fundamentals:

- Transparency: Visible data lineage lets stakeholders trace origins and transformations.

- Consistency: Validation rules must align across systems to prevent discrepancies.

- Change communication: Explain when and why data changes (new fields, altered definitions) so consumers aren't caught off guard.

Poor data quality leads to incorrect AI outputs and costly operational failures. Trusted data enables faster decisions.

Treat Data Quality Debt Like Technical Debt

Unresolved data issues compound over time. A pragmatic approach:

- Prioritize critical data: Focus quality efforts where poor data impacts business outcomes.

- Identify root causes: Categorize issues (such as duplicates or missing fields) so you can trace them to their source and fix the origin, not just the symptom.

- Fix forward: Prevent new issues while remediating historical problems.
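Categorizing issues by type and origin can start very simply. The Python sketch below is illustrative only: the required fields, the `order_id` deduplication key, and the `source` field are hypothetical. It tallies duplicates and missing fields per source, so the most frequent (category, source) pairs surface first and can be prioritized.

```python
from collections import Counter
from typing import Any, Dict, Iterable

# Hypothetical schema for illustration.
REQUIRED_FIELDS = ("order_id", "customer_id", "amount")


def categorize_issues(
    records: Iterable[Dict[str, Any]], key: str = "order_id"
) -> Counter:
    """Count issues by (category, source) so recurring origins stand out."""
    seen = set()  # keys already observed, used to detect duplicates
    issues: Counter = Counter()
    for record in records:
        source = record.get("source", "unknown")
        # Missing-field issues: absent, None, or empty-string values.
        for field in REQUIRED_FIELDS:
            if record.get(field) in (None, ""):
                issues[("missing:" + field, source)] += 1
        # Duplicate issues: a key we have already seen.
        record_key = record.get(key)
        if record_key is not None:
            if record_key in seen:
                issues[("duplicate", source)] += 1
            else:
                seen.add(record_key)
    return issues


if __name__ == "__main__":
    sample = [
        {"order_id": 1, "customer_id": "a", "amount": 10, "source": "web"},
        {"order_id": 1, "customer_id": "a", "amount": 10, "source": "web"},
        {"order_id": 2, "customer_id": None, "amount": 5, "source": "pos"},
        {"order_id": 3, "amount": 7, "source": "pos"},
    ]
    # Highest-count (category, source) pairs are the first candidates to fix.
    for (category, source), count in categorize_issues(sample).most_common():
        print(f"{category} from {source}: {count}")
```

The unbounded `seen` set is fine for a bounded sample; on a live stream you would typically bound it, for example with a time window, so memory does not grow forever.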

"If it's not impacting your business or insights, let it go," Frances advised. "Focus on what really matters."

Where Data Quality Is Heading

Frances predicted three trends:

- Use-case specificity: Quality standards tailored to different data consumers.

- Integrated automation: Quality checks embedded at every stage of the data lifecycle.

- Gamification: Leaderboards and hackathons to drive team engagement.

The goal: align people, processes, and technology to make data quality everyone's responsibility.

Next Steps

Watch the full webinar replay for a deeper exploration of these topics.

Contact us to learn how Conduktor can help you achieve data quality at scale.