Audit Logging for Streaming Platforms

Audit logging is the practice of recording who did what, when, and where in a system. For streaming platforms like Apache Kafka, Apache Flink, or Pulsar, audit logging becomes both critical and challenging due to the distributed nature of these systems and the high volume of operations they handle.

As organizations adopt streaming platforms for mission-critical workloads, they face increasing pressure to demonstrate compliance with regulations like GDPR, HIPAA, SOC2, and PCI-DSS. Audit logs provide the paper trail needed to prove that data is handled correctly, access is controlled, and changes are tracked.

Why Audit Logging Matters for Streaming Platforms

Streaming platforms sit at the heart of modern data architectures, moving sensitive data between systems in real-time. A single misconfigured topic ACL or unauthorized schema change can expose private information or break downstream applications.

Audit logging serves several key purposes:

- Compliance and Regulatory Requirements: Many regulations require organizations to maintain detailed records of who accessed what data and when. For example, GDPR requires demonstrating that personal data is protected, while SOC2 mandates tracking of administrative changes.

- Security Incident Investigation: When a security breach occurs, audit logs help teams reconstruct what happened. Did someone delete a critical topic? Who modified the consumer group offsets? Which user updated the schema registry?

- Operational Troubleshooting: Beyond security, audit logs help debug production issues. If a consumer suddenly stops processing messages, audit logs can reveal whether someone changed the ACLs, modified the schema, or altered the topic configuration.

- Change Management: In large organizations, multiple teams may interact with the same streaming platform. Audit logs provide accountability and help track changes across team boundaries.

Unique Challenges in Streaming Environments

Unlike traditional databases with centralized audit logs, streaming platforms present unique challenges:

- Distributed Architecture: Kafka clusters consist of multiple brokers, ZooKeeper/KRaft nodes, Schema Registries, and Connect workers. Each component has its own logs and security model, making centralized auditing difficult.

- High Volume of Events: Streaming platforms process millions of messages per second. While you typically don't audit every message (that would be prohibitive), you do need to audit administrative operations, which can still generate substantial log volume.

- Multiple Access Patterns: Users interact with streaming platforms through various interfaces: CLI tools, REST APIs, web UIs, and programmatic clients. Each access path needs consistent audit logging.

- Immutability Concerns: Audit logs themselves must be tamper-proof. If someone can modify or delete audit logs, they lose their value for compliance and security.

What Should Be Audited

A comprehensive audit logging strategy for streaming platforms should capture several categories of events:

Administrative Operations

- Topic Management: Creating, deleting, or modifying topics (partition count, retention, compaction settings)

- ACL Changes: Granting or revoking permissions for users or service accounts

- Configuration Updates: Changes to broker configurations, quotas, or cluster settings

- User Management: Creating, modifying, or deleting service accounts and API keys

Schema Registry Events

- Schema Evolution: Registering new schemas, updating compatibility settings

- Schema Deletion: Removing schemas (soft or hard deletion)

- Access Control: Changes to schema-level permissions

Consumer Group Operations

- Offset Resets: Manual offset resets can cause duplicate processing or data loss

- Group Membership: Tracking which consumers join or leave groups

- Group Deletion: Removing consumer groups and their committed offsets

Connect and Stream Processing

- Connector Lifecycle: Creating, updating, pausing, or deleting connectors

- Task Failures: Failed tasks that might indicate configuration or permission issues

Implementation Patterns

Native Kafka Audit Logging

Apache Kafka itself provides limited built-in audit logging through authorizer plugins. The AclAuthorizer logs authorization decisions, but these logs are scattered across broker log files and can be difficult to aggregate and analyze.

For production environments, organizations typically implement custom authorizers that write audit events to a dedicated Kafka topic. This approach has several advantages:

Topic: audit-logs

Message: {

"timestamp": "2025-12-07T10:30:45Z",

"user": "data-eng-team",

"operation": "DELETE_TOPIC",

"resource": "customer-events",

"outcome": "SUCCESS",

"source_ip": "10.0.1.45"

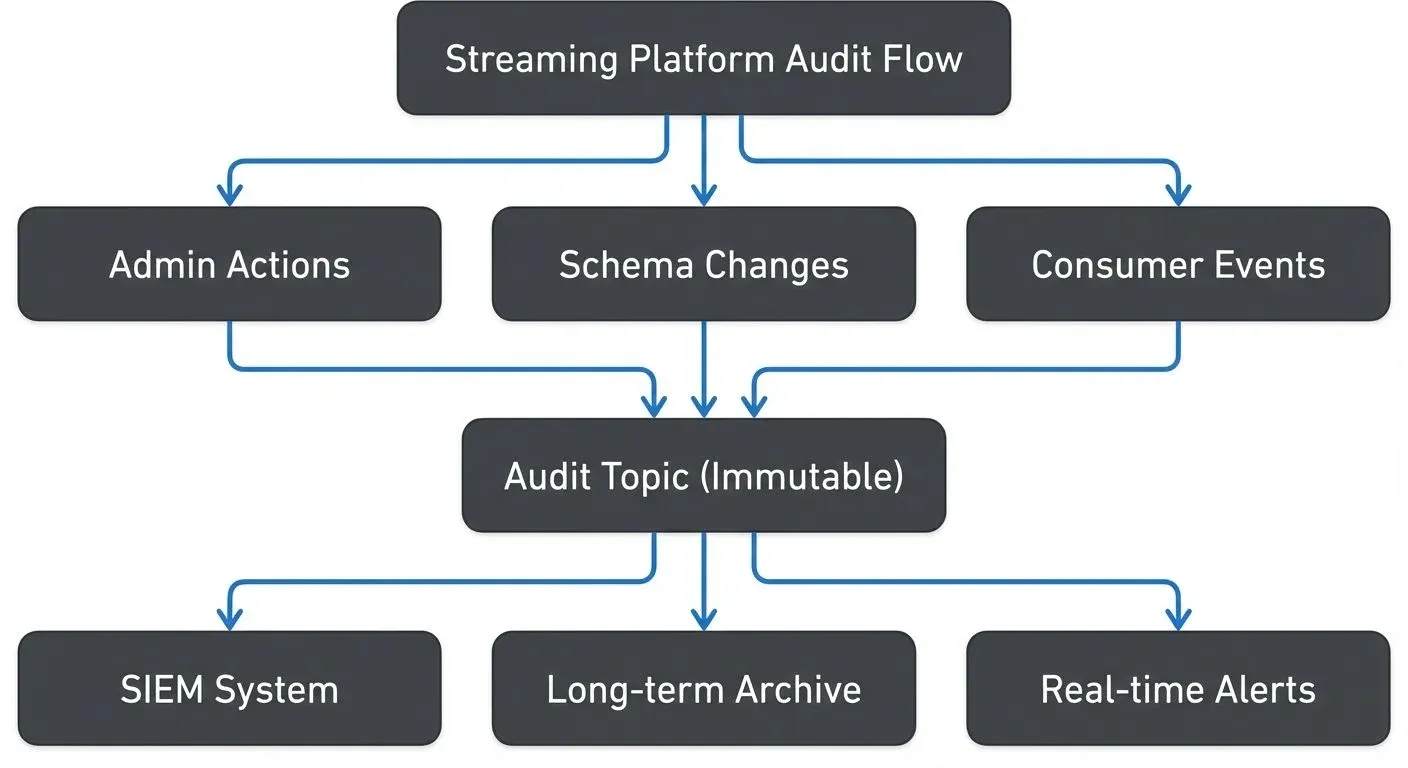

}By storing audit logs in Kafka itself, you leverage the platform's durability and retention capabilities. The audit topic can be consumed by SIEM systems, stored in long-term archival storage, or analyzed in real-time for suspicious activity.

Centralized Audit Collection

For environments with multiple Kafka clusters, Schema Registries, and Connect clusters, centralized audit collection becomes essential. This typically involves:

- Standardized Audit Format: Define a common schema for all audit events across components

- Central Audit Topic or Database: Aggregate logs from all sources into a queryable store

- Retention Policies: Implement long-term retention (often 7+ years for compliance)

- Access Controls: Protect audit logs with strict permissions to prevent tampering

Governance platforms provide built-in audit logging that captures operations across the entire Kafka ecosystem through a unified interface. When users create topics, modify schemas, or change ACLs, these actions are automatically logged with full context (who, what, when, why). This centralized approach simplifies compliance reporting and reduces the burden of implementing custom audit solutions.

Real-World Example: Schema Registry Audit Trail

Consider a financial services company using Kafka to stream transaction data. They use Avro schemas in Schema Registry to ensure data quality. Here's why audit logging matters:

A developer accidentally updates a schema with a breaking change, removing a required field. Within minutes, downstream applications start failing. Without audit logs, the team would need to manually review recent schema versions and guess who made the change.

With proper audit logging, the team immediately sees:

2025-12-07 14:23:18 | user: john.doe@company.com

operation: REGISTER_SCHEMA

subject: transactions-value

version: 12

compatibility: BACKWARD

result: SUCCESSThe team can contact John directly, understand the reasoning, and quickly roll back to version 11 while they fix the issue.

Best Practices

- Immutable Storage: Write audit logs to append-only storage or use Kafka topics with

retention.ms=-1(infinite retention) and strict ACLs preventing deletion. Note:delete.retention.msis a different setting used for tombstone retention in compacted topics, not for general retention. - Include Context: Capture not just the action, but the context: source IP, authentication method, API client version, and any relevant metadata.

- Separate Audit Traffic: Use dedicated topics or partitions for audit logs to prevent them from being mixed with application data.

- Monitor Audit Logs: Set up alerts for suspicious patterns like bulk deletions, privilege escalations, or access from unusual IP addresses.

- Regular Review: Compliance frameworks require periodic review of audit logs. Automate reports for security and compliance teams.

- Test Restoration: Verify that audit logs can be restored from backups. An audit log you can't retrieve during an investigation is worthless.

Integration with Compliance Frameworks

Different compliance frameworks have specific audit logging requirements:

- SOC2: Requires tracking all administrative changes, user access, and data modifications with timestamps and user attribution.

- GDPR: Mandates logging access to personal data, data deletions (right to be forgotten), and data exports (data portability). For comprehensive GDPR implementation guidance, see GDPR Compliance for Data Teams.

- HIPAA: Requires tracking all access to protected health information (PHI), including who viewed what data and when.

- PCI-DSS: Demands detailed logs of access to cardholder data environments, with secure storage and regular review.

Streaming platforms need to map their audit events to these framework requirements. Tracking what data flows where becomes essential, for comprehensive guidance on building this visibility, see Data Lineage Tracking: Data from Source to Consumption.

For example, a GDPR-compliant audit log might track:

- Which topics contain personal data (through metadata tagging)

- Who accessed those topics (consumer groups, connect workers)

- Data deletion operations (topic cleanup, compaction)

- Export operations (connector pulls for user data requests)

Summary

Audit logging is a fundamental requirement for operating streaming platforms in regulated environments. While Apache Kafka and related technologies provide some basic audit capabilities, production deployments typically require custom solutions or dedicated platforms to achieve comprehensive, centralized audit logging.

Key takeaways:

- Audit logs must capture administrative operations, schema changes, ACL modifications, and consumer group operations

- The distributed nature of streaming platforms makes centralized audit collection challenging but essential

- Audit logs should be immutable, context-rich, and stored with long retention periods

- Different compliance frameworks have specific requirements that must be mapped to streaming platform operations

- Governance platforms can simplify audit logging by providing unified audit trails across the entire Kafka ecosystem

By implementing robust audit logging, organizations gain the visibility needed for compliance, security incident response, and operational troubleshooting while building trust with regulators and customers.

Related Concepts

- Streaming Audit Logs - Implementing audit trails for real-time systems

- Policy Enforcement in Streaming - Automated governance with audit trails

- Data Governance Framework: Roles and Responsibilities - Organizational structure for audit compliance

Sources and References

- Apache Kafka Documentation - Security - Official documentation on Kafka authorization and security features

- Confluent - Audit Logs - Confluent's approach to audit logging in Confluent Platform and Confluent Cloud

- NIST Special Publication 800-92 - Guide to Computer Security Log Management - Comprehensive guide on security log management best practices

- SOC 2 Compliance Requirements - AICPA's framework for security, availability, and confidentiality controls

- GDPR Article 30 - Records of Processing Activities - Requirements for maintaining records of data processing

Written by Stéphane Derosiaux · Last updated February 11, 2026