Streaming Audit Logs: Traceability and Compliance for Real-Time Systems

In the world of data streaming, where information flows continuously at high velocity, maintaining a complete audit trail is essential for regulatory compliance, security investigations, and operational accountability. Streaming audit logs provide the critical traceability needed to answer fundamental questions: who accessed what data, when did they access it, and what operations did they perform?

As streaming platforms become central to enterprise data architectures, organizations face increasing pressure to demonstrate compliance with regulations like GDPR, SOX, HIPAA, and PCI-DSS. Unlike batch systems where audit trails can be reconstructed from transaction logs, streaming systems require purpose-built audit capabilities that can capture events in real-time without impacting performance. For broader coverage of authentication and authorization mechanisms that generate audit events, see Access Control for Streaming.

What to Audit in Streaming Systems

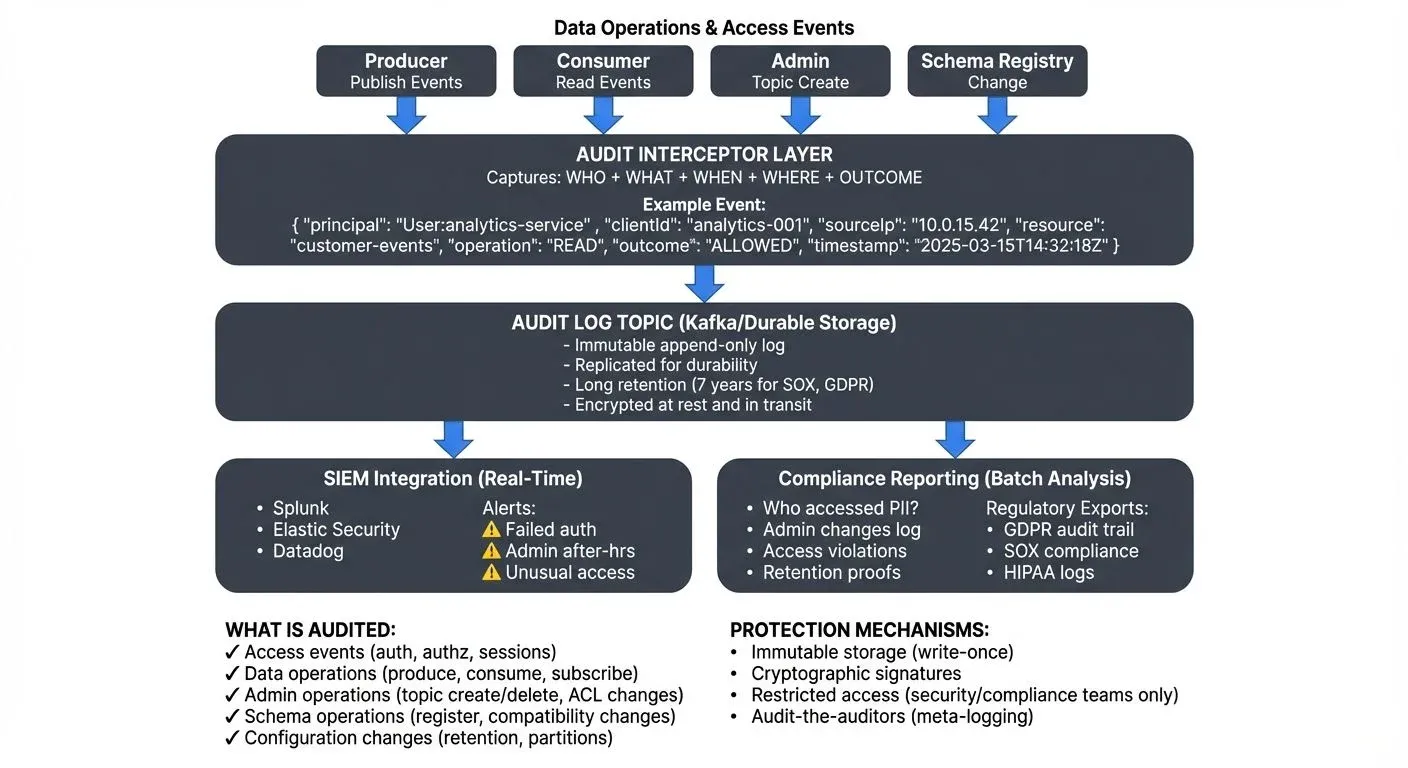

Comprehensive audit logging in streaming platforms must capture multiple categories of events. Access events form the foundation, recording every authentication attempt, authorization decision, and session establishment. When a client connects to a Kafka cluster, audit logs should capture the principal identity, source IP address, authentication method, and whether access was granted or denied.

- Data operations represent the core activity in streaming systems. Every message produced to a topic, every consumer group read, and every topic subscription should generate an audit event. These logs answer questions like "who read customer data from this topic?" or "which application wrote to this financial transactions stream?"

- Administrative operations have far-reaching implications and must be meticulously tracked. Topic creation and deletion, configuration changes, ACL modifications, and cluster management operations all require audit trails. A single misconfigured topic or altered ACL can expose sensitive data or disrupt critical services.

- Schema operations in systems using schema registries add another audit dimension. Schema registrations, compatibility changes, and schema deletions impact downstream consumers and data contracts. Audit logs provide the evidence trail needed to understand when breaking changes were introduced. For detailed coverage of schema evolution and governance, see Schema Registry and Schema Management.

Kafka's Audit Capabilities

Apache Kafka provides several mechanisms for audit logging, though the native capabilities require enhancement for enterprise needs. With Kafka 4.0+ and KRaft mode (Kafka Raft, which replaced ZooKeeper), the audit logging architecture has been streamlined, eliminating the need to audit ZooKeeper operations while centralizing all security decisions in the Kafka brokers themselves. For comprehensive coverage of KRaft architecture and migration, see Understanding KRaft Mode in Kafka.

The authorizer interface (a pluggable component that makes access control decisions) allows custom implementations that log every authorization decision. When a client attempts to read from a topic, the authorizer logs the principal identity, requested resource, operation type, and access decision, providing the "who tried to do what, and was it allowed?" foundation of audit trails.

Request logging at the broker level captures detailed information about client interactions. Kafka brokers can log metadata about produce and fetch requests, including timestamps, client IDs, and byte counts. However, this logging must be carefully configured to avoid overwhelming storage systems with high-volume metadata.

Modern Kafka distributions and enterprise platforms extend these capabilities with structured audit logs, machine-parseable formats like JSON or standardized schemas (such as CloudEvents or the Open Cybersecurity Schema Framework) that enable automated analysis and correlation. A comprehensive audit event includes:

- Who: Principal identity, client ID, source IP, authentication method

- What: Resource accessed (topic, consumer group, cluster config), operation type (read, write, alter)

- When: Timestamp with millisecond precision, session duration

- Where: Broker ID, cluster identifier, data center or region

- Outcome: Success, failure, authorization denied, error codes

Here's an example structured audit log in JSON format:

{

"eventId": "ae7f3c21-9b4e-4d2a-8f1c-5e6d7c8b9a0f",

"eventTime": "2025-03-15T14:32:18.742Z",

"eventType": "kafka.authorization.attempt",

"principal": "User:analytics-service",

"clientId": "analytics-consumer-prod-001",

"sourceIp": "10.0.15.42",

"authMethod": "SASL_SSL",

"resource": {

"type": "TOPIC",

"name": "customer-events",

"operation": "READ"

},

"broker": {

"id": 3,

"cluster": "production-us-east-1"

},

"outcome": "ALLOWED",

"metadata": {

"consumerGroup": "analytics-aggregator",

"partition": 7,

"offset": 1523847

}

}Custom audit interceptors can be implemented to capture application-level context, enriching logs with business metadata like transaction IDs or customer identifiers. In Kafka 4.0+, these interceptors integrate seamlessly with KRaft's unified security model.

Here's a simplified example of a custom Kafka authorizer that logs authorization decisions:

public class AuditingAuthorizer implements Authorizer {

private final Logger auditLogger = LoggerFactory.getLogger("kafka.audit");

private Authorizer delegateAuthorizer;

@Override

public void configure(Map<String, ?> configs) {

// Initialize delegate authorizer (e.g., AclAuthorizer)

delegateAuthorizer = new AclAuthorizer();

delegateAuthorizer.configure(configs);

}

@Override

public List<AuthorizationResult> authorize(

AuthorizableRequestContext requestContext,

List<Action> actions) {

List<AuthorizationResult> results = delegateAuthorizer.authorize(requestContext, actions);

// Log each authorization decision

for (int i = 0; i < actions.size(); i++) {

Action action = actions.get(i);

AuthorizationResult result = results.get(i);

AuditEvent event = AuditEvent.builder()

.eventTime(Instant.now())

.principal(requestContext.principal().getName())

.clientId(requestContext.clientId())

.clientAddress(requestContext.clientAddress().toString())

.resourceType(action.resourcePattern().resourceType())

.resourceName(action.resourcePattern().name())

.operation(action.operation())

.outcome(result == AuthorizationResult.ALLOWED ? "ALLOWED" : "DENIED")

.build();

// Write to structured audit log (JSON)

auditLogger.info(event.toJson());

}

return results;

}

}This authorizer wraps the standard ACL authorizer, logging every authorization decision in structured format while maintaining existing security policies.

Storage, Retention, and Analysis

Audit logs in streaming systems generate massive volumes of data, requiring sophisticated storage strategies. Log aggregation systems collect audit events from distributed brokers and channel them to centralized storage. Many organizations write audit logs to dedicated Kafka topics, leveraging the platform's own durability guarantees and replay capabilities.

- Retention policies must balance compliance requirements against storage costs. Financial regulations like SOX may require seven years of retention, while GDPR mandates the ability to delete individual records on request. Tiered storage architectures keep recent audit data in high-performance systems for active investigations while archiving historical logs to cost-effective object storage.

- Analysis patterns vary by use case. Security investigations require the ability to trace all actions by a specific principal or from a particular IP address. Compliance audits need reports showing who accessed sensitive data categories. Access reviews aggregate patterns to identify unusual behavior or excessive permissions.

- Integration with SIEM platforms (Security Information and Event Management) enables correlation with broader security events. When audit logs flow into tools like Splunk, Elastic Security, Datadog Security, AWS Security Hub, or Azure Sentinel, security teams can detect patterns like credential stuffing attacks, data exfiltration attempts, or insider threats. Many organizations use Kafka Connect connectors (such as the Splunk Kafka Connect, Elasticsearch Sink Connector, or HTTP Sink Connector) to stream audit logs directly to their SIEM platforms.

- OpenTelemetry integration has become standard practice in 2025 for unified observability. By instrumenting Kafka clients and brokers with OpenTelemetry, audit events can be correlated with traces, metrics, and logs across the entire distributed system. This provides end-to-end visibility from API gateway through streaming platform to downstream consumers, all with consistent correlation IDs and context propagation. For detailed coverage of tracing implementation, see Distributed Tracing for Kafka Applications.

- Real-time alerting adds proactive security capabilities. Rules can trigger alerts when administrative actions occur outside business hours, when production topics are accessed from development environments, or when authorization failures spike above threshold levels. Modern platforms increasingly use AI-powered anomaly detection to identify subtle patterns that rule-based systems might miss, such as unusual access patterns, privilege escalation attempts, or data exfiltration that mimics legitimate traffic. For comprehensive coverage of monitoring and observability practices including audit log analysis, see What is Data Observability: The Five Pillars.

Implementation Challenges and Solutions

The scale of streaming systems creates unique audit challenges. High-volume logging can generate millions of audit events per second in large deployments. Solutions include sampling strategies for routine operations while preserving full logging for sensitive resources, and batching audit events to reduce I/O overhead.

- Performance impact must be minimized. Synchronous audit logging that blocks operations can introduce unacceptable latency. Asynchronous logging with dedicated threads or separate processes maintains throughput while ensuring audit events are eventually persisted.

- Sensitive data in logs presents a paradox: audit logs must capture enough detail to be useful, but shouldn't expose the very data they're meant to protect. Techniques include logging metadata without message payloads, redacting sensitive fields, and encrypting audit logs at rest and in transit. For strategies on protecting sensitive data while maintaining visibility, see Data Masking and Anonymization for Streaming.

- Log protection is critical, audit logs themselves are high-value targets for attackers seeking to cover their tracks. Immutable storage, cryptographic signatures, and restricted access ensure audit trail integrity. Some organizations write audit logs to write-once storage or blockchain-based systems to prevent tampering. For comprehensive coverage of securing data throughout its lifecycle, see Encryption at Rest and in Transit for Kafka.

Regulatory Requirements

Different regulations impose specific audit logging requirements. GDPR mandates the ability to demonstrate lawful processing, requiring audit trails that show when personal data was accessed, by whom, and for what purpose. Organizations must also log data subject access requests, deletions, and consent changes.

- SOX compliance for financial reporting requires audit trails that establish data lineage and change control. Every modification to financial data streams must be traceable to an authorized user and business process.

- HIPAA for healthcare data demands detailed access logs showing who viewed protected health information. These logs must be readily available for patient requests and compliance audits.

- PCI-DSS for payment card data requires comprehensive logging of all access to cardholder data environments, with logs protected from modification and retained for at least one year.

Industry-specific regulations add further requirements. Financial services face requirements from FINRA and SEC, while government contractors must meet FISMA and FedRAMP audit standards.

Best Practices for Streaming Audit Logs

Implementing effective audit logging requires adherence to established best practices. Structured logging formats using JSON or standardized schemas enable automated parsing and analysis. Consistent field naming and taxonomies allow correlation across different systems and platforms.

- Time synchronization across distributed systems ensures accurate sequencing of events. NTP-synchronized clocks prevent scenarios where audit logs show effects before causes due to clock skew.

- Comprehensive coverage means auditing not just Kafka brokers but the entire streaming ecosystem: schema registries, Connect clusters, ksqlDB servers, and stream processing applications. Gaps in audit coverage create blind spots for security and compliance. For framework-level guidance on implementing comprehensive governance, see Data Governance Framework: Roles and Responsibilities.

- Access control for audit logs must be strictly managed. Only security and compliance personnel should have read access, with all access to audit logs themselves generating higher-level audit events, auditing the auditors. For modern security architectures that treat audit logging as a core component, see Zero Trust for Streaming.

- Regular review processes ensure audit logs fulfill their purpose. Periodic access reviews, automated anomaly detection, and compliance report generation turn raw audit data into actionable insights.

- Testing and validation verify that audit logging captures events correctly and completely. Regular drills that simulate security incidents or compliance audits validate the utility of audit trails.

- Cloud-native audit solutions integrate seamlessly with managed Kafka services. AWS CloudTrail automatically captures API calls to Amazon MSK (Managed Streaming for Apache Kafka), while Azure Monitor logs provide comprehensive audit trails for Azure Event Hubs. Google Cloud's Cloud Audit Logs track operations on Pub/Sub and Kafka on Google Kubernetes Engine. These cloud provider audit services complement application-level Kafka audit logging, providing defense-in-depth visibility.

Governance platforms like Conduktor provide enterprise-grade audit capabilities purpose-built for Kafka environments. Conduktor's audit logs offer centralized audit log collection, compliance reporting, and integration with security tools. Conduktor Gateway extends these capabilities by intercepting all Kafka traffic at the proxy layer, enabling:

- Field-level audit logging: Track access to specific message fields, not just topics

- Real-time data masking: Redact sensitive fields while maintaining audit trails of unmasked access

- Chaos engineering with audit: Test failure scenarios while maintaining complete audit trails (for chaos testing strategies, see Chaos Engineering for Streaming Systems)

- Policy enforcement: Block unauthorized operations before they reach brokers, with full audit logging

- Multi-cluster audit aggregation: Unified audit view across development, staging, and production clusters

Such platforms reduce the operational burden of building and maintaining custom audit infrastructure while ensuring comprehensive coverage that meets regulatory requirements.

Building a Culture of Accountability

Streaming audit logs do more than satisfy compliance checkboxes, they create organizational accountability. When every action is logged and traceable, teams develop greater awareness of their access to sensitive data. Audit trails enable learning from incidents, improving security posture, and demonstrating responsible data stewardship to customers and regulators.

As streaming platforms grow in importance, audit logging capabilities must scale in parallel. The investment in comprehensive, performant, and compliant audit logging pays dividends in reduced security risk, simplified compliance, and the confidence that comes from complete visibility into your streaming data infrastructure.

Related Concepts

- Audit Logging for Streaming Platforms - Core audit logging concepts and implementation

- Data Governance Framework: Roles and Responsibilities - Organizational structure supporting audit compliance

- Policy Enforcement in Streaming - Automated governance generating audit events

Sources and References

- Apache Kafka Security and Audit Logging - Official documentation on authorization and audit capabilities

- NIST SP 800-92: Guide to Computer Security Log Management - Federal guidelines for security log management

- GDPR Article 30: Records of Processing Activities - EU requirements for audit and processing records

- PCI DSS Requirement 10: Log and Monitor Access - Payment card industry audit logging requirements

- Splunk for Kafka Monitoring - SIEM integration for streaming audit logs

Written by Stéphane Derosiaux · Last updated February 11, 2026