Kafka Topics, Partitions, and Brokers: Core Architecture

Apache Kafka's architecture is built on three fundamental components: topics, partitions, and brokers. Understanding how these elements interact is essential for designing robust data streaming systems. This article explores each component and explains how they work together to deliver Kafka's core capabilities: scalability, fault tolerance, and high throughput.

Topics: The Logical Organization Layer

A topic in Kafka represents a logical stream of records. Think of it as a category or feed name to which producers publish data and from which consumers read data. Under the hood, a topic is stored as one or more append-only logs (its partitions, covered below), and each log keeps records in the order they arrive.

Each topic has a name (e.g., user-events, payment-transactions) and can handle different types of data. Topics are schema-agnostic at the broker level, though producers and consumers typically agree on a data format (JSON, Avro, Protobuf).

Key characteristics of topics:

- Append-only: Records are always added to the end of the log

- Immutable: Once written, records cannot be modified

- Retention-based: Records are retained based on time or size limits, not consumption

- Multi-subscriber: Multiple consumer groups can independently read the same topic
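The characteristics above can be modeled with a toy in-memory log. The sketch below is a hypothetical illustration: the class `TopicLogSketch` and its methods are invented for this example and are not Kafka APIs. Appends return sequential offsets, existing records are exposed read-only, and each consumer group keeps its own read position. Retention is not modeled.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical in-memory model of a single-partition topic log.
 * Not Kafka's storage engine: it only illustrates append-only writes,
 * sequential offsets, and independent read positions per consumer group.
 */
public class TopicLogSketch {
    private final List<String> records = new ArrayList<>();
    // Each consumer group tracks its own next offset to read.
    private final Map<String, Long> groupPositions = new HashMap<>();

    /** Appends a record to the end of the log and returns its offset. */
    public long append(String value) {
        records.add(value);
        return records.size() - 1;
    }

    /** Read-only view: callers cannot modify records already written. */
    public List<String> snapshot() {
        return Collections.unmodifiableList(records);
    }

    /** Returns up to maxRecords starting at the group's position, then advances it. */
    public List<String> poll(String groupId, int maxRecords) {
        int from = (int) (long) groupPositions.getOrDefault(groupId, 0L);
        int to = Math.min(records.size(), from + maxRecords);
        List<String> batch = new ArrayList<>(records.subList(from, to));
        groupPositions.put(groupId, (long) to);
        return batch;
    }

    public static void main(String[] args) {
        TopicLogSketch log = new TopicLogSketch();
        log.append("login");
        log.append("click");
        log.append("logout");
        // Two groups read the same records independently.
        System.out.println(log.poll("analytics", 2)); // [login, click]
        System.out.println(log.poll("billing", 3));   // [login, click, logout]
        System.out.println(log.poll("analytics", 2)); // [logout]
    }
}
```

Because the "analytics" group's position does not affect the "billing" group, both read the full log at their own pace, which mirrors the multi-subscriber property.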

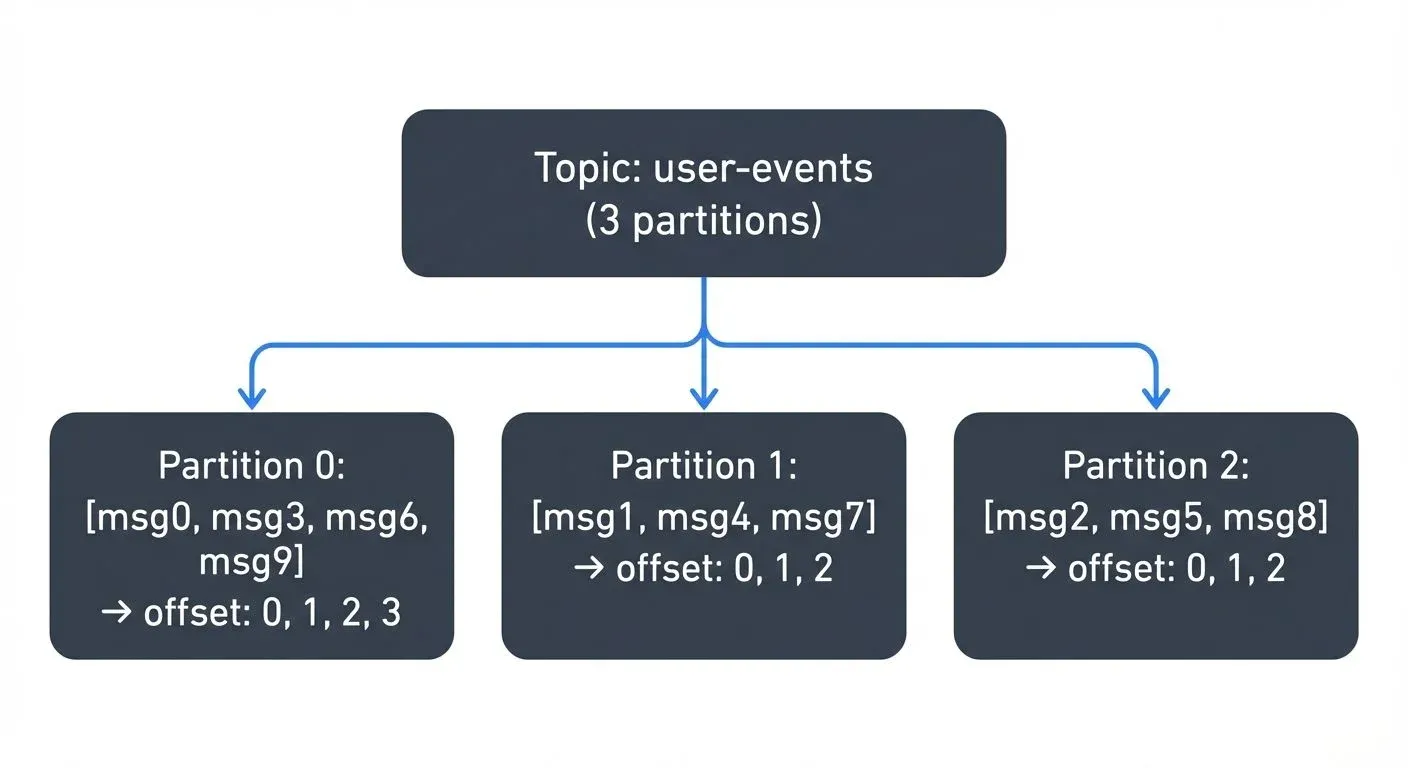

Partitions: The Scalability Mechanism

While topics provide logical organization, partitions enable Kafka's scalability. Each topic is split into one or more partitions, which are the fundamental unit of parallelism and distribution in Kafka.

How Partitions Work

Each partition is an ordered, immutable sequence of records. Records within a partition have a unique sequential ID called an offset. When a producer sends a message to a topic, the producer's partitioner assigns it to a specific partition based on:

- Explicit partition: Producer specifies the partition number

- Key-based routing: Hash of the message key determines the partition (guarantees ordering for the same key, as long as the partition count does not change)

- No key: Records are spread across partitions; since Kafka 2.4 the default partitioner uses sticky partitioning, filling a batch for one partition before moving to the next rather than alternating strictly per record

```java
import org.apache.kafka.clients.producer.ProducerRecord;

// Key-based routing example (Java)
String eventData = "{\"event\":\"login\"}"; // placeholder payload for illustration
ProducerRecord<String, String> keyedRecord =
    new ProducerRecord<>("user-events", "user-123", eventData);
// All messages with key "user-123" go to the same partition

// Explicit partition
ProducerRecord<String, String> explicitRecord =
    new ProducerRecord<>("user-events", 2, "user-123", eventData);
// Forces message to partition 2
```
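Key-based routing can be sketched in plain Java. This sketch is modeled on the murmur2 hash that Kafka's Java producer applies to serialized key bytes for keyed records; the class and method names are hypothetical, the explicit-partition and null-key paths are ignored, and exact partition numbers should be verified against your client version rather than taken from this code. It also makes the ordering caveat concrete: the result depends on `numPartitions`, so adding partitions remaps keys.

```java
import java.nio.charset.StandardCharsets;

/**
 * Sketch of key-based partition selection, modeled on the murmur2 hash used
 * by Kafka's Java client for keyed records. Hypothetical helper, not a Kafka API.
 */
public class KeyPartitionerSketch {

    /** 32-bit murmur2 hash with the constants used by Kafka's client. */
    public static int murmur2(byte[] data) {
        int length = data.length;
        int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the remaining 1-3 bytes (intentional switch fall-through).
        switch (length % 4) {
            case 3: h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2: h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1: h ^= data[length & ~3] & 0xff;
                    h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    /** Maps a key to a partition: non-negative hash modulo partition count. */
    public static int partitionForKey(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"user-123", "user-456", "user-123"}) {
            System.out.println(key + " -> partition " + partitionForKey(key, 10));
        }
    }
}
```

Running `main` prints the same partition for both occurrences of `user-123`, which is what gives per-key ordering.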

Partition Count Trade-offs

Choosing the right number of partitions involves several considerations:

More partitions enable:

- Higher parallelism (more consumers can read simultaneously)

- Better throughput distribution across brokers

- Finer-grained scaling

But too many partitions can cause:

- Longer leader election times during failures

- More memory overhead per partition

- Increased latency for end-to-end replication

- Higher metadata overhead in the controller

A common starting point: calculate partitions based on desired throughput divided by single-partition throughput, typically resulting in 6-12 partitions per topic for moderate-scale systems.

Example Calculation:

- Target throughput: 100 MB/s

- Single partition throughput: ~10 MB/s

- Recommended partitions: 100 / 10 = 10 partitions
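The example uses a single per-partition figure. A common refinement is to measure producer and consumer throughput per partition separately and take the larger of the two resulting counts, since whichever side is slower limits the topic. The sketch below assumes those per-partition figures are numbers you have measured yourself; the class and method are hypothetical.

```java
/**
 * Rough partition-count estimate: target throughput divided by measured
 * per-partition throughput, taking the more constrained of the producer and
 * consumer sides. A starting point only; measure on your own hardware.
 */
public class PartitionEstimateSketch {

    public static int estimatePartitions(double targetMBps, double producerMBpsPerPartition,
                                         double consumerMBpsPerPartition) {
        int forProducers = (int) Math.ceil(targetMBps / producerMBpsPerPartition);
        int forConsumers = (int) Math.ceil(targetMBps / consumerMBpsPerPartition);
        return Math.max(forProducers, forConsumers);
    }

    public static void main(String[] args) {
        // Numbers from the example calculation: 100 MB/s target, ~10 MB/s per partition.
        System.out.println(estimatePartitions(100, 10, 10)); // 10
        // If consumers only process ~5 MB/s per partition, the consumer side dominates.
        System.out.println(estimatePartitions(100, 10, 5));  // 20
    }
}
```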

Topic Creation Example:

```shell
# Kafka 4.0+ with KRaft
# retention.ms=604800000 keeps records for 7 days
kafka-topics.sh --bootstrap-server localhost:9092 \
  --create \
  --topic user-events \
  --partitions 10 \
  --replication-factor 3 \
  --config min.insync.replicas=2 \
  --config retention.ms=604800000

# Verify topic configuration
kafka-topics.sh --bootstrap-server localhost:9092 \
  --describe \
  --topic user-events
```

For comprehensive topic planning strategies, see Kafka Topic Design Guidelines.

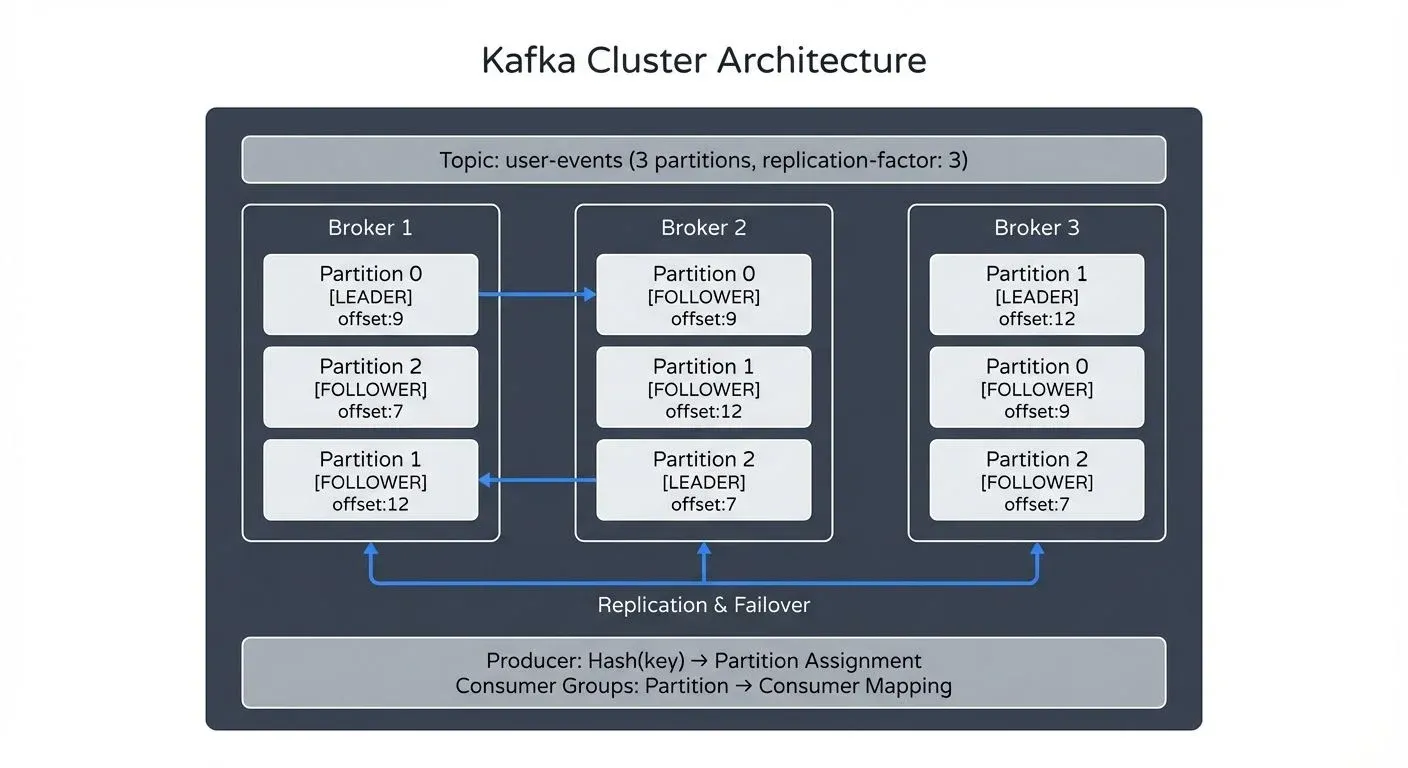

Brokers: The Storage and Coordination Layer

A broker is a Kafka server that stores data and serves client requests. A Kafka cluster consists of multiple brokers working together to distribute the load and provide fault tolerance.

Broker Responsibilities

Each broker in a cluster:

- Stores partition replicas assigned to it

- Handles read and write requests from producers and consumers

- Participates in partition leadership election

- Manages data retention and compaction

- Communicates with other brokers for replication

Brokers are identified by a unique integer ID. When you create a topic with multiple partitions, Kafka distributes partition replicas across available brokers.
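The core idea of spreading replicas can be sketched with a simple round-robin placement. This is a simplified, hypothetical model: Kafka's actual assignment also randomizes the starting broker, staggers follower placement, and can be rack-aware, so real layouts will differ.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Simplified round-robin replica placement (hypothetical helper).
 * The first broker in each list plays the role of the preferred leader.
 */
public class ReplicaPlacementSketch {

    /** Returns, for each partition, the broker IDs hosting its replicas (leader first). */
    public static List<List<Integer>> assign(int partitions, int replicationFactor,
                                             List<Integer> brokerIds) {
        int n = brokerIds.size();
        if (replicationFactor > n) {
            throw new IllegalArgumentException("replication factor exceeds broker count");
        }
        List<List<Integer>> assignment = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int j = 0; j < replicationFactor; j++) {
                // Consecutive brokers, starting one position later for each partition.
                replicas.add(brokerIds.get((p + j) % n));
            }
            assignment.add(replicas);
        }
        return assignment;
    }

    public static void main(String[] args) {
        List<List<Integer>> plan = assign(4, 3, List.of(1, 2, 3));
        for (int p = 0; p < plan.size(); p++) {
            System.out.println("Partition " + p + ": leader " + plan.get(p).get(0)
                    + ", replicas " + plan.get(p));
        }
    }
}
```

Shifting the starting broker per partition is what spreads leadership: with three brokers, partitions 0, 1, and 2 get different preferred leaders.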

Replication and Leadership

For fault tolerance, each partition is replicated across multiple brokers. One replica serves as the leader (it handles all writes and, by default, all reads), while the others are followers that replicate data from the leader.

```
Topic: transactions (2 partitions, replication-factor: 3)

Partition 0:
  Leader: Broker 1
  Followers: Broker 2, Broker 3

Partition 1:
  Leader: Broker 2
  Followers: Broker 1, Broker 3
```

If a broker fails, the controller elects a new leader for each partition that broker was leading, choosing from the in-sync replicas (ISR): replicas that have caught up with the leader's log within the replica.lag.time.max.ms threshold. Because only in-sync replicas can become leaders (with unclean leader election disabled, the default), the partition stays available without losing committed records.
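The failover rule can be sketched as a small function. This is a simplified, hypothetical model: it picks the first replica, in assignment order, that is both alive and in the ISR, and it deliberately does not model unclean election or the controller's bookkeeping.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

/**
 * Simplified model of leader failover: choose the first assigned replica
 * that is both alive and in the ISR; otherwise leave the partition leaderless.
 */
public class LeaderElectionSketch {

    public static Optional<Integer> electLeader(List<Integer> assignedReplicas,
                                                Set<Integer> isr,
                                                Set<Integer> liveBrokers) {
        for (int broker : assignedReplicas) {
            if (isr.contains(broker) && liveBrokers.contains(broker)) {
                return Optional.of(broker);
            }
        }
        // No eligible replica: the partition stays offline rather than risk losing data.
        return Optional.empty();
    }

    public static void main(String[] args) {
        List<Integer> partition0 = List.of(1, 2, 3); // Broker 1 is the current leader
        // Broker 1 fails; brokers 2 and 3 are still in sync.
        System.out.println(electLeader(partition0, Set.of(1, 2, 3), Set.of(2, 3))); // Optional[2]
        // Broker 1 fails while it was the only in-sync replica.
        System.out.println(electLeader(partition0, Set.of(1), Set.of(2, 3)));       // Optional.empty
    }
}
```

The second case shows the trade-off: refusing to elect an out-of-sync replica preserves committed data at the cost of availability.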

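Durability Configuration:

For critical data, set min.insync.replicas (typically 2) together with the producer setting acks=all. The leader then acknowledges a write only after every in-sync replica has it, and rejects writes when fewer than min.insync.replicas replicas are in sync. With replication factor 3 and min.insync.replicas=2, every acknowledged record exists on at least two brokers, so it survives the failure of any single broker as long as unclean leader election stays disabled.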
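On the producer side, the durable settings can be collected into a `java.util.Properties` object. The sketch builds plain `Properties` so it compiles without the Kafka client on the classpath; the keys are standard Kafka producer config names, while the helper method itself is hypothetical. In a real application you would pass the result to a `KafkaProducer`.

```java
import java.util.Properties;

/**
 * Producer settings for durable writes (hypothetical helper). Keys are
 * standard Kafka producer config names; values are illustrative.
 */
public class DurableProducerConfigSketch {

    public static Properties durableProducerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Wait for all in-sync replicas; the broker then enforces min.insync.replicas.
        props.setProperty("acks", "all");
        // Idempotence avoids duplicates from the producer's internal retries.
        props.setProperty("enable.idempotence", "true");
        props.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    public static void main(String[] args) {
        Properties props = durableProducerProps("localhost:9092");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```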

For comprehensive replication strategies, see Kafka Replication and High Availability.

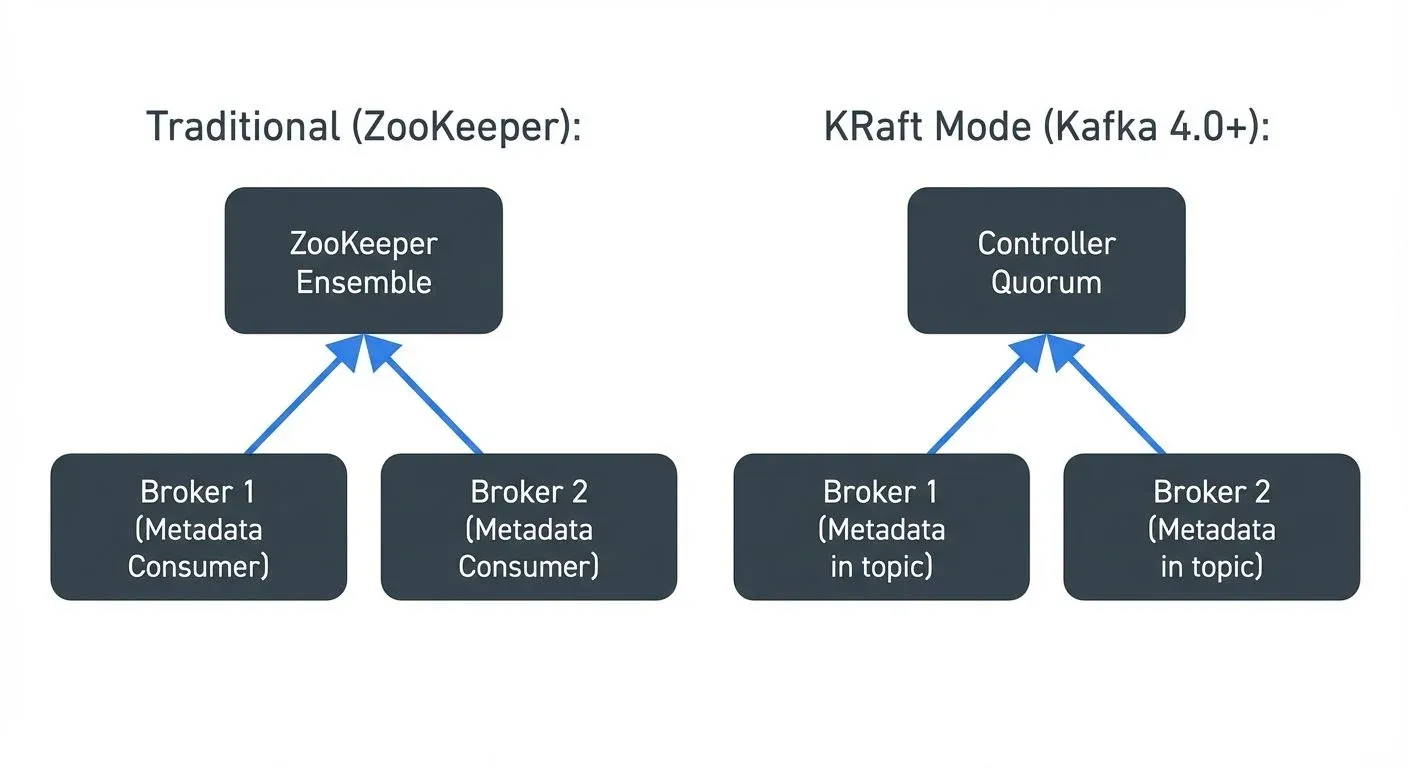

Metadata Management with KRaft (Kafka 4.0+)

KRaft (Kafka Raft) replaces ZooKeeper for metadata management: it was marked production-ready in Kafka 3.3, ZooKeeper mode was deprecated in 3.5, and Kafka 4.0 removed ZooKeeper support entirely. KRaft fundamentally changes how Kafka stores and manages cluster metadata.

How KRaft Works:

Instead of external ZooKeeper nodes, dedicated controller nodes (or combined broker-controller nodes) form a Raft quorum that manages metadata. Cluster state (topic configurations, partition assignments, broker registrations) is stored in an internal __cluster_metadata topic, treated as the source of truth.
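Controller quorum sizing follows from Raft's majority rule: a quorum of n voters needs a majority to commit metadata and elect a leader, so it keeps working with up to (n - 1) / 2 voters down. The arithmetic is sketched below; the class is a hypothetical illustration, not part of Kafka.

```java
/**
 * Majority-quorum arithmetic behind KRaft controller sizing.
 */
public class QuorumSizingSketch {

    /** Smallest number of voters that forms a majority. */
    public static int majority(int voters) {
        return voters / 2 + 1;
    }

    /** Voters that can fail while a majority remains available. */
    public static int toleratedFailures(int voters) {
        return (voters - 1) / 2;
    }

    public static void main(String[] args) {
        for (int voters = 1; voters <= 5; voters++) {
            System.out.println(voters + " controllers: majority " + majority(voters)
                    + ", tolerates " + toleratedFailures(voters) + " failure(s)");
        }
    }
}
```

The output shows why odd quorum sizes are preferred: four controllers tolerate only one failure, the same as three, while five tolerate two.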

KRaft Benefits:

- Faster failover: Controller election happens in milliseconds instead of seconds

- Scalability: Supports millions of partitions per cluster (vs. ZooKeeper's ~200K limit)

- Simplified operations: No external coordination service to maintain

- Stronger consistency: Raft consensus algorithm provides clearer guarantees

- Improved security: Unified authentication and authorization model


For in-depth coverage of KRaft architecture and migration strategies, see Understanding KRaft Mode in Kafka.

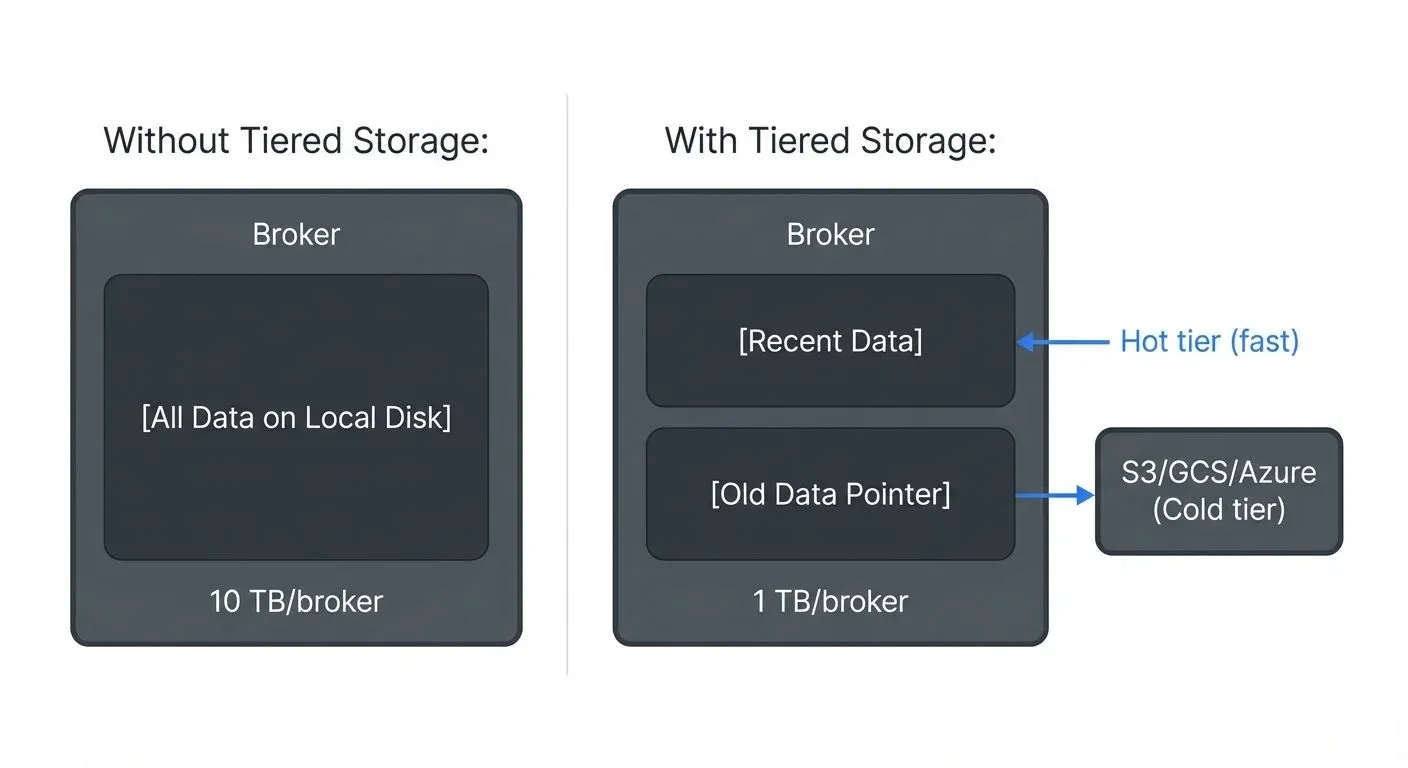

Tiered Storage Architecture (Kafka 3.6+)

Tiered storage, introduced as early access in Kafka 3.6 (KIP-405) and declared production-ready in Kafka 3.9, fundamentally changes how brokers manage data retention. It allows brokers to offload older log segments to remote object storage (S3, GCS, Azure Blob Storage) while keeping recent, frequently accessed data on local disks.

How Tiered Storage Works:

- Hot tier: Recent segments stored on broker local disk (fast access)

- Cold tier: Older segments archived to object storage (cost-efficient)

- Transparent consumption: Consumers fetch through the broker as usual; the broker reads from whichever tier holds the requested offsets

- Retention policy: Configure local.retention.ms (hot tier) and retention.ms (total retention)
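How the two retention settings interact can be sketched with an age-only model. This is a simplified, hypothetical illustration: real brokers also apply size limits (local.retention.bytes, retention.bytes), upload closed segments asynchronously, never offload the active segment, and require tiered storage to be enabled for the topic (remote.storage.enable=true). The retention values in `main` are made up.

```java
/**
 * Simplified, age-only model of where a closed log segment lives when
 * tiered storage is enabled (hypothetical helper, not a Kafka API).
 */
public class TierPlacementSketch {

    public enum Tier { LOCAL_AND_REMOTE, REMOTE_ONLY, DELETED }

    public static Tier tierFor(long segmentAgeMs, long localRetentionMs, long retentionMs) {
        if (segmentAgeMs >= retentionMs) {
            return Tier.DELETED;           // past total retention: removed from both tiers
        }
        if (segmentAgeMs >= localRetentionMs) {
            return Tier.REMOTE_ONLY;       // past local retention: served from object storage
        }
        return Tier.LOCAL_AND_REMOTE;      // still on the hot tier (typically also copied remote)
    }

    public static void main(String[] args) {
        long day = 24L * 60 * 60 * 1000;
        long localRetention = 1 * day;     // hypothetical: keep 1 day on broker disk
        long totalRetention = 30 * day;    // hypothetical: keep 30 days in total
        for (long ageDays : new long[] {0, 2, 45}) {
            System.out.println(ageDays + " day(s) old -> "
                    + tierFor(ageDays * day, localRetention, totalRetention));
        }
    }
}
```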


Why It Matters:

- Decoupled storage: Retention no longer tied to disk capacity

- Cost reduction: Object storage is ~90% cheaper than broker disk

- Longer retention: Keep months or years of data without scaling brokers

- Faster recovery: New brokers don't need to replicate cold data

- Better elasticity: Scale compute (brokers) independently from storage

For detailed configuration and best practices, see Tiered Storage in Kafka.

How Components Work Together

The interaction between topics, partitions, and brokers creates Kafka's distributed log architecture.

This architecture enables:

- Horizontal scalability: Add brokers to increase storage and throughput

- Fault tolerance: Replica failover ensures availability

- Ordering guarantees: Per-partition ordering with key-based routing

- Independent consumption: Multiple consumer groups process data at their own pace
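Parallelism and independent consumption both come from how consumer groups divide partitions: within one group, each partition is read by exactly one consumer, while separate groups each receive the full set. The round-robin sketch below is a hypothetical illustration; Kafka's real assignors (range, round-robin, sticky, cooperative-sticky) differ in detail.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Round-robin sketch of how one consumer group divides a topic's partitions.
 * Calling assign() again for another group would give that group its own
 * full set of partitions, since groups are independent.
 */
public class GroupAssignmentSketch {

    public static Map<String, List<Integer>> assign(int partitions, List<String> consumers) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        for (String consumer : consumers) {
            result.put(consumer, new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            // Each partition goes to exactly one consumer in the group.
            result.get(consumers.get(p % consumers.size())).add(p);
        }
        return result;
    }

    public static void main(String[] args) {
        // 10 partitions shared by 4 consumers in one group.
        System.out.println(assign(10, List.of("c1", "c2", "c3", "c4")));
        // More consumers than partitions: the extra consumer sits idle.
        System.out.println(assign(3, List.of("c1", "c2", "c3", "c4")));
    }
}
```

The second call shows why partition count caps a group's parallelism: consumers beyond the number of partitions receive nothing to read.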

Kafka in the Data Streaming Ecosystem

Kafka's architecture makes it the central nervous system of modern data platforms. Topics serve as durable, replayable event streams that connect disparate systems:

- Stream processing: Frameworks like Kafka Streams and Flink consume from topics, transform data, and write to new topics

- Data integration: Kafka Connect uses topics as intermediaries between source and sink systems

- Event-driven microservices: Services publish domain events to topics and subscribe to events from other services

- Analytics pipelines: Data flows from operational topics into data lakes and warehouses

For foundational understanding of Kafka's role in data platforms, see Apache Kafka.

For complex deployments, tools like Conduktor provide visibility into topic configurations, partition distribution, and broker health. This governance layer helps teams understand data lineage, monitor consumer lag across partitions (see Consumer Lag Monitoring), and ensure replication factors meet compliance requirements. Conduktor enables centralized management of topics and brokers across multiple Kafka clusters; see the Topics Management and Brokers Management documentation for hands-on guidance.

Related Concepts:

- Producer and consumer client interactions: Kafka Producers and Consumers

- Message format and serialization: Message Serialization in Kafka

- Schema management: Schema Registry and Schema Management

- Security configurations: Kafka Security Best Practices


- Kafka Replication and High Availability - Explores how replication across brokers ensures data durability and cluster resilience.

- Kafka Partitioning Strategies and Best Practices - Deep dive into choosing partition keys and sizing partition counts for optimal performance.

- Tiered Storage in Kafka - Modern approach to separating hot and cold data storage for cost-effective long-term retention.

Summary

Kafka's architecture is elegantly simple yet powerful. Topics provide logical organization, partitions enable horizontal scaling and parallelism, and brokers offer distributed storage with fault tolerance. With Kafka 4.0+, the shift to KRaft and tiered storage has modernized this architecture for cloud-native deployments.

When planning your Kafka deployment:

- Choose partition counts based on throughput requirements and parallelism needs (10-100 partitions for most use cases)

- Set replication factors (typically 3) to balance fault tolerance and resource usage

- Configure min.insync.replicas=2 with producer acks=all for critical data

- Distribute partition leadership across brokers to avoid hotspots

- Monitor ISR status to ensure replicas stay synchronized

- Adopt KRaft mode for new deployments (required in Kafka 4.0+)

- Consider tiered storage (Kafka 3.6+) for long retention windows to reduce costs

- Use rack awareness for partition placement in multi-AZ deployments

Modern Architecture Considerations (2025):

- KRaft eliminates operational complexity and improves scalability

- Tiered storage decouples storage costs from compute capacity

- Controller quorum sizing: 3-5 nodes for production KRaft clusters

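- Partition leadership balancing: Rely on automatic preferred-leader rebalancing (auto.leader.rebalance.enable, enabled by default) to keep leadership spread evenly across brokers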

Mastering these architectural fundamentals positions you to build scalable, reliable streaming platforms that form the backbone of modern data infrastructure.


Written by Stéphane Derosiaux · Last updated February 11, 2026